Archiving and purging old data

In the end, if you want to seriously reduce the effective size of a database (after using all innovations on the infrastructure level) is to move data out of the database on to something else. This is a bit against Oracle’s preferred approach as they propose to hold as much of the application data in the database for as long as possible (I wonder why…)

We could separate all archiving methods into two categories:

- Methods that don’t change the RDBMS representation and just move tables or records to a different location in the same or different database;

- Methods that convert database records into something else and remove it from the database layer completely

Intuitively, the first method is the most simple to implement but with limited results, the second would have the most impact on database size but is often very hard to implement, because of relational dependencies that have to be kept in the application.

In the first category the solution often consists of creating an archival database (typically with less CPU, memory and I/O bandwidth to reduce cost, and mainly using low cost storage such as SATA disk). The data is typically archived periodically (say, every month) so that in between archive runs, the database can be kept read-only. By not changing the database in the meantime, it is sufficient to only take a full backup right after every archive run. If the database is replicated in a certain way to a disaster recovery location, you could possibly get rid of backups completely (but this is a tricky, near-religious discussion I normally prefer to avoid).

If the application structure is simple, sometimes partitioning in combination with Oracle “transportable table spaces” can be used. The oldest partition is then dismounted from the database and either moved to the archive database, stored offline (as a set of files in an archive or backup system) or sometimes even completely deleted.

In terms of application transparency I get different feedback from customers. Some claim that having recent and older table data spread out over more than one database (connected with a database link, or using the service bus layer, or something else) results in some additional application problems – and yes, of course queries on the archive system will run slower than on the primary system – so careful design is key to success. And maybe some inefficient transactions need to be optimized. And maybe some queries cannot deal with read-only data and part of the archive database needs to be enabled for updates. Apart from this, I have not found other reasons why an archive database could not work, but I’m open to expert feedback on this topic).

The second category where you convert database record to, typically, something like XML, or move LOBs out of the database (simple: to a NAS file share, or more sophisticated: in a content management system) is harder to implement but also with (potentially) better end results. Oracle will try to convince customers to stay away from this, and indeed, such implementations are complex and tend to be expensive projects, only suitable for very large environments where the cost of purchasing more and more disk capacity in the end is more expensive than to build an effective archiving platform.

Depending on the chosen method it is sometimes possible to have transparency for end users if they need to access old records. Customers either use one front-end to access both actual and archived data, or there is a separate view into archived data (ideally looking much like the primary application).

Note that some business applications offer archiving modules out of the box (i.e. SAP XML archiving, Archivelink).

EMC has formed a strategic partnership with Informatica, a company that is specialized in database archiving and offering both of the methods described – allowing EMC’s storage technology portfolio to be used in the most optimal way.

Finding “cold” and “hot” data in the database

Most customers I talk to certainly agree that too much “cold” data is just sitting in their database consuming valuable resources, but some have problems finding a starting point. Where is the cold data? What data is never accessed but suddenly needed at quarter-end runs? Which user is writing inefficient queries, that scan full tables to find just a few records of relevant information? What would be the cost versus the benefit of moving this data to something else than expensive, high speed disk?

When EMC came out with Flash drives early 2008 we expected customers to buy this innovative technology like crazy to definitively solve all performance issues once and for all. Due to the high cost back then, they were actually very reluctant, and could often not justify this even for mission critical databases. Therefore at EMC we developed a strategy to get the most result out of minor investments – not just by implementing Flash drives but by implementing an Information Lifecycle Management strategy for databases.

Finding cold data can be done using Oracle standard tools, such as Statspack or Automatic Workload Repository (AWR). These tools give a good indication of performance bottlenecks in the database that could benefit from storage tiering (especially with Flash drives). It does not provide comprehensive details on what kind of partitioning strategy would work or what data is candidate for archiving or moving to lower tiers.

For this, a specialized tool is available (DBClassify from EMC’s partner Zettapoint) that can find trends in access patterns of data on a very granular level (such as a certain column in a table not being used) etc. Running this tool for a couple of weeks often results in new insights in application behavior that database administrators were not previously aware of.

Oracle’s own ILM Assistant can help defining policies that advises administrators when to move data and what data within the database, where tools like those from Informatica can move data to an archive database or XML-style archive pool.

Care must be taken that data that seems idle for long periods can suddenly become active (such as during year-end runs) so defining policies is often based on technical as well as business rules.

The total picture

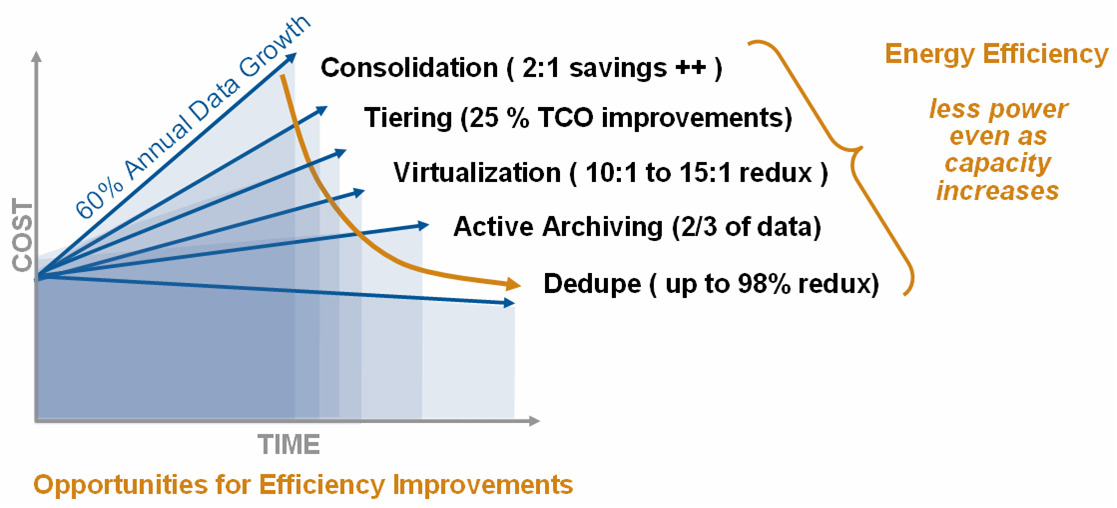

By combining all innovations, tools and management methods into one, we find that it is often possible to get drastic reduction in total cost, with the added effect of improving service levels and performance.

Much of the required technology shown in this picture is already available – with some new ones on the horizon.

With databases that keep growing even in this hard economic period, associated with all the technical and cost issues that go along with this, we will see more and more customers looking at storage and database innovations, to start having more control over their environments.

With databases that keep growing even in this hard economic period, associated with all the technical and cost issues that go along with this, we will see more and more customers looking at storage and database innovations, to start having more control over their environments.

![]()