Before showing my preferred solution for Oracle stretched high availability clusters, first some clustering basics.

Active/passive versus Active/Active clusters

Most clustering software is based on active/passive scenarios. You have a system (say, a database) that is running on a set of resources (i.e. a server, to keep it simple) and you have another system (a standby system) that is ready to run the system (failover) but is not actually running it at the same time.

An Active/Active system in general means both systems are active at the same time. There is some confusion as this can mean that the standby system is used for other processing (say, A is production and B is standby but currently running acceptance or testing environments).

By my definition, active/active clustering describes a cluster where all cluster nodes (systems) are processing against the same data set at the same time. There aren’t many products that can do this, especially in the database world. One of the few exceptions is Oracle RAC.

Failover in active/passive systems

When for some reason the primary system fails, in active/passive clusters, the standby system takes over and starts processing. Considering a database, you must make sure that the server accesses a full, consistent set of data to allow the database to run. A missing log or data file can ruin your whole day.

Recovery objectives

Also, you have to make sure it runs against the right data set. Running against an old copy of the data could mean you lose some important transactions (but this is not always avoidable). This is what is described with the term “Recovery Point Objective” or RPO. If you have to restore the database from tape, and the most recent tape copy can be up to 24 hours old, then the recovery point objective is 24 hours. The actual recovery point can be less than that (i.e. you lose less time because you have a more recent copy of the data).

The time it takes to restart the system is called “Recovery Time Objective” or RTO. If restoring from tape takes 14 hours, recovering the database one hour, and restarting the application servers another 30 minutes, then the RTO is 15.5 hours. This is oversimplified. I typically add the time it takes to detect that you have a failure in the first place, the time to decide that you are restarting the system at the alternate location (versus trying to fix the issue and restarting it locally), and the time to actually restart it.
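To make the arithmetic concrete, here is a minimal sketch in Python. The first three durations come from the example above; the detection and decision times are made-up values for illustration only, and the names `total_rto`, `detect_failure` and `decide_to_fail_over` are my own.

```python
from datetime import timedelta

# Durations taken from the example in the text.
restore_from_tape = timedelta(hours=14)
recover_database = timedelta(hours=1)
restart_app_servers = timedelta(minutes=30)


def total_rto(*phases):
    """Sum sequential recovery phases into a single RTO figure."""
    return sum(phases, timedelta())


basic_rto = total_rto(restore_from_tape, recover_database, restart_app_servers)
basic_rto_hours = basic_rto.total_seconds() / 3600  # 15.5

# Hypothetical extra phases (assumed values, not from the text).
detect_failure = timedelta(minutes=15)
decide_to_fail_over = timedelta(minutes=45)

realistic_rto = total_rto(detect_failure, decide_to_fail_over, basic_rto)
realistic_rto_hours = realistic_rto.total_seconds() / 3600  # 16.5
```

With these assumed values, detection and decision making add a full hour on top of the purely technical restore time.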

You could also define “Recovery Distance Objective” (how far does the standby system need to be away from the primary to not be harmed by the same disaster) and you could have different RPO / RTO / RDO sets based on different sorts of interruption (fire, earthquake, processor failure, user accidentally deleting some data, virus outbreak, security breaches) but I will save that discussion for another time.

As some companies are trying to shrink both the RPO and RTO to a minimum (ideally zero), active/active solutions are an option. In the case of Oracle RAC, there is no RTO (as the cluster will keep running even if a whole site fails) and no RPO (because you work on the same data set and do not use an older copy of the data). Of course you need to have synchronous data replication between the locations to achieve this (see my previous post on Oracle ASM for storage mirroring).

For individual transactions, in reality the recovery point and recovery time depend a bit on the application and how Oracle RAC is dealing with connections etc. (you might lose some uncommitted transactions anyway, or some batch jobs get killed and have to be restarted) but let’s keep the discussion simple for now.

In our discussion I will assume an active/active two-node cluster based on Oracle RAC.

Split brain syndrome

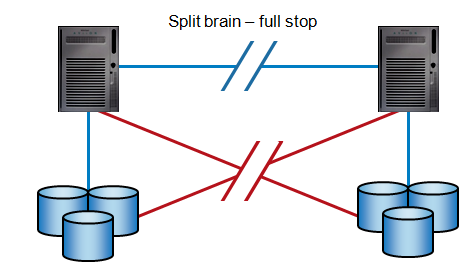

Consider a cluster where system A and system B are connected across a few kilometers. Instead of site A or B going offline, let’s say all communications fail (for example, a digging machine breaks all cables). System A cannot “see” system B any longer, and vice versa. If, for some reason, both systems keep running and accepting transactions, you seriously risk business level consistency. One transaction may be processed on system A, while the next might be processed on system B. So some of the new transactions end up on system A, and the rest on system B. Such situations, called split brain scenarios, are extremely dangerous, because no single system holds the single version of the truth anymore. Experience has shown that it is almost impossible to merge different business transactions back onto one system again. Even worse, applications might show erroneous behaviour, even when both (now individual) systems are technically consistent. This situation should be avoided at all times.
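The divergence can be illustrated with a toy model. This is only a sketch: the function `route_transactions` and the alternating routing are assumptions for illustration and say nothing about how Oracle RAC really distributes work.

```python
def route_transactions(transactions, partitioned):
    """Return the transactions recorded by node A and by node B."""
    seen_a, seen_b = [], []
    for i, tx in enumerate(transactions):
        if not partitioned:
            # Healthy cluster: both nodes work on the same data set.
            seen_a.append(tx)
            seen_b.append(tx)
        elif i % 2 == 0:
            # Split brain: this transaction is only known to node A.
            seen_a.append(tx)
        else:
            # Split brain: this transaction is only known to node B.
            seen_b.append(tx)
    return seen_a, seen_b


txs = ["tx1", "tx2", "tx3", "tx4"]
healthy_a, healthy_b = route_transactions(txs, partitioned=False)
split_a, split_b = route_transactions(txs, partitioned=True)
# healthy_a == healthy_b == txs
# split_a == ["tx1", "tx3"], split_b == ["tx2", "tx4"]
```

After the split, neither node holds the complete list, which is exactly the "no single version of the truth" problem.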

Preventing split brain

There are several ways to prevent split brain, and every cluster implementation should protect against it at all costs.

Here are the options.

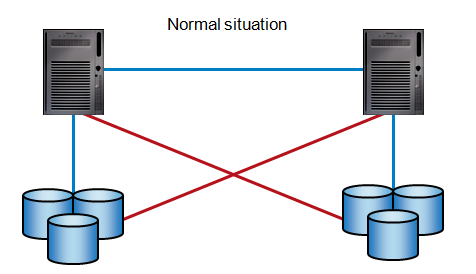

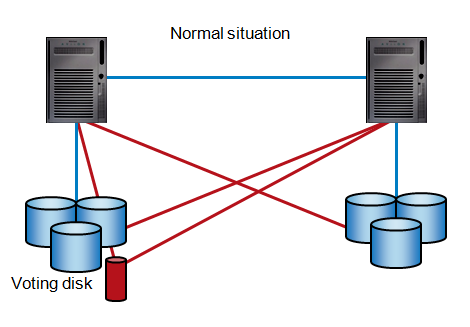

Consider a simple stretched cluster with mirrored servers and storage:

Both nodes actively process database transactions and both write to mirrored storage.

If all communication links fail, both systems just suspend transactions and wait for an operator to allow reprocessing on one of the systems. The operator(s) should be very careful to start only one system (if they manually start both, you’re in trouble).

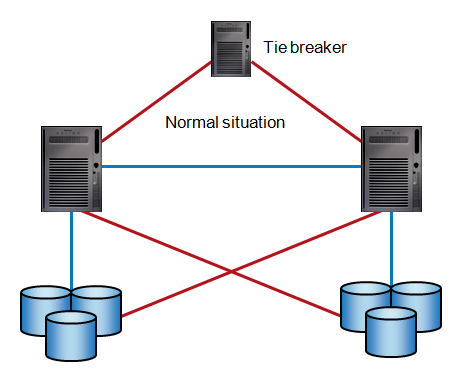

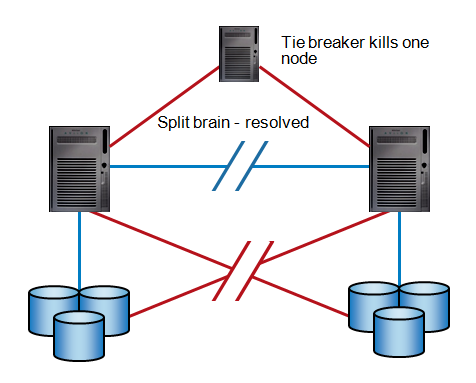

Tie breaker on 3rd location

If all communication links fail between site A and site B, a third location (Site C) decides which one is allowed to keep running and which one is suspended.

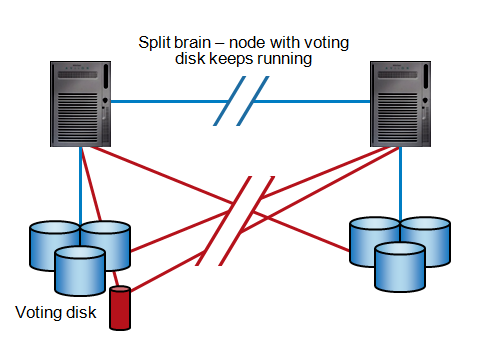
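The tie breaker decision can be sketched as a small function. This is a simplified model under my own assumptions: `surviving_sites`, its parameters and the `preferred` site are hypothetical names, and real clusterware uses heartbeats and timeouts rather than simple booleans.

```python
def surviving_sites(a_up, b_up, link_ab, link_ac, link_bc, preferred="A"):
    """Return the set of sites allowed to keep running.

    Site C only arbitrates; it never runs the database itself.
    """
    if a_up and b_up and link_ab:
        # A and B can see each other: no arbitration needed.
        return {"A", "B"}

    a_reaches_c = a_up and link_ac
    b_reaches_c = b_up and link_bc

    if a_reaches_c and b_reaches_c:
        # Both sites are alive but cannot see each other:
        # site C grants the cluster to exactly one of them.
        return {preferred}
    if a_reaches_c:
        return {"A"}
    if b_reaches_c:
        return {"B"}
    # Nobody can reach the tie breaker: everything suspends.
    return set()


link_failure = surviving_sites(True, True, False, True, True)    # {"A"}
site_a_lost = surviving_sites(False, True, False, False, True)   # {"B"}
all_links_lost = surviving_sites(True, True, False, False, False)  # set()
```

The important property is that, once A and B lose sight of each other, at most one site ever keeps running.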

Node Bias

One of the two systems somehow has preference over the other (for example because it holds the locking or voting disk).

If all communications fail, the system with the bias survives.

If the site with bias fails, the other site will go down as well (because it cannot distinguish between a remote site failure and a full link failure).

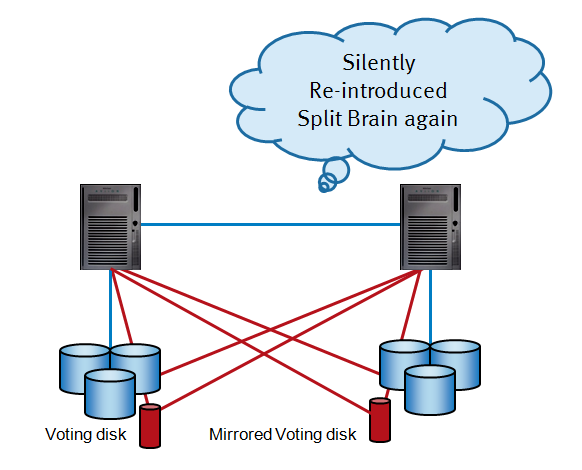
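The three node bias rules above can be expressed in a few lines. Again this is a sketch with hypothetical names (`survivors_with_bias`, `biased`), not an actual clusterware algorithm.

```python
def survivors_with_bias(a_up, b_up, link_ab, biased="A"):
    """Return the sites that keep running when one site has the bias."""
    alive = {site for site, up in (("A", a_up), ("B", b_up)) if up}
    if a_up and b_up and link_ab:
        # Normal operation: both nodes process transactions.
        return alive
    # The sites cannot see each other. The non-biased site cannot tell a
    # remote site failure from a link failure, so it always stops.
    return alive & {biased}


link_failure = survivors_with_bias(True, True, False)   # {"A"}
site_b_fails = survivors_with_bias(True, False, False)  # {"A"}
site_a_fails = survivors_with_bias(False, True, False)  # set()
```

The last case shows the price of this approach: losing the biased site takes down the whole cluster.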

Wrong implementation – mirroring the voting disk

Consider a customer (and believe me, they exist, I have known a few) who decided to mirror the voting disk.

Now if you lose all communications, each site still has access to the voting disk (there are actually two) and will keep running. Prepare for a long working day and hope your company survives.

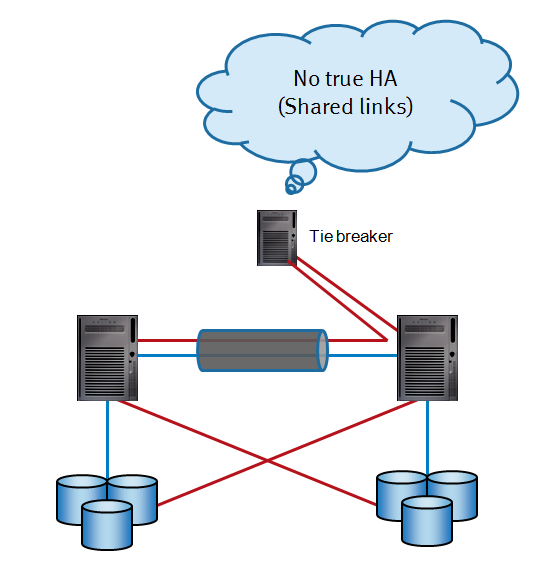
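Why mirroring the voting disk defeats its purpose can be shown with the same kind of toy model. The assumption here, which is mine, is that a site keeps running as long as it can reach a voting disk, and that after a full link failure it can only reach its own local storage. The name `sites_still_running` is hypothetical.

```python
def sites_still_running(voting_disk_sites):
    """Sites that keep running after every link between A and B fails.

    Assumption: a site survives only if a voting disk copy is local to it.
    """
    return {site for site in ("A", "B") if site in voting_disk_sites}


single_disk = sites_still_running({"A"})         # {"A"}
mirrored_disk = sites_still_running({"A", "B"})  # {"A", "B"}
split_brain = len(mirrored_disk) > 1             # True
```

With a single voting disk only one site survives; with a mirrored one, both do, and you have a split brain.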

Wrong implementation – sharing communications to the 3rd site

Although not as serious as the previous situation, I have seen people who tried to achieve high availability by implementing the 3rd location – but because dedicated communication links are expensive, some tried to save a penny by re-routing communications from A to C across site B.

Well, go figure: if site B goes down, the link from A to C goes down with it, so how can the tie breaker find out whether A is still alive? The whole cluster would go down anyway, so the 3rd location is pointless.
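A minimal sketch of the reachability problem, with hypothetical names (`a_can_reach_tiebreaker`, `dedicated_ac`) and links modelled as simple booleans:

```python
def a_can_reach_tiebreaker(b_up, link_ab, link_bc, dedicated_ac):
    """Can site A reach the tie breaker at site C?"""
    # Route through site B: needs B itself plus both links.
    via_b = b_up and link_ab and link_bc
    return dedicated_ac or via_b


# Cheap setup: A has no dedicated link to C and is routed across B.
shared_b_down = a_can_reach_tiebreaker(
    b_up=False, link_ab=False, link_bc=False, dedicated_ac=False
)  # False

# Proper setup: A has its own link to C.
dedicated_b_down = a_can_reach_tiebreaker(
    b_up=False, link_ab=False, link_bc=False, dedicated_ac=True
)  # True
```

In the shared setup, a failure of site B also cuts A off from the tie breaker, so A has to stop even though nothing is wrong with it.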

Furthermore, the whole idea of dealing with failure scenarios is tricky and cluster software should be very strictly configured. A lot of companies have tried various cluster offerings from various vendors, and some are notoriously complex, hard to set up and sensitive to errors. My experience is that most clusters add a lot of complexity, have their own issues (a problem with the clusterware can bring down the whole cluster, even if there is nothing else wrong), are sensitive to bugs and subtle configuration errors by their administrators, and so on.

As said, the principles of clustering are simple, but the devil is in the details…

In the next post I will describe the EMC solution.
