Today I gave a training session to fellow EMC colleagues on some Oracle fundamentals. One of the topics that came up was something I have heard several times before: Oracle claims that EMC SRDF (a data mirroring function of EMC Symmetrix enterprise storage systems, mainly used for enterprise disaster recovery) cannot detect certain types of data corruption that Oracle Data Guard can. Ouch. The trouble with this statement is that it is half-true (and half-truths are the most dangerous kind).

I am not dismissing Data Guard as a valid database replication tool; as I have described before, both EMC SRDF and Data Guard have advantages and disadvantages. But I hate being confronted with misconceptions about our products (possibly FUD, but let’s hope it’s just a misunderstanding of the technology), especially products that have been protecting our customers against disasters since 1995. And I can tell you first hand that disasters DO happen, unexpectedly and in ways nobody anticipated; in all of the cases I have seen, SRDF worked as designed and protected our customer from severe data loss.

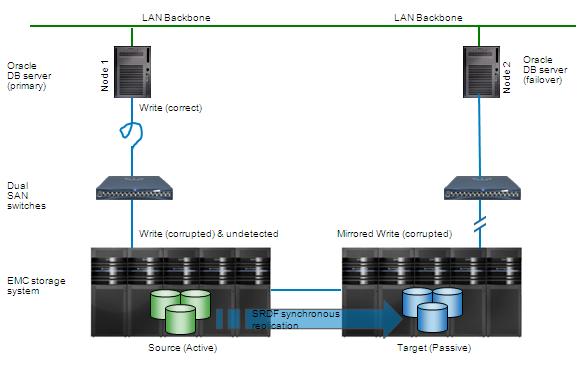

So here is a closer look at this statement. The reasoning goes as follows. Consider a primary site with Oracle Database A and EMC Symmetrix A. Symmetrix A replicates (mirrors) the data to failover site B, which has standby database server B and Symmetrix B. The data is in sync and everything is working nicely.

Now something goes wrong in the I/O stack (say, a flaky HBA or a half-broken cable) and a database write arrives at Symmetrix A malformed, with the wrong contents. Symmetrix A happily replicates the faulty data to site B and the corruption goes undetected (SRDF is a data mirroring tool, so by design it follows GIGO: garbage in, garbage out).

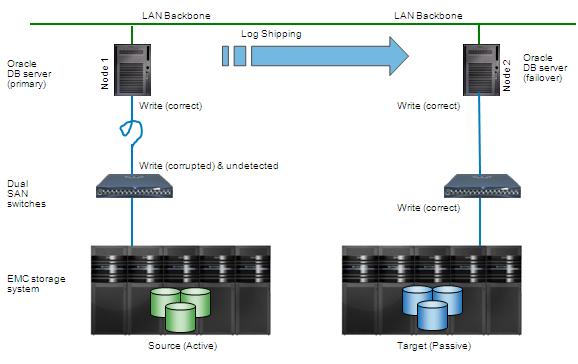

Compare this with a setup where Oracle Data Guard replicates from site A to site B by shipping redo and archived log files.

Because log shipping does not replicate the database writes themselves, the same corruption damages production database A but not failover database B.

Preliminary (but faulty) conclusion: Data Guard is better than SRDF because it protects you from block corruption.

Doh…

Any wrong information in that statement? No. It is factually correct. SRDF is not designed to correct or even detect logical corruptions caused elsewhere (for that you have a whole bunch of other tools and methods).

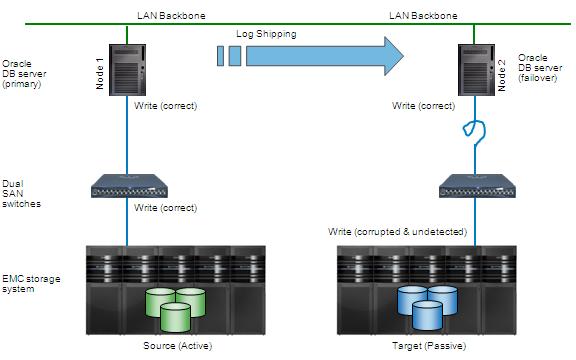

The problem is that the statement does not tell the full story. Back to the Data Guard scenario. Let’s assume for a moment that the corruption caused by a broken cable happens not on the primary but on the standby site. The (correct) database write goes to Symmetrix A, and Data Guard sends the (correct) redo to database B, where its database writer writes (initially correct) data that gets corrupted on its way to storage subsystem B (which can be any type of storage, for that matter).

How is Data Guard going to detect and prevent this corruption? As far as I can tell, it won’t. The corrupted block will sit there quietly until the database fails over (because of some outage or disaster at site A), and only then is the corruption detected: right when you have other issues to deal with (it’s a disaster, remember?). That can be three months later, when all the backups and archive logs needed to recover from the corruption may have expired and been overwritten (and they might have been stored at the wrong, now toasted, location anyway). It may be detected earlier if the standby database runs some form of database verification frequently, but even then it is not Data Guard that detects the corruption; it is a separately kicked-off verification process.

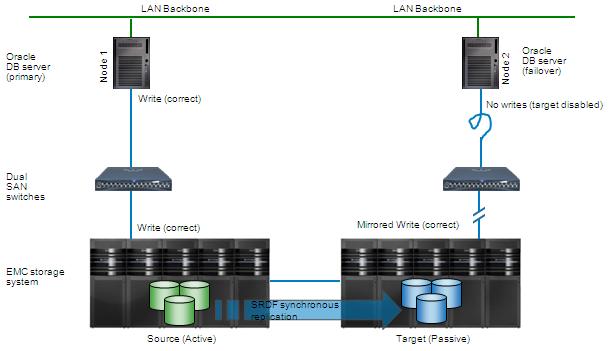

Consider the same scenario with EMC SRDF. Database A writes (correct) data to Symmetrix A, which uses SRDF to write the same (correct) data to Symmetrix B. As there is no active database writer at site B, this type of corruption cannot occur there (whereas it *was* possible with Data Guard).
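The two scenarios can be captured in a toy Python model. Everything here is hypothetical (the `Site` class, the `io_path` fault switch); it models a single block, ignores caching and timing, and encodes the argument above rather than either product’s internals: with SRDF only site A has a database writer and array B receives a block-level copy, while with Data Guard each site writes through its own I/O path and the shipped redo is assumed to arrive intact.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

GOOD = "good"
CORRUPT = "corrupt"


@dataclass
class Site:
    """One site's copy of a single database block (toy model)."""
    block: str = GOOD


def io_path(data: str, broken: bool) -> str:
    """Hypothetical host I/O path (HBA, cable): garbles the write when broken."""
    return CORRUPT if broken else data


def srdf(a: Site, b: Site, fault_at_a: bool, fault_at_b: bool) -> None:
    # Only site A runs a database writer. Whatever lands on array A is
    # mirrored block-for-block to array B (garbage in, garbage out).
    # fault_at_b is unused: no host writes to array B while SRDF mirrors.
    a.block = io_path(GOOD, fault_at_a)
    b.block = a.block


def data_guard(a: Site, b: Site, fault_at_a: bool, fault_at_b: bool) -> None:
    # Each site writes through its own I/O path; the redo shipped from A
    # is assumed intact and is re-applied by the standby's own writer.
    a.block = io_path(GOOD, fault_at_a)
    b.block = io_path(GOOD, fault_at_b)


def run(replicate: Callable[[Site, Site, bool, bool], None],
        fault_at_a: bool, fault_at_b: bool) -> Tuple[str, str]:
    """Return (block at site A, block at site B) after one replicated write."""
    a, b = Site(), Site()
    replicate(a, b, fault_at_a, fault_at_b)
    return a.block, b.block


for name, fn in (("SRDF", srdf), ("Data Guard", data_guard)):
    print(name, "fault at A:", run(fn, True, False), "fault at B:", run(fn, False, True))
```

With a fault at site A, SRDF ends with both copies corrupt while Data Guard keeps B clean; with a fault at site B the outcome flips, which is the half of the story the original claim leaves out.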

So my take on this? By using Data Guard you “protect” yourself (quotes intentional, and meant cynically) from one type of corruption, but you silently introduce the possibility of another.

I struggle to see that as an advantage over SRDF.


Hi Bart,

Great article, by the way! Let’s assume that both technologies have the same pros and cons. Your explanation doesn’t say how we can prevent such a scenario. Are you going to do a follow-up on this topic?

Suggested title: “How can you prevent or reduce database corruption, or keep its impact to a minimum?”

regards, AJ.

Hi AJ,

Thanks! I wasn’t planning a follow-up article, as I gave some hints about data integrity in an earlier article… But you have a point: there are situations where neither Data Guard nor SRDF (nor any other replication tool, for that matter) can prevent corruption. That said, at EMC we protect against corruption from the moment we receive a write through the front-end ports; from then on, every disk block is checksummed. But that does not prevent corruption higher up in the stack, and that’s where the weakness sits. So I accept your challenge and promise to write something on that. But first let me get some background info on what EMC engineering is up to in that area… 😉

AJ,

If the corruption occurs at a higher level, I’d address it there as well.

For Oracle, I recommend taking alternating RMAN backups from both sides. RMAN will detect corrupt blocks; then it’s the DBA’s duty to fix them.

Martin
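A minimal sketch of Martin’s suggestion, assuming a simple per-block CRC as a stand-in for RMAN’s block checks (RMAN’s real checks are more extensive). `make_block`, `verify` and `site_for_week` are hypothetical names. Alternating the run between sites means a silent corruption on the standby is found within one cycle rather than at failover time.

```python
import zlib
from typing import Dict, List, Tuple

Block = Tuple[bytes, int]  # (payload, checksum stored with the block)


def make_block(payload: bytes) -> Block:
    return payload, zlib.crc32(payload)


def verify(blocks: List[Block]) -> List[int]:
    """Read every block and return the indices whose checksum no longer matches."""
    return [i for i, (payload, crc) in enumerate(blocks) if zlib.crc32(payload) != crc]


def site_for_week(week: int) -> str:
    """Alternate the backup/validation run between the two sites."""
    return "primary" if week % 2 == 0 else "standby"


sites: Dict[str, List[Block]] = {
    "primary": [make_block(b"alpha"), make_block(b"beta")],
    "standby": [make_block(b"alpha"), make_block(b"beta")],
}
# Simulate a silent corruption on the standby: payload changes, stored checksum does not.
sites["standby"][1] = (b"bxta", sites["standby"][1][1])

for week in range(2):
    site = site_for_week(week)
    print(f"week {week}: validating {site}, corrupt blocks: {verify(sites[site])}")
```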

Hi,

It’s nice to see another insightful topic and debate after a long time… but there are some buts. When SRDF writes data from Symmetrix A to B, what are the chances of erroneous data being written because of intermittently failing hardware? I am not much of an Oracle supporter, but I do feel the chances of errors creeping in are the same in both cases. With DG, since it ships logs and the standby database then applies them, there is a good chance a corrupted log will be rejected. An alert will be triggered and the DBA can resend and reapply those logs.

Good spot… there is always a chance of data being corrupted in transit from Symm A to Symm B. But those corruptions are caught, because every disk block is tagged with a checksum when it is accepted through the front-end ports. Just as Oracle can detect corrupted redo as it is sent across the IP link, EMC SRDF applies similar techniques. For example, Symm B can reject the write and ask Symm A to retransmit. If a link keeps delivering corrupted data, SRDF can decide to drop the link and generate an error. Of course all data is still consistent (just no longer being updated until the issue is resolved).

Note that EMC best practice is to have at least two links for redundancy, so SRDF can drop one link and keep running on the other (hopefully with enough performance).
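A toy sketch of the retransmit-then-drop behaviour described above, assuming a CRC-32 tag computed when the write enters array A and re-checked at array B, with two links for redundancy. `Link`, `replicate` and `MAX_RETRIES` are hypothetical names and values, not SRDF’s actual protocol or configuration.

```python
import zlib
from typing import Callable, List, Optional, Tuple

MAX_RETRIES = 3  # hypothetical retry budget, not an actual SRDF parameter


class Link:
    """One replication link; `garble` simulates a flaky path (toy model)."""

    def __init__(self, name: str, garble: Callable[[bytes], bytes] = lambda d: d):
        self.name = name
        self.garble = garble
        self.up = True

    def transmit(self, payload: bytes, crc: int) -> Tuple[bytes, int]:
        # The checksum travels with the block; only the payload is damaged here.
        return self.garble(payload), crc


def replicate(payload: bytes, links: List[Link]) -> Optional[str]:
    """Send one block to the remote array over the first healthy link.

    Returns the name of the link that delivered it, or None when every link
    has been dropped (the remote copy stays consistent, just not updated).
    """
    crc = zlib.crc32(payload)  # tagged when the write enters array A
    for link in links:
        if not link.up:
            continue
        for _ in range(MAX_RETRIES):
            received, tag = link.transmit(payload, crc)
            if zlib.crc32(received) == tag:
                return link.name  # array B accepts the write
            # Mismatch: array B rejects the write and asks for a retransmit.
        link.up = False  # keeps failing: drop this link and report an error
        print(f"{link.name} dropped after {MAX_RETRIES} corrupted transfers")
    return None


flaky = Link("link-1", garble=lambda d: d[:-1] + b"?")
healthy = Link("link-2")
print(replicate(b"block-42", [flaky, healthy]))
```

The flaky link is dropped after three rejected transfers and the write completes over the second link; with only one link, `replicate` returns `None` and the remote copy simply stops being updated.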

Hi

I am a bit late commenting, but the topic seemed interesting, so here it is anyway.

I don’t think you are making an apples-to-apples comparison between SRDF and DG. The main reason is the confusion between corruption protection and corruption detection (one is proactive, the other reactive), and about what Data Guard is for. Oracle DG protects against the propagation of data corruption, not against corruption of all types and origins; that is just not possible without hardware assistance (PI/DIX etc.). So if corruption occurs on Symmetrix A, it will be propagated to Symmetrix B, and there is no easy way to validate the integrity of the arriving database blocks without suspending replication, mounting the volumes on a server and running DBVERIFY.

Going back to your example with DG and the broken cable: after enabling db_lost_write_protect on both primary and standby, there is a good chance of catching those silent corruptions. Provided the database has been validated at least once, DG should then be quite effective at detecting most future hardware corruptions. And finally, paraphrasing the Russian proverb: always “trust, but dbverify”.

http://docs.oracle.com/cd/E11882_01/server.112/e25513/initparams062.htm#REFRN10268
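A simplified sketch of the comparison that db_lost_write_protect enables, as I understand Oracle’s description of the parameter (linked above): the primary records the SCN of blocks it reads in the redo, and the standby compares that SCN with its own copy of the block during redo apply. The function name is hypothetical, and the mapping of the two mismatch cases to ORA-752 and ORA-600 [3020] is an assumption to verify against Oracle’s documentation.

```python
def check_block_read(primary_read_scn: int, standby_block_scn: int) -> str:
    """Compare the block SCN that a primary read logged in redo with the SCN
    of the same block on the standby when redo apply reaches that record.

    Simplified model; real detection happens inside managed recovery (MRP),
    and the error mapping below is an assumption, not verified behaviour.
    """
    if primary_read_scn < standby_block_scn:
        # The primary read an older version than the standby holds:
        # a write on the primary never reached disk.
        return "ORA-752: lost write on primary"
    if primary_read_scn > standby_block_scn:
        # The standby's copy is older than what the primary read:
        # a write on the standby was lost.
        return "ORA-600 [3020]: lost write on standby"
    return "ok"


print(check_block_read(1200, 1200))  # -> ok
print(check_block_read(1100, 1200))  # -> ORA-752: lost write on primary
print(check_block_read(1300, 1200))  # -> ORA-600 [3020]: lost write on standby
```

Note that the check only fires when the primary actually reads the affected block again; a lost write on a block that is never read stays invisible.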

Hi Tahir,

I’m comparing the two because customers who need DR replication have to choose between replication options; it does not typically make sense to have both. So comparing one replication tool (in my case, EMC SRDF) with another (Oracle Data Guard) makes perfect sense to me, even though they use different methods and have very different features, so it can never be apples-to-apples.

And as said, both might have advantages and disadvantages, but customers need to know the *full* story before making a wise decision.

The lost write is something that EMC prevents as well in the I/O stack using a feature called “EMC Generic Safewrite”. But extra protection in Oracle does not hurt 😉

But even with the feature enabled you can still have corrupted writes. A lost write happens when a large host I/O gets split somewhere in the stack and one of the two halves makes it to disk while the other does not. That’s different from a write getting garbled in transfer. Two different problems.
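To illustrate why these are two different problems, here is a toy classifier (all names hypothetical) for a block written as two halves, each carrying a version stamp and a checksum of its own payload. A garbled half fails its checksum; a split write where one half never lands passes every checksum and is only caught because the two halves carry different version stamps. This illustrates the distinction only; it is not how Generic Safewrite or Oracle actually implement their checks.

```python
import zlib
from typing import Tuple

Half = Tuple[int, bytes, int]  # (version stamp, payload, checksum of payload)


def make_half(version: int, payload: bytes) -> Half:
    return version, payload, zlib.crc32(payload)


def classify(head: Half, tail: Half) -> str:
    """Classify what ended up on disk for one block written as two halves."""
    (hv, hd, hc), (tv, td, tc) = head, tail
    if zlib.crc32(hd) != hc or zlib.crc32(td) != tc:
        return "garbled"  # contents changed in transfer: checksum catches it
    if hv != tv:
        # Each half is internally valid, but they come from different writes:
        # one half of the split I/O never made it.
        return "split write, one half lost"
    return "ok"


old = (make_half(1, b"AAAA"), make_half(1, b"BBBB"))
new = (make_half(2, b"CCCC"), make_half(2, b"DDDD"))

print(classify(*new))                             # -> ok
print(classify(new[0], old[1]))                   # -> split write, one half lost
print(classify((2, b"CCxC", new[0][2]), new[1]))  # -> garbled
```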

Regards,

Bart

Bart,

Just a few clarifications: Active Data Guard can automatically repair corrupted blocks.

Starting in Oracle Database 11g Release 2 (11.2), the primary database automatically attempts to repair the corrupted block in real time by fetching a good version of the same block from a physical standby database. This capability is referred to as automatic block repair, and it allows corrupt data blocks to be automatically repaired as soon as the corruption is detected. Automatic block repair reduces the amount of time that data is inaccessible due to block corruption. It also reduces block recovery time by using up-to-date good blocks in real-time, as opposed to retrieving blocks from disk or tape backups, or from Flashback logs.

HTH

Vincent
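A minimal sketch of the automatic block repair idea Vincent quotes, assuming blocks carry a checksum and the standby’s copy of the block is current (the real feature also has to account for how far the standby has applied redo). `Database` and `read_with_repair` are hypothetical names; note that the repair only happens when a read actually trips over the bad block.

```python
import zlib
from typing import Dict, Tuple


class Database:
    """Toy database: block id -> (payload, checksum stored with the block)."""

    def __init__(self, blocks: Dict[int, bytes]):
        self.blocks: Dict[int, Tuple[bytes, int]] = {
            bid: (data, zlib.crc32(data)) for bid, data in blocks.items()
        }

    def is_good(self, bid: int) -> bool:
        data, crc = self.blocks[bid]
        return zlib.crc32(data) == crc


def read_with_repair(primary: Database, standby: Database, bid: int) -> bytes:
    """Read a block on the primary; if its checksum fails, copy the standby's
    good version over it (assumes the standby copy is current)."""
    if not primary.is_good(bid):
        if not standby.is_good(bid):
            raise RuntimeError(f"block {bid} is corrupt on both sites")
        primary.blocks[bid] = standby.blocks[bid]  # repair from the standby
    return primary.blocks[bid][0]


primary = Database({7: b"order 1001"})
standby = Database({7: b"order 1001"})
primary.blocks[7] = (b"order 10?1", primary.blocks[7][1])  # silent corruption
print(read_with_repair(primary, standby, 7), primary.is_good(7))
```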

Hi Vincent,

Appreciate your thoughts on the matter!

Although that’s a great feature to have, it corresponds to a feature of EMC SRDF: serving reads from the remote system in case of (multiple) local disk failures. SRDF is implemented as remote storage “mirroring” rather than as a replication “copy”. If you have RAID-1 locally and replicate (either synchronously or asynchronously) to a remote system with RAID-1, then you effectively have 4 disks containing the same data (in the async case, the most recent writes are still in cache).

If you have a local disk failure, the RAID mirror will serve the I/O. In the unlikely event of a double disk failure with both failed disks in the same RAID volume, you would normally suffer some data loss. With SRDF, however, the local host reads will be served from the *remote* Symmetrix system. The local database host will not even notice (except that uncached reads for that specific volume will probably see somewhat higher latency).
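A toy model of that read path, assuming synchronous SRDF so that the remote copy is current (the async case, with recent writes still in cache, is not modelled). `Array` and `host_read` are hypothetical names.

```python
from typing import List, Optional, Tuple


class Array:
    """Toy storage array holding one RAID-1 volume (two mirrored disks)."""

    def __init__(self, name: str, data: bytes):
        self.name = name
        self.disks: List[Optional[bytes]] = [data, data]  # None marks a failed disk


def host_read(local: Array, remote: Array) -> Tuple[bytes, str]:
    """Serve a host read from the local mirror, else from the SRDF remote mirror."""
    for disk in local.disks:
        if disk is not None:
            return disk, local.name
    for disk in remote.disks:
        if disk is not None:
            return disk, remote.name  # transparent to the host, just slower
    raise RuntimeError("no surviving copy on either array")


symm_a = Array("Symmetrix A", b"payroll")
symm_b = Array("Symmetrix B", b"payroll")  # synchronous SRDF copy (assumed)

symm_a.disks[0] = None
print(host_read(symm_a, symm_b))  # -> (b'payroll', 'Symmetrix A')
symm_a.disks[1] = None            # double disk failure in the same RAID-1 volume
print(host_read(symm_a, symm_b))  # -> (b'payroll', 'Symmetrix B')
```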

Although again you cannot make an apples-to-apples comparison between Data Guard and SRDF, I still think of Data Guard’s remote repair feature as very similar to SRDF’s remote read ability, even though they serve slightly different failure situations.

However, it is still my opinion that corruptions, disk failures, and all other sorts of local data loss should be prevented or solved locally, and not (solely) depend on solutions that were designed for full disaster recovery rather than local data repair. The choice of a D/R tool should primarily be based on its ability to reliably and consistently fail over (and back!) a complete landscape of applications (i.e. multiple heterogeneous databases, applications and non-DB data sets such as plain filesystems) when a major disaster strikes; other features are, IMHO, just “nice to have”.

As for the “block corruption repair” feature of DG: as a systems admin, I would rather spend effort on preventing those corruptions (by choosing the right platforms and I/O load balancers, keeping drivers and firmware up to date, etc.) than on a tool that repairs them (FYI, I have another post on how EMC attempts to prevent block corruption).

The discussion reminds me of a radio advertorial on Dutch radio (it probably also aired in other countries) when Windows Vista came out. Microsoft marketed the new OS as having the option to “repair itself” if something fails.

Well, I don’t want that. I want a system that does not fail in the first place 🙂

Best regards and I would appreciate your thoughts…

As Hurricane Sandy swept through the NJ and NY areas, I called and texted friends who live and work there to see how they were doing. One happens to be an Oracle DBA manager for a large German bank (if you’re guessing which one, just translate “German bank” into German). He was doing well, and I asked about the Oracle databases he manages. His response was a casual, “Oh, we failed over a handful of main systems to xyz using SRDF, no problems.” We went on to talk about how his dogs were holding up.

Hi Bill 🙂

I know similar stories… A fire extinguisher exploded in a datacenter causing all systems to get full of microscopic dust, all servers went haywire, EMC Symmetrix dialed home and customer failed over with SRDF. Zero bytes lost for the entire production environment – Windows, UNIX, Linux, DB, file servers, Exchange, you name it… (some non-prod was not replicated and – if I’m not mistaken – had to be restored).

Very informative thanks.

Check out this link: Oracle and the HBA vendors are working on code within the HBA (metadata) that will become part of a data-stream checksum.

https://oss.oracle.com/projects/data-integrity/

Nice article.

You have a good point: what if the lost write happens on the standby, and then, when shit hits the fan during a DR, we are really toasted!

I’d address it like this: I could have an automated refresh of the standby every week. Also, if I/O happens again on the same block on the primary, MRP will know that the block has diverged; I am sure there will be an error in the standby alert log.

But I’d still go with DG over SRDF, for a straightforward reason.

The moment a lost write happens in my prod, I will know, because an ORA-752 is raised on the standby.

And I care more if the lost write is in prod, than if the lost-write happens in standby. And I want to know it IMMEDIATELY. Because it’s basic CYA, as a DBA 😉

Thanks

@JCnars:

I see your point 🙂

A few comments:

1) So you rely on a D/R tool (Data Guard) to protect against *local* data corruption. It’s like using a screwdriver to hammer in a nail. I would prefer to have DG (or any other DR tool) do what it’s supposed to do (protect all of your data against datacenter outages) and leave corruption protection to other tools, like the HBA vendors’ work with Oracle that Bob C. mentioned above.

2) If you have a lost write on the local side, how does the standby become aware of it? I don’t think it does. The claim Oracle people make is only that local corruptions such as lost writes are still possible, just that they don’t make it to the standby. Plus a few features that can repair local lost writes using data that was written correctly on the standby.

3) How would you do the automated refresh? A full sync? That’s not feasible for large datasets; plus, you need to shut down and restart the standby, and during the resync you are not DR-capable, right?

4) I can think of a few situations re corruption that DG will not protect you from at all (future blogpost maybe)…

All in all (and admittedly I might be biased, working for EMC), I regard Data Guard as a tactical DBA DR tool that replicates log data from *one* database. SRDF (or equivalent SAN replication tools from other vendors) protects *all* data (in a multi-app consistent way) regardless of OS, DB and host hardware, and offers a single point of control for everything; it is therefore a strategic (CxO-level) tool.

But from a DBA perspective, I can see the attractiveness of Data Guard (not being dependent on the storage guy, nice DG options to play with), and frankly, DG has some benefits over SRDF (quicker failover and no need to re-create remote snapshots frequently).

Thanks for your interesting comment,

Bart

Hi Bart, thanks for the response. Our shop has one primary with two local standbys and a third, DR standby.

1) If I have a Swiss Army knife, I want to use everything it provides.

2) http://www.oracle.com/technetwork/database/availability/lostwriteprotection-1867677.exe

3) We have OCFS2 mounted on both primary and the 3 standbys. Very simple scripting.

It may be just me, but precisely because DG is designed for (and tightly integrated with) the Oracle engine, whereas SRDF can also be used for, say, Microsoft Exchange, I would go with DG.

It’s my pleasure to come across your blog and point of view!

JCnars,

I like this discussion, good feedback!

1) Good point, if you decide to use a tool anyway then why not use all useful features

2) Will check it out sometime (today is last day of my short holiday, let’s see if I can give it a shot sometime next week)

3) I’m not sure I understand how you do this:

Let’s say you have an undetected corruption on the standby somewhere and you want to do periodic refreshes to overcome this (not really prevent but you’ll just overwrite the corruption if there is any).

So whatever tool or script you implement has to check all datablocks of all datafiles. Not sure how OCFS2 is going to do this?

I would expect the refresh goes something like this:

a) stop (shutdown) standby

b) overwrite standby from some source dataset (i.e. backup or remote rsync or something like that), i.e. this is almost like re-creating the standby DB from scratch

c) recover database, open in maintenance mode and restart Data Guard

d) apply (using DG or otherwise) all updated archive logs

e) re-enable Data Guard replication
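To put a rough number on the exposure window of steps a) to e), here is a back-of-the-envelope sketch. It assumes step b) copies the entire dataset at full link speed (decimal TB) and uses made-up example figures; `refresh_window_hours` is a hypothetical helper, not part of any tool.

```python
def refresh_window_hours(dataset_tb: float, link_gbit_s: float,
                         log_apply_hours: float, other_steps_hours: float) -> float:
    """Rough time the standby is not DR-capable during a full refresh (steps a-e).

    Assumes step b copies the whole dataset at full link speed (decimal TB).
    """
    copy_seconds = dataset_tb * 8e12 / (link_gbit_s * 1e9)  # TB -> bits; Gbit/s -> bit/s
    return copy_seconds / 3600 + log_apply_hours + other_steps_hours


# Illustrative numbers only: 10 TB over a 1 Gbit/s link, 2 h of log apply,
# 1 h for shutdown, recovery and restart.
print(round(refresh_window_hours(10, 1, 2, 1), 1))  # -> 25.2
```

With these illustrative inputs the copy alone takes about 22 hours, so the standby could not take over for roughly a day per refresh, and the copy term grows linearly with the dataset size.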

Especially at the remote site, this procedure can take some time… during which you’re not protected?

If you have a smarter way I’d like to know 🙂

(but remember the rules: you need to check every disk block somehow for corruption during the refresh)

Hi Bart and jcnars

I would like to point out that in 11gR2 with Active Data Guard, Oracle will monitor and correct any lost writes on both the primary and standby databases. I verified this on page 11 of the following PDF.

http://www.oracle.com/technetwork/database/availability/maa-datacorruption-bestpractices-396464.pdf

Hi Isingh,

The document also says it will repair blocks as soon as the corruption is detected. That means you have to detect it first. It’s not specified under what conditions this does or does not happen. Also, “lost writes” is only one root cause for data corruption. There are many more reasons why things can go wrong.

Note that EMC has provided lost write protection for a long time. If lost writes cannot happen, you don’t have to fix them afterwards either 😉