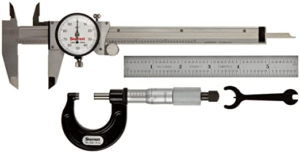

In a previous post, I introduced CRIPS – a measurement of the processing power of a CPU (core) based on SPEC CPU Integer Rate.

The higher the CRIPS rating, the better a processor is in terms of performance per physical core. Also, the higher the number, the better the ROI for database infrastructure (in particular, license cost) and the better the single-thread performance.

The method can also be applied to server sizing: if we know how much “SPECInt” (short for “SPEC CPU 2017 Rate Integer Baseline”) a workload consumes, we can divide it by the CRIPS rating of a given processor to find the minimum number of cores needed to run the workload with at least the same performance.
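As a minimal sketch of that sizing arithmetic: the function name `min_cores` and the numbers in the example are hypothetical, chosen only for illustration and not taken from any benchmark result. It divides the SPECInt a workload consumes by the CRIPS rating of the target processor and rounds up, since a fraction of a core cannot be provisioned.

```python
import math


def min_cores(workload_specint: float, crips_rating: float) -> int:
    """Return the minimum number of physical cores needed for a workload.

    workload_specint: SPECInt consumed by the workload (hypothetical input).
    crips_rating:     CRIPS rating (SPECInt per core) of the target processor.
    """
    if workload_specint < 0:
        raise ValueError("workload_specint must not be negative")
    if crips_rating <= 0:
        raise ValueError("crips_rating must be positive")
    # Round up: a partial core still requires a whole core.
    return math.ceil(workload_specint / crips_rating)


if __name__ == "__main__":
    # Illustrative values only: a workload consuming 120 SPECInt
    # on a processor rated at 9.5 CRIPS.
    print(min_cores(120, 9.5))
```

With these illustrative inputs the division gives roughly 12.6, so the sketch rounds up to 13 cores.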

One problem remains:

How do we measure the amount of SPECInt a workload consumes?

Typical OS tools such as sar (sysstat), nmon, and top do not give us this number; they only report the percentage of time the CPU is busy.

In this blog post I will present a method to calculate actual performance from standard OS metrics (CPU busy percentage).

If you need to generate lots of I/O on an Oracle database, the de-facto I/O generator tool is

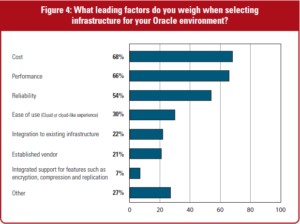

In the previous post, we discussed the current state of many existing Oracle environments. Now we need a method to accurately measure or define the workload characteristics of a given database platform.

In the previous post, I announced