Does this story sound familiar?

The end users of a database application start complaining about poor system response and long running batch jobs. The DBA team starts investigating the problem. DBA’s look at their database tools such as Enterprise Manager, Automatic Workload Repository (AWR) reports, etc. They find that storage I/O response times are too high (such as an average of 50 milliseconds or more) and involve the storage team to resolve it.

The storage guys, in turn, look at their tooling – in case of EMC this could be Navisphere Analyzer, Symmetrix Performance Analyzer (SPA) or similar tools. They find completely normal response times – less than 10 milliseconds average.

The users still complain but the storage and database administrators point to each other to resolve the problem. There is no real progress in solving the problem though.

So what could be the issue here?

Performance troubleshooting is a complex task and really there could be all kinds of reasons why this situation happens. But in my experience with customers, sometimes the first step is a real easy one: finding out where the real bottleneck is.

In a lab test I have done a while ago, I collected some performance statistics using the standard Linux tools, here I will show how you can use it to tell the difference between I/O wait on the host (Linux) system versus waits on the storage system.

First of all, the command to show IO statistics on Linux is “iostat”. To get more details on response times, queue depths etc, use

iostat -xk <interval> <disks>

So if you want to get stats for disks /dev/sdb and /dev/sdc with an interval of 2 seconds, use:

iostat -xk 2 /dev/sd[bc]

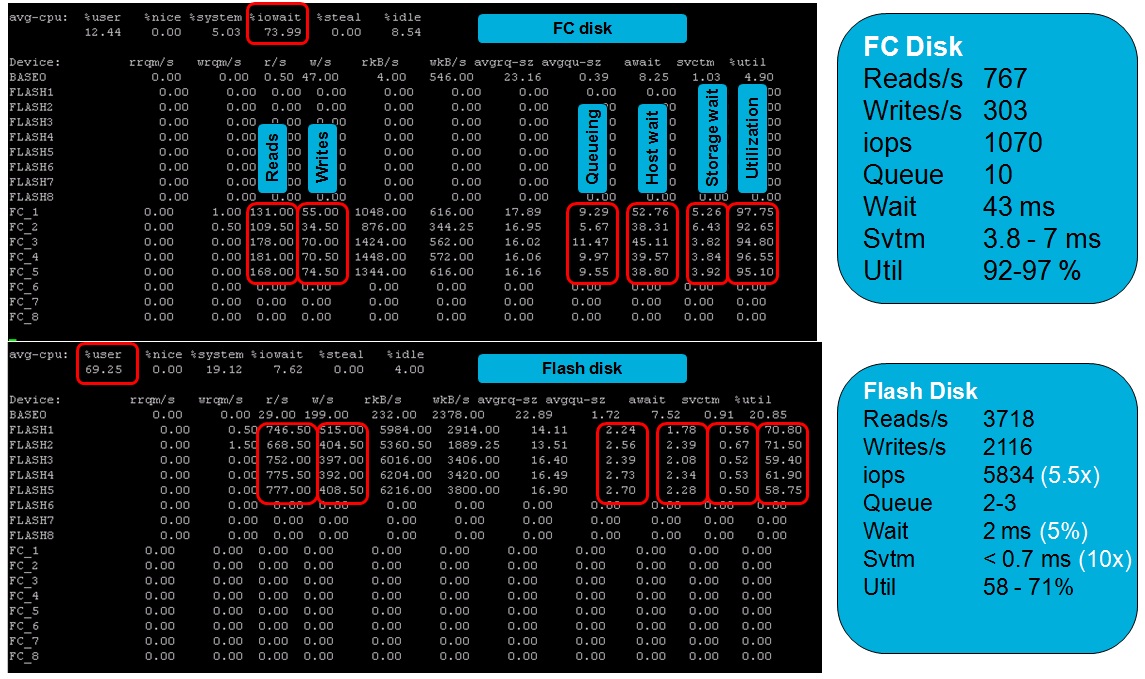

In this picture with screenshots you can see the output of an Oracle database server running a Swingbench benchmark test:

Note that I used a custom script to alter the Linux device names so that they show the Oracle ASM Volume names instead of the Linux device names – to make it more usable for the lab test I was setting up.

Note that I used a custom script to alter the Linux device names so that they show the Oracle ASM Volume names instead of the Linux device names – to make it more usable for the lab test I was setting up.

If you look at the upper half of the picture you can see activity on the Fibre Channel disks (LUNs) FC_1 through FC_5 (this is what made up the Oracle ASM diskgroup for this database). The system was IO bound (you can see almost 74% IO wait). Now look at the columns “avgqu-sz” (Average queue size), “await” (average wait in the queue) and “svctm” (service time).

In the lower half you can see what happened after moving the database to Enterprise Flash disks, but more on this in a future post

Service time (storage wait) is the time it takes to actually send the I/O request to the storage and get an answer back – this is the time the storage system (EMC in this case) needs to handle the I/O. It varies between 3.8 and 7 ms on average.

Average Wait (host wait) is the time the I/O’s wait in the host I/O queue.

Average Queue Size is the average amount of I/O’s waiting in the queue.

A very simple example: Assume a storage system handles each I/O in exactly 10 milliseconds and there are always 10 I/O’s in the host queue. Then the Average Wait will be 10 x 10 = 100 milliseconds.

In this specific test you can verify that storage wait * queue size ≈ host wait

A database operating system process will see the Average Wait and not the service time. So Oracle AWR, for example, will report the higher number and storage performance tools will see the lower number. There is a big difference and I’ve experienced miscommunication on this between database, server and storage administrators on several occasions.

Make sure you talk about the same statistics when discussing performance problems!

![]()

Comments are closed.