Some customers ask us – not surprisingly – how they can reduce the total cost of ownership of their information infrastructure even more. In response, I sometimes ask them what the utilization of their storage systems is.

Their answer is often something like 70%: of course you need some spare capacity for sudden application growth, so getting close to 100% is probably not a good idea.

If you really measure the utilization you often find other numbers. And I don’t mean the overhead of RAID, replication, spare drives, backup copies etc. because I consider these as required technology – invisible from the applications but needed for protection and so on. So the question is – of each net gigabyte of storage, how much is actually used by all applications?

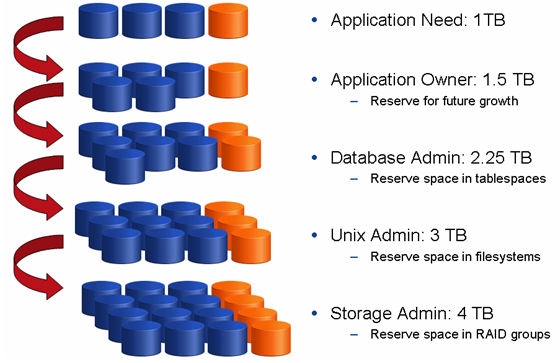

Overallocating storage

Assume a project manager hears from his application vendor that his new ERP application will consume 1 Terabyte of application storage in the first 3 years in production. He will typically ask the database administrator for a bit more, say 50% extra, just in case the application grows faster than expected. Project managers don’t like to ask management to approve extra spending after their project has finished, so if he gets the extra capacity today he will not be bothered later.

So before the project even starts we are already down to at least 1 Terabyte required / 1.5 Terabyte requested = 67% utilization (best case).

The DBA really does not like to get paged in the middle of the night when a database stops due to a full tablespace. So if he gets a request for a certain database size, he will typically make tablespaces a bit bigger initially and make sure that when the database grows, he will extend them long before they run out of capacity. Let’s assume the DBA oversizes by another 50% and asks his Unix administrator for 2.25 Terabyte capacity.

We’re now down to 1 Terabyte required / 2.25 Terabyte requested = 44% utilization.

The Unix admin was advised by his leading Unix vendor that most filesystems need at least 20% free capacity to avoid fragmentation and performance problems. But just to be sure, let’s assume he adds roughly another 30% and asks his storage administrator for 3 Terabyte.

You get the idea – we’re now down to 33% utilization.

In turn, the storage guy likes to keep spare capacity in the storage box, because he also wants to sleep instead of getting a phone call in the middle of the night to configure extra capacity for the most mission-critical application, only to find out that he has run out of free capacity in the storage box and needs to order extra disks (which is hard to do at midnight when your local EMC sales rep is snoring... 😉).

So storage admins keep pools of unused disk space, not per application but for a whole application landscape. Let’s say this is another terabyte per 3 terabyte allocated to applications.

We are eventually down to 25% utilization. Which means the organization purchases 4 Terabyte of usable capacity and only uses one.
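The arithmetic of this chain can be sketched in a few lines of Python. This is only an illustration of the numbers used above, not a sizing tool: the names `LAYERS` and `utilization_cascade` are made up for this example, and the Unix admin's factor is set to 4/3 so that it reproduces the rounded 3 Terabyte from the text.

```python
from fractions import Fraction

REQUIRED_TB = Fraction(1)  # what the application really needs

# Each layer multiplies the request it receives by its own safety margin.
LAYERS = [
    ("project manager", Fraction(3, 2)),  # +50%
    ("DBA", Fraction(3, 2)),              # +50%
    ("Unix admin", Fraction(4, 3)),       # roughly +30%, rounded up to 3 TB
    ("storage admin", Fraction(4, 3)),    # 1 TB spare per 3 TB allocated
]

def utilization_cascade(required, layers):
    """Return (layer, requested_tb, utilization) after each layer has added its margin."""
    rows = []
    requested = required
    for name, factor in layers:
        requested *= factor
        rows.append((name, requested, required / requested))
    return rows

for name, requested, util in utilization_cascade(REQUIRED_TB, LAYERS):
    print(f"{name:16s} {float(requested):5.2f} TB  {float(util):4.0%}")
```

Because every layer multiplies rather than adds, four fairly modest margins are enough to turn 1 Terabyte of real demand into 4 Terabyte of purchased capacity.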

A welcome but unintended, and sometimes even unnoticed, side effect is that because the disks are not fully used, there are more spindles to satisfy the performance requirements, so the chance of suffering from disk I/O bottlenecks is significantly lower. But as I said in earlier posts, I have serious objections to throwing more spinning rust at potential performance issues.

Thin Provisioning

Thin provisioning (also known as Virtual Provisioning), and in particular overprovisioning, is the art of providing applications with more reported capacity than is actually available. Instead of a one-to-one relationship between each logical block on disk and a specific application, there is a shared pool of storage that gets used as applications actually claim capacity. Every time the application (or server) writes to an area that was never written before (and is therefore not really provisioned yet), the storage system claims a new chunk somewhere in the pool and maps it to the application. As the application grows it slowly consumes more and more chunks until it has claimed all the logical capacity that was provided. Because applications do not claim capacity sequentially but sometimes in a somewhat random fashion, the chunk size is important and should be kept as small as possible, without adding too much performance overhead for managing the metadata.
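To make the allocate-on-first-write idea concrete, here is a toy model in Python. It is a sketch under simplifying assumptions (an in-memory dictionary as the mapping table, no data stored, no reclamation), and the class and method names are invented for this example; real arrays implement this in firmware with far more sophistication.

```python
class ThinPool:
    """Toy thin-provisioned pool: physical chunks are claimed on first write only."""

    def __init__(self, physical_chunks, chunk_size):
        self.chunk_size = chunk_size
        self.free_chunks = physical_chunks
        self.logical_size = {}  # volume name -> reported size in bytes
        self.mapping = {}       # (volume, logical chunk index) -> physical chunk id
        self._next_id = 0

    def create_volume(self, name, logical_bytes):
        # Reporting capacity costs nothing: no physical chunk is claimed yet.
        self.logical_size[name] = logical_bytes

    def write(self, volume, offset, length):
        if length <= 0:
            raise ValueError("length must be positive")
        if offset + length > self.logical_size[volume]:
            raise ValueError("write beyond the logical size of the volume")
        first = offset // self.chunk_size
        last = (offset + length - 1) // self.chunk_size
        for index in range(first, last + 1):
            key = (volume, index)
            if key in self.mapping:
                continue  # this area was written before and is already backed
            if self.free_chunks == 0:
                raise RuntimeError("pool exhausted")
            self.mapping[key] = self._next_id
            self._next_id += 1
            self.free_chunks -= 1

    def used_bytes(self, volume):
        chunks = sum(1 for name, _ in self.mapping if name == volume)
        return chunks * self.chunk_size


pool = ThinPool(physical_chunks=10, chunk_size=1024)
pool.create_volume("erp", logical_bytes=100 * 1024)  # 100 chunks reported, 10 exist
pool.write("erp", offset=0, length=10)               # claims one chunk
pool.write("erp", offset=50 * 1024, length=2048)     # claims two more, far away
print(pool.used_bytes("erp"), pool.free_chunks)
```

The volume reports ten times more capacity than the pool physically holds, and only the chunks that were actually touched consume space. Note that the first write of 10 bytes still costs a full chunk.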

Large chunk sizes will result in too much capacity being claimed without all chunks being fully utilized (for example, a few 8 kilobyte blocks formatted by a filesystem in an environment with 64 megabyte chunks will each claim an entire 64 megabyte chunk, which is not very efficient). Chunk sizes that are too small will cause overhead in managing metadata. Making thin provisioning perform well with chunks that are not too large is another reason why modern storage systems need a lot of CPU power and large, fast internal memory. Ask your storage vendor what granularity is offered for virtual provisioning, as this is an important factor in estimating the efficiency. As a rule: smaller is better (unless it causes performance degradation).
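A quick back-of-the-envelope calculation shows how strongly the chunk size affects efficiency. The write pattern below (ten 8 KiB blocks, one every gigabyte) is a made-up example, the function name is hypothetical, and I use binary units for convenience.

```python
KIB = 1024
MIB = 1024 * KIB

def claimed_bytes(write_offsets, write_size, chunk_size):
    """Physical capacity claimed when every touched chunk is allocated as a whole."""
    touched = set()
    for offset in write_offsets:
        first = offset // chunk_size
        last = (offset + write_size - 1) // chunk_size
        touched.update(range(first, last + 1))
    return len(touched) * chunk_size

# Hypothetical pattern: ten 8 KiB filesystem blocks, one at the start of every GiB.
offsets = [i * 1024 * MIB for i in range(10)]
written = len(offsets) * 8 * KIB

for chunk in (64 * MIB, 1 * MIB, 64 * KIB, 8 * KIB):
    claimed = claimed_bytes(offsets, 8 * KIB, chunk)
    print(f"chunk {chunk // KIB:6d} KiB: claimed {claimed // KIB:7d} KiB, "
          f"efficiency {written / claimed:.2%}")
```

With 64 MiB chunks the ten small writes claim 640 MiB of physical capacity; with 8 KiB chunks they claim exactly what was written. What this sketch does not show is the other side of the trade-off: the number of mapping entries the storage system has to manage grows as the chunks get smaller.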

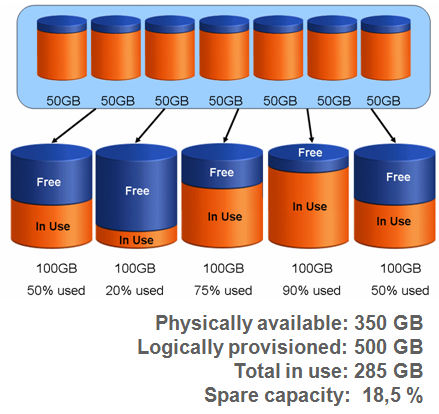

For example, take a shared storage environment with (for the sake of explanation) 5 similar applications that each actually use between 20 and 90 Gigabyte of storage, totalling (in this example) 285 Gigabyte.

But because administrators tend to cover themselves against sudden out-of-storage issues (as explained), we assume each application gets more than it needs, so we logically allocate 100 GB to each application. Now if we use thin provisioning and we assume an unallocated pool of about 20%, we could do with 350 GB instead of 500 GB. Each application still thinks 100 GB is available, so there is an oversubscription of capacity.

“A compromise is the art of dividing a cake in such a way that everyone believes he has the biggest piece” – Ludwig Erhard – German economist & politician (1897 – 1977)

If one application suddenly starts claiming the full 100 GB capacity, the free pool would typically have enough capacity to fulfill the need (because we have 65 GB spare capacity and the application will probably claim less than this).

(By the way, if you inspect my example really closely, you will see that one of the five applications could cause serious problems: the one that uses only 20 GB could claim another 80 GB, which is more than the 65 GB spare. This just illustrates that the reserved space in my example is too small.)

The application cannot claim more than the 100 GB logically provided. If the application still grows, we have to manually provision more actual or thin provisioned (thus: virtual) storage. It would be a good idea to add capacity to the free pool ASAP, because we would really be in trouble if another application also suddenly claimed all its virtual capacity.
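The five-application example can be checked with a small script. Note that the per-application numbers below are assumptions: the text only gives the range (20 to 90 GB) and the 285 GB total, so I picked values that match both. The function name is made up for this sketch.

```python
LOGICAL_GB = 100  # what each application believes it has
POOL_GB = 350     # what is physically in the pool

# Assumed per-application usage; only the 20..90 GB range and the 285 GB total
# come from the example in the text.
used_gb = {"app1": 20, "app2": 45, "app3": 60, "app4": 70, "app5": 90}

total_used = sum(used_gb.values())
spare = POOL_GB - total_used

def apps_exceeding_spare(used, logical, spare_gb):
    """Applications whose remaining logical capacity is larger than the free pool."""
    return [name for name, gb in used.items() if logical - gb > spare_gb]

print(f"used {total_used} GB, spare {spare} GB")
print(f"oversubscription {LOGICAL_GB * len(used_gb) / POOL_GB:.2f}x")
print("could exhaust the pool alone:", apps_exceeding_spare(used_gb, LOGICAL_GB, spare))
```

With these assumed values only the smallest application is a risk: it has the most room left to grow, so it is the one that can outrun the free pool on its own.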

Of course, the more applications, the less likely we are to run into problems. For example, let’s assume 100 similar applications requiring 10 TB of actual capacity (100 GB each) plus 30% spare = 13 TB of total (physically usable) capacity, where we provision each application with 300 GB logically available. The total sum of (virtual) provisioned capacity is then 30 TB, while we only have 13 TB, so we’re more than 100% overprovisioned! Now we can happily sleep at night even if 10 applications suddenly claim all their provisioned storage: we would need 10 x (300 provisioned - 100 already in use) GB = 2 Terabyte, and the free pool is 3 Terabyte, so no problem.
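The same numbers can be turned into a small headroom check that tells you how many applications could claim their full logical size before the free pool is exhausted. This is a rough sketch with invented function names, using 1 TB = 1000 GB as in the example and assuming all applications are identical.

```python
def pool_summary(apps, used_gb_each, logical_gb_each, spare_percent):
    """Capacity figures for a pool of identical thin-provisioned applications."""
    used = apps * used_gb_each
    physical = used * (100 + spare_percent) // 100
    logical = apps * logical_gb_each
    return {
        "used_gb": used,
        "physical_gb": physical,
        "logical_gb": logical,
        "free_gb": physical - used,
        "oversubscription": logical / physical,
    }

def runaway_apps_tolerated(summary, used_gb_each, logical_gb_each):
    """How many applications can fill their logical size before the pool is empty."""
    growth_per_app = logical_gb_each - used_gb_each
    return summary["free_gb"] // growth_per_app

summary = pool_summary(apps=100, used_gb_each=100, logical_gb_each=300, spare_percent=30)
print(summary)
print("runaway applications tolerated:", runaway_apps_tolerated(summary, 100, 300))
```

The free pool of 3000 GB absorbs a fixed number of runaway applications regardless of how many applications share it, which is why a larger landscape makes the scenario where the pool runs dry less likely.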

So: large, consolidated storage systems benefit from a statistical advantage.

Besides, a side effect of thin provisioning is that it distributes all data from all applications evenly across all disks in the disk pool: it removes hot spots.

The chance of suffering from performance problems is therefore reduced (some customers actually claim not to have seen any disk-related performance issues for a long time!). So thin (virtual) provisioning not only over-provides capacity, but also over-provides performance!

And admins who do their homework during the day will no longer get calls at night just because a runaway application suddenly runs out of capacity (as long as you make sure there is enough spare capacity available in the pool for all applications).

Currently there are some gotchas with this strategy, mostly having to do with applications deleting data (e.g. a user deleting lots of documents from a file share) or doing problematic stuff (such as periodic file system defragmentation). As the data is not actually deleted (the file structures are still on disk), the capacity cannot easily be handed back to the free pool. Technologies are becoming available to deal with such issues. Look for tools labeled as “zero space reclamation utilities” (there is one for Oracle ASM, for example, to reclaim unused ASM blocks after disk rebalancing). Or just write large files containing nothing but zeroes to the file system: the storage will see large zeroes-only areas and will reclaim this capacity.
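The "write zeroes" trick could look something like the sketch below. Treat it as an illustration only: the function name and the block size are my own choices, whether zeroed areas are actually reclaimed depends on your storage system, and filling a production file system with zeroes needs care (leave some free space and delete the file afterwards).

```python
import os

def write_zero_file(path, total_bytes, block_bytes=1024 * 1024):
    """Write total_bytes of real zeroes to path and return the resulting file size.

    The zeroes are written explicitly instead of seeking past them; a sparse
    file would not overwrite the old blocks on disk.
    """
    block = bytes(block_bytes)  # a buffer filled with zero bytes
    remaining = total_bytes
    with open(path, "wb") as handle:
        while remaining > 0:
            piece = block if remaining >= block_bytes else block[:remaining]
            handle.write(piece)
            remaining -= len(piece)
        handle.flush()
        os.fsync(handle.fileno())  # make sure the zeroes reach the storage
    return os.path.getsize(path)
```

A typical use would be to call this for most of the free space in a file system after a large cleanup, let the storage system do its reclamation, and then remove the zero-filled file again.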

Bringing it all together, you can imagine a storage system that offers everything described: a thin provisioned pool constructed from Enterprise Flash drives as well as classic Fibre Channel and low-cost, large capacity SATA drives; with an automated load distributor that detects hot spots and moves the corresponding blocks to flash, and the hardly used blocks to SATA, and back if necessary (a technology that actually works like this is EMC FAST-VP, more on this in a future post).

Research is also being done on online de-duplication (i.e. finding multiple pieces of disk that contain exactly the same data), which should drive down capacity requirements even more (but I’m a bit skeptical that this will work well with relational databases; plain file serving would be a much better sweet spot).

Who said data storage had become a commodity?
