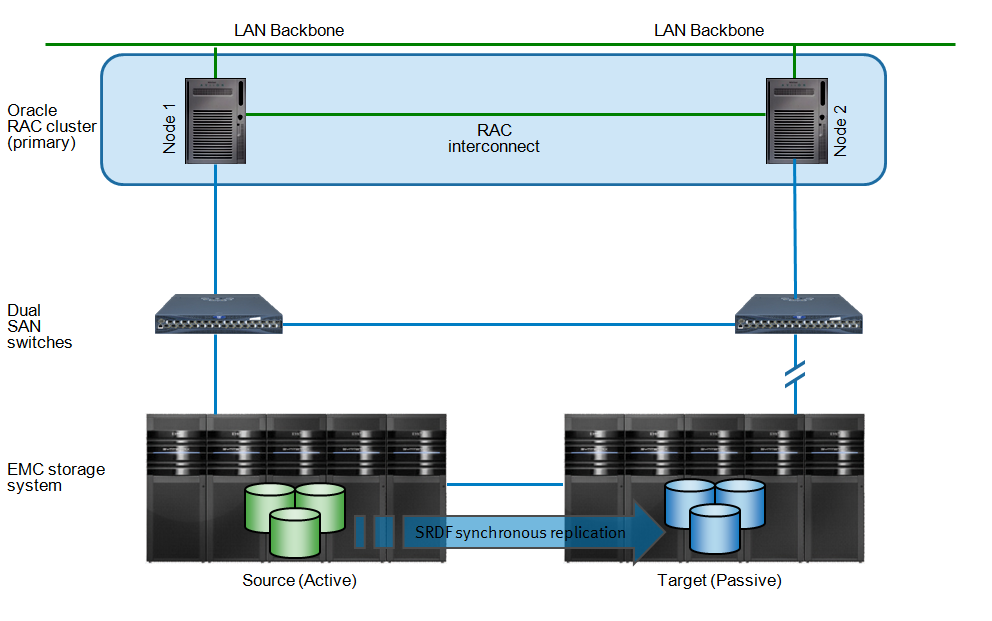

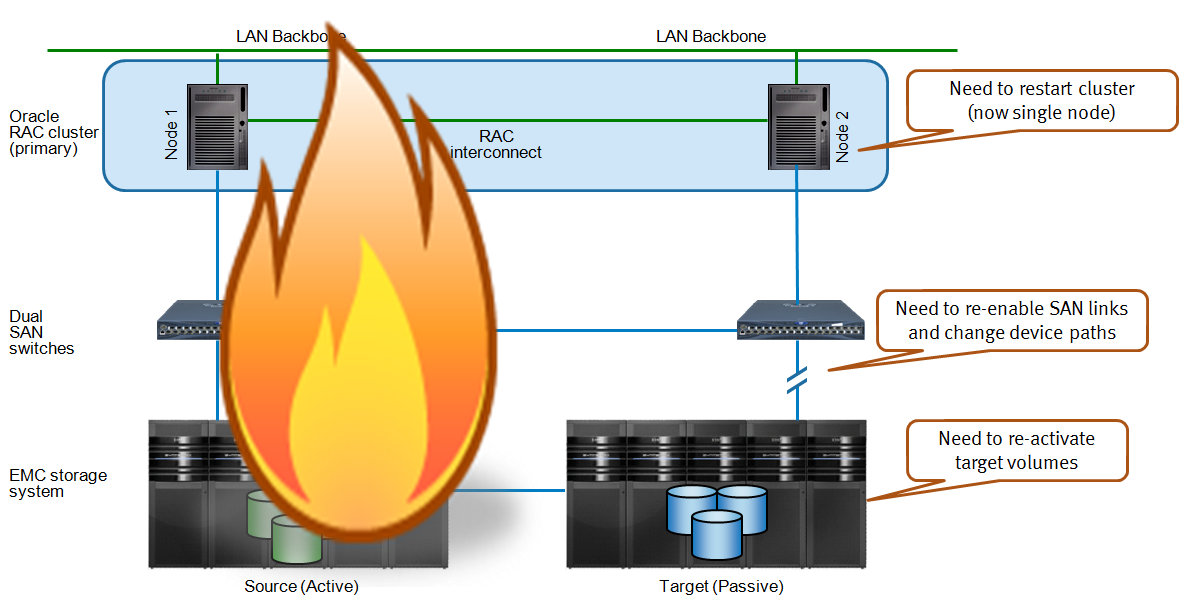

For data mirroring, EMC SRDF is sometimes used in a setup where both servers write to one location only (the “far” server writes across dark fibre links to the local storage). EMC has similar tools (MirrorView, RecoverPoint, etc.) for storage platforms other than Symmetrix.

As mentioned, this has the disadvantage that if the active storage system (or the whole site) goes down, the cluster still goes down and has to be restarted manually from the failover site.

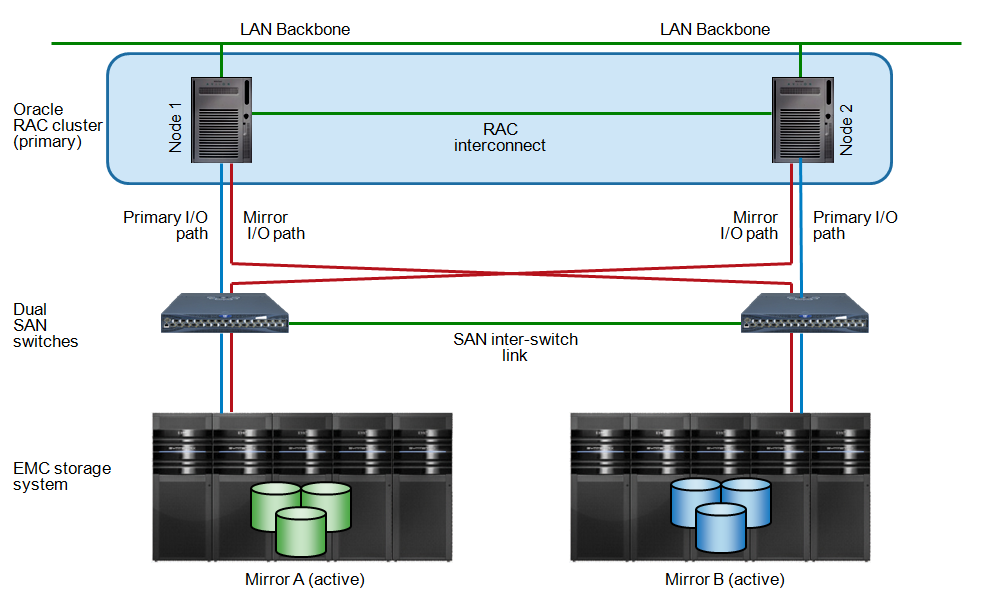

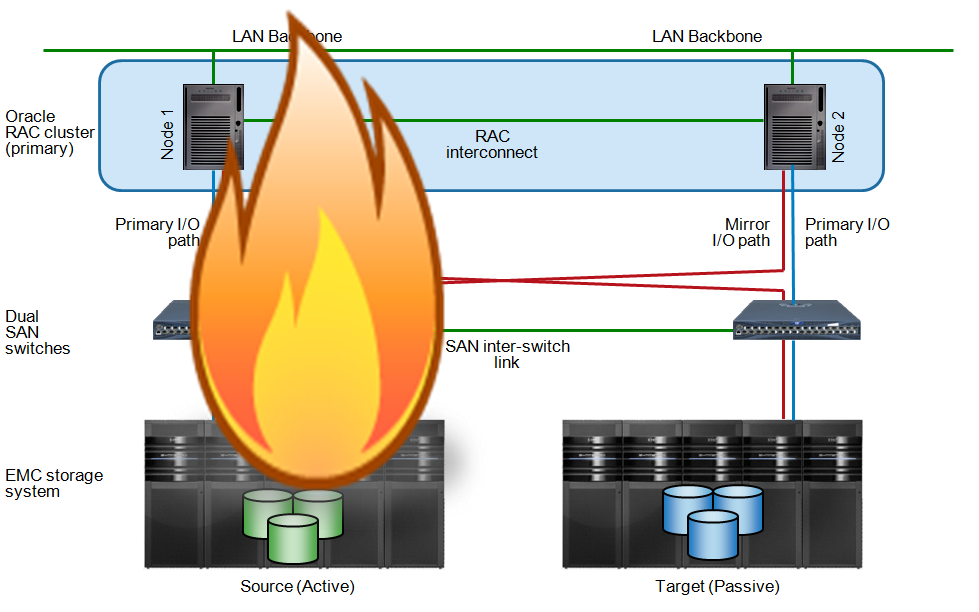

Host Based Mirroring

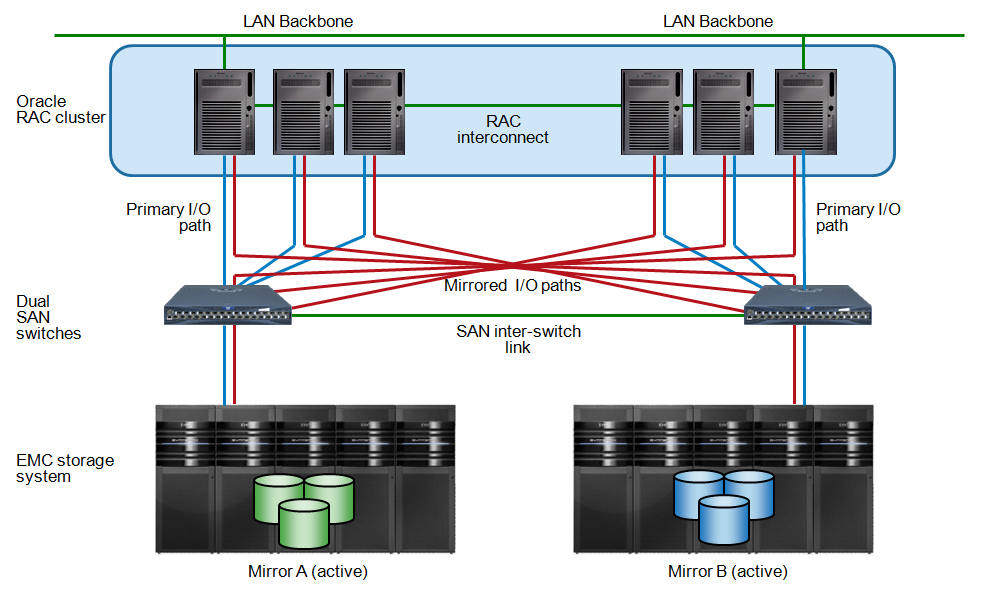

A seemingly attractive alternative exists for replicating storage across multiple datacenters: host based mirroring. The concept is simple: you use a component already available in most Unix operating systems, a volume manager, to create mirrored volumes, and then make sure that the mirrored copies physically reside in a different storage system, in a different physical location. Because all server nodes in an Oracle RAC cluster read and write the same data set, the volume mirroring has to be “cluster aware”, and the same goes for the filesystems (if used).

Because Oracle Automatic Storage Management (ASM) fulfills all of these requirements, many customers choose to move away from Unix style volume managers and use Oracle ASM instead. ASM is then configured in “normal redundancy” mode, which means that each ASM extent is mirrored across two different failure groups (and, as explained, the disks of each failure group sit in a different storage box and physical location). As ASM is free to use for Oracle customers, it seemingly makes sense to use ASM as a disaster recovery replication tool, thereby replacing storage based mirroring tools such as EMC SRDF, MirrorView, or RecoverPoint.
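To make the normal redundancy idea concrete, here is a toy model under the assumption of exactly two failure groups, one per site. It places the two copies of each extent in different failure groups and checks which extents survive a site failure. ASM’s real allocation algorithm is more involved; the failure group names and the round-robin placement are hypothetical.

```python
# Toy model of two-way ("normal redundancy") mirroring across failure groups.
# Failure group names and the round-robin placement are hypothetical.
FAILURE_GROUPS = {"FG_SITE_A": "site A", "FG_SITE_B": "site B"}


def place_extents(num_extents, failure_groups):
    """Give every extent a primary and a mirror copy in two different failure groups."""
    groups = list(failure_groups)
    placement = {}
    for extent in range(num_extents):
        primary = groups[extent % len(groups)]
        mirror = groups[(extent + 1) % len(groups)]
        placement[extent] = (primary, mirror)
    return placement


def surviving_extents(placement, failure_groups, failed_site):
    """Extents that still have at least one readable copy after a site failure."""
    return [
        extent
        for extent, copies in placement.items()
        if any(failure_groups[group] != failed_site for group in copies)
    ]


placement = place_extents(8, FAILURE_GROUPS)
print(len(surviving_extents(placement, FAILURE_GROUPS, "site A")))  # 8
```

The model only holds if each failure group really maps to one site; if disks from the same array ended up in both groups, some extents would have both copies in one location and would be lost with it.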

The idea is that if a site fails, the cluster keeps running without any recovery step, because storage at both locations was active anyway.

I also spoke to some customers who prefer not to use ASM and rely on a Unix (or Linux) based volume manager and filesystem (as said, both have to be cluster-aware).

Although host based mirroring can be made to work, there are some caveats.

License cost

For some volume managers or filesystems a license fee is required. As Oracle ASM is free and less complex to set up, this is what most users opt for, but exceptions exist: some customers just don’t like ASM for one reason or another and choose a different method.

CPU overhead

Oracle database licenses are expensive and often based on the number of CPU cores in a server. The cost of the database licenses typically outweighs the cost of the server, storage, operating system and other infrastructure components. Therefore, using CPUs that carry Oracle licensing for anything other than pure database processing is, in my opinion, a terrible waste of money.

The CPU overhead for mirroring all write I/Os across locations is typically 1-2%. That may not sound like much in percentage terms, but given the high license cost you might still wonder whether it is a good idea to spend licensed CPU cycles on data mirroring.
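A quick way to put that percentage in perspective is to translate it into license spend. The sketch below assumes a hypothetical server with 32 licensed cores at a hypothetical price of 10,000 per core, and ignores processor core factors and discounts.

```python
# Rough license cost of the CPU cycles spent on host-based mirroring.
# The core count and per-core price below are hypothetical placeholders.


def license_cost_of_mirroring(licensed_cores, price_per_core, overhead_fraction):
    """License spend effectively consumed by mirroring overhead."""
    return licensed_cores * price_per_core * overhead_fraction


for overhead in (0.01, 0.02):
    cost = license_cost_of_mirroring(32, 10_000, overhead)
    print(f"{overhead:.0%} overhead -> {cost:,.0f} of license spend")
```

With these made-up numbers, 1-2% overhead corresponds to 3,200 to 6,400 of license value per server, multiplied by every node in every mirrored cluster.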

I/O overhead

Servers have limits on how many I/Os and/or megabytes per second they can handle (based on backplane, host bus adapters, operating system and so on). Spending some of these precious I/O resources just to copy writes and send them out (again) to another storage box lowers the maximum achievable I/O level somewhat. It does not have to be a huge issue, but it is something you have to be aware of.
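The effect can be estimated with simple arithmetic, assuming reads are served from one copy while every write is sent to both arrays. The workload (60,000 reads/s, 20,000 writes/s) and the host ceiling of 150,000 I/Os per second are hypothetical.

```python
# Host-side I/O amplification caused by mirroring writes to two arrays.
# Reads are served from one copy; every write is sent to each mirror copy.
# The workload and host limit used below are hypothetical.


def physical_iops(read_iops, write_iops, mirror_copies=2):
    """I/Os the host must actually issue for a given logical workload."""
    return read_iops + write_iops * mirror_copies


def capacity_spent_on_mirroring(write_iops, host_max_iops, mirror_copies=2):
    """Fraction of the host's I/O ceiling used only for the extra mirror writes."""
    return write_iops * (mirror_copies - 1) / host_max_iops


print(physical_iops(60_000, 20_000))                          # 100000
print(f"{capacity_spent_on_mirroring(20_000, 150_000):.1%}")  # 13.3%
```

The same reasoning applies to write bandwidth in MB/s: a write-heavy workload loses proportionally more headroom than a read-heavy one.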

Complex setup

The promise of ASM mirroring is simple. However, implementing it correctly in conjunction with database clustering (making sure data is really mirrored to different storage systems rather than within the same storage box, tuning remote I/O links, etc.) makes building a stretched cluster with Oracle ASM a non-trivial task. Even a tiny configuration error could mean the cluster does not keep running after a failure or, even worse, that you introduce data corruption or split brain issues that will only be detected after experiencing some kind of disaster. And at that point, we have more important things to be concerned about than figuring out why the cluster stopped working or got corrupted.

If you start investigating everything you should keep in mind when deploying an ASM mirroring based stretched cluster, you will find dozens of subtle configuration settings, timeout values, and known issues with (or without) workarounds that you have to take into account, such as the intricacies of Oracle Clusterware setup. You might find that the cluster is less highly available in real life than anticipated based on marketing promises.
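Many of these settings interact through their relative order rather than their absolute values. For instance, if I/O multipathing needs longer to fail over a dead path than the cluster is willing to wait for a disk, a node may be evicted before the path comes back. A small pre-go-live check like the one below can catch such inversions. The setting names and values are hypothetical placeholders, not actual Oracle Clusterware or multipath parameters; the real ordering rules have to come from the vendors’ documentation.

```python
# Check that timeout settings respect a required ordering (each must be
# strictly smaller than the next). Names and values are hypothetical.


def ordering_violations(settings, required_order):
    """Return (earlier, later) pairs where settings[earlier] >= settings[later]."""
    violations = []
    for earlier, later in zip(required_order, required_order[1:]):
        if settings[earlier] >= settings[later]:
            violations.append((earlier, later))
    return violations


# Hypothetical values in seconds.
settings = {"path_failover_s": 60, "cluster_disk_timeout_s": 45}
required = ["path_failover_s", "cluster_disk_timeout_s"]
print(ordering_violations(settings, required))
```

Keeping the required order in one list makes it easy to re-run the same check on every cluster after patches or configuration changes.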

Performance issues accessing remote data

If the same data can be read via two physical paths, either the database pseudo-randomly picks a path (which could be the path with the most overhead, so the database reads from the remote storage array) or you have to carefully set the preferred read path. Errors in this configuration can cause I/O bottlenecks and performance problems. Even with the preferred path set correctly, if the connection to the local storage fails, the remote path will be used instead, and this can have unpredictable effects on performance (not least because more than one cluster will probably suffer from the same failure, and suddenly dozens of servers start using the remote links).
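A back-of-the-envelope model of what read path selection does to average read latency, assuming hypothetical latencies of 0.5 ms for the local array and 1.0 ms for the remote one, and assuming that without a preference reads are split evenly across both copies. (In ASM the preferred path is set with the ASM_PREFERRED_READ_FAILURE_GROUPS initialization parameter, available since 11g.)

```python
# Expected read latency as a function of how many reads go to the remote array.
# Latency figures and the 50/50 split are hypothetical assumptions.


def expected_read_latency_ms(local_ms, remote_ms, remote_fraction):
    """Weighted average of local and remote read latency."""
    return (1 - remote_fraction) * local_ms + remote_fraction * remote_ms


scenarios = {
    "preferred read set correctly": 0.0,
    "no preference (reads split evenly)": 0.5,
    "local path lost": 1.0,
}
for name, remote_fraction in scenarios.items():
    latency = expected_read_latency_ms(0.5, 1.0, remote_fraction)
    print(f"{name}: {latency:.2f} ms")
```

This average understates the problem when many clusters fail over to the remote links at the same time, because the links themselves then become the bottleneck; the model ignores that queueing effect.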

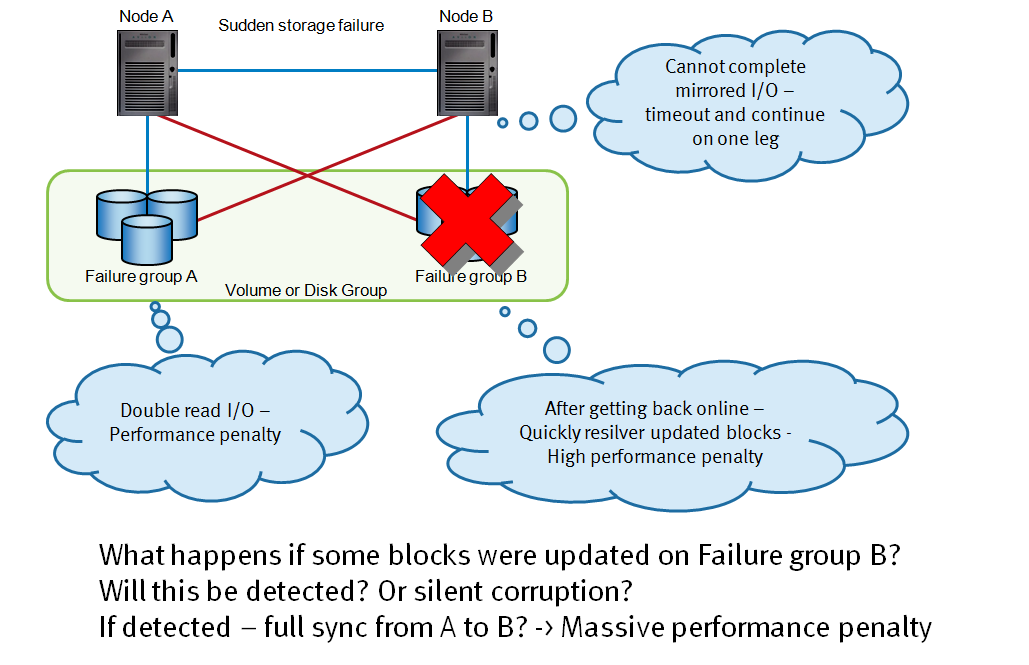

Incremental versus full data re-sync

If the connection to one of the storage systems fails, you typically don’t want the cluster to go down, so most clusters are configured to resume processing after a short timeout. Because updates are no longer written to both storage systems, the data has to be re-synchronized when the failed connection is restored. In older versions of ASM (and in most Unix volume managers today) this means a full re-sync across the remote data connection. For large data sets this can take a long time (during which you are unprotected against new failures), and the extra I/O processing can severely impact the performance of normal database transactions.
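To get a feel for "a long time", here is a rough estimate of full versus incremental re-sync duration. The 20 TB database, the 8 Gbit/s link, the 200 GB of changes and the assumption that 70% of the link is usable next to production traffic are all hypothetical.

```python
# Time needed to re-synchronize mirrored data over the inter-site link.
# Link speed, usable fraction and data sizes are hypothetical.


def resync_hours(data_tb, link_gbit_s, usable_fraction=0.7):
    """Hours to copy data_tb terabytes (decimal) over a link_gbit_s link."""
    bits_to_copy = data_tb * 1e12 * 8
    usable_bits_per_s = link_gbit_s * 1e9 * usable_fraction
    return bits_to_copy / usable_bits_per_s / 3600


full = resync_hours(20, 8)          # full copy of a 20 TB database
incremental = resync_hours(0.2, 8)  # only 200 GB changed while disconnected
print(f"full: {full:.1f} h, incremental: {incremental * 60:.0f} min")
```

Under these assumptions the full copy takes roughly eight hours of degraded protection and extra I/O, against a few minutes when only the changed data is copied.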

Oracle partly solved this problem in version 11g by keeping track of the changed extents that need to be re-synchronized when the links are restored. One requirement for this to work is that the storage system that lost its connections has not received any write I/Os in the meantime. If, for some reason, the state of the data in that storage system was not completely frozen, you risk either having to do a full re-sync anyway or, much worse, silent data corruption.
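The following toy model illustrates the principle and why the "frozen" requirement matters. While one copy is offline, the online side records which extents changed; on reconnection only those extents are copied. A stray write to the offline copy that is detected forces a full copy, while one that goes unnoticed leaves the copies silently different. This is not ASM’s implementation; the class and method names are hypothetical.

```python
# Toy model of incremental ("fast") mirror resync with change tracking.
# Not ASM's implementation; class and method names are hypothetical.


class MirroredVolume:
    def __init__(self, num_extents):
        self.copies = {"A": [0] * num_extents, "B": [0] * num_extents}
        self.offline = None            # name of the copy that lost its connection
        self.changed = set()           # extents written while a copy was offline
        self.offline_touched = False   # True if a stray write was *detected* offline

    def take_offline(self, name):
        self.offline = name
        self.changed.clear()
        self.offline_touched = False

    def write(self, extent, value):
        """Normal database write: goes to every copy that is still online."""
        for name, data in self.copies.items():
            if name != self.offline:
                data[extent] = value
        if self.offline is not None:
            self.changed.add(extent)

    def stray_write_to_offline(self, extent, value, detected):
        """An unwanted write reaching the supposedly frozen copy (e.g. a cache flush)."""
        self.copies[self.offline][extent] = value
        if detected:
            self.offline_touched = True

    def resync(self):
        """Bring the offline copy back in sync; return the number of extents copied."""
        target = self.offline
        source = "A" if target == "B" else "B"
        if self.offline_touched:
            extents = range(len(self.copies[source]))  # change list can't be trusted
        else:
            extents = sorted(self.changed)             # incremental resync
        for extent in extents:
            self.copies[target][extent] = self.copies[source][extent]
        self.offline = None
        self.changed.clear()
        return len(extents)

    def copies_match(self):
        return self.copies["A"] == self.copies["B"]


vol = MirroredVolume(1_000)
vol.take_offline("B")
vol.write(7, 42)
vol.write(8, 43)
print(vol.resync(), vol.copies_match())   # 2 True

vol.take_offline("B")
vol.write(7, 44)
vol.stray_write_to_offline(500, 99, detected=False)
print(vol.resync(), vol.copies_match())   # 1 False -> silent divergence
```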

Need to set up for each cluster individually

If you only have one cluster that requires remote replication, you deploy the host mirroring solution once and you’re all set. Note that you would probably also want a second, similar setup for testing purposes. Most of my customers, however, have many databases and applications for which they require remote replication (as a foundation for disaster recovery and/or high availability). So for each separate cluster you need to install and configure the required components, and make sure they work as expected when an outage happens. The more clusters, the higher the risk of silent configuration mistakes (which will reveal themselves only when you really don’t have the time to resolve them).
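The growth of that risk can be quantified under a simplifying assumption: each cluster is built independently and has the same small chance of carrying a hidden mistake. With a made-up figure of 2% per cluster, the chance that at least one of ten clusters is misconfigured is already about 18%, and about 64% for fifty.

```python
# Probability that at least one of n clusters carries a hidden configuration
# mistake, assuming each is built independently with the same (hypothetical)
# per-cluster error probability.


def p_at_least_one_mistake(clusters, p_single):
    return 1 - (1 - p_single) ** clusters


for n in (1, 10, 50):
    print(f"{n:>2} clusters: {p_at_least_one_mistake(n, 0.02):.0%}")
```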

Need to design this solution for each different mirroring tool

Sure, if you’re a database administrator managing only Oracle databases, and you have a bunch of them that require high availability or disaster recovery, then you just copy your best practice configuration to many individual clusters (and maintain them as new versions, patches, etc. arrive). In real life, I know many customers who have more than one database platform (such as UDB/DB2, MySQL, SQL Server, PostgreSQL and others).

They run on different platforms (Linux, various Windows versions, a few UNIX flavours), use different volume managers and filesystems, etc. You would have to design, test and implement a mirroring solution for each individual database stack, and consider non-database data as well. Complexity and risk increase with the number of different stacks you have to manage and with the number of instances of each stack that need to be deployed.

Static dual site configuration only

How do you migrate one of the datacenters to a new location? Do you need to shut down one half of the cluster? How hard is it to set up a new node in the cluster, either on one of the two locations or on a new, third active site?

In the picture above you can see that when scaling up to a 3+3 node cluster (not unrealistic if you need at least 3 nodes to run a workload even in disaster scenarios), you will have six active nodes, each mirroring its data to two storage systems, so you have twelve active read I/O paths and six cluster nodes that have to keep each other’s data consistent.

These things will be very hard, if not impossible, to perform with a standard dual-site host mirroring configuration.
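The numbers in the 3+3 example follow from simple counting, assuming one storage array per site and that every node can read from every array. Counting node pairs is only a rough proxy for coordination effort, but it shows how quickly things grow if a third active site were added.

```python
# Counts for a stretched cluster with one storage array per site.
# "Node pairs" is only a rough proxy for coordination effort.


def stretched_cluster_counts(nodes_per_site, sites):
    nodes = nodes_per_site * sites
    read_paths = nodes * sites              # every node can read from every site's array
    node_pairs = nodes * (nodes - 1) // 2   # pairs of nodes that must stay coherent
    return nodes, read_paths, node_pairs


print(stretched_cluster_counts(3, 2))  # (6, 12, 15)
print(stretched_cluster_counts(3, 3))  # (9, 27, 36)
```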

Disaster recovery testing

One very important aspect of disaster recovery is testing. You want to feel comfortable that if all hell breaks loose, you can trust the fail-over mechanisms and know for sure that you are able to restart or continue processing without data loss or long outages due to recovery issues. To make sure everything works, I advise my customers to perform frequent disaster recovery testing, for example by simulating failure scenarios such as shutting down servers (using the power button), pulling cables, simulating disk failures, and so on.

In an active/active cluster, people are often reluctant to force failures, as this could bring down the whole cluster if it is not properly configured (but isn’t that exactly what we want to test in the first place? A classic chicken and egg problem). On top of that, disconnecting one of the storage systems from the configuration immediately causes performance issues, and even more degradation when the connection is restored (due to the re-sync). In the meantime, you are not allowed to modify data on the disconnected storage box, as this would disable incremental re-silvering of production data, making problems even worse. As a result, hardly anyone tests their cluster after it goes into production. Configuration problems could silently be introduced and lie dormant for years, until something happens and the shit hits the fan.

When did you perform a full-scale disaster recovery test in your landscape by the way?

Integration with storage tooling

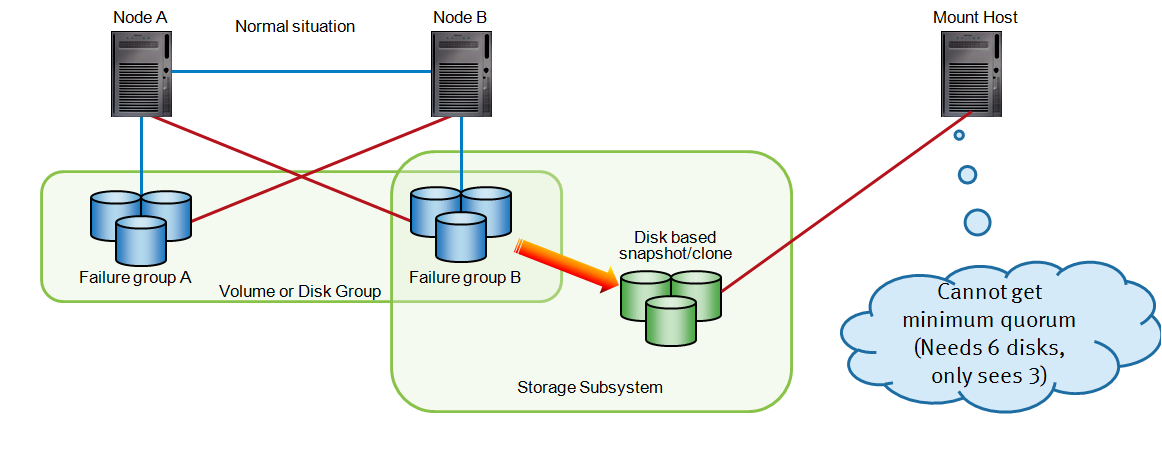

Working for EMC, I don’t like having to point out limitations in our capabilities. But I have seen a few customers who had deployed various host based mirroring solutions and ran into problems with EMC’s replication tools. To be precise, some of our tools cannot (out of the box) handle the creation of snapshots across multiple storage systems (that said, I doubt whether our competitors’ tool sets can do better).

The problem might not be obvious at first, so I’ll try to explain. Assume you use a volume manager (ASM or a Unix LVM) to mirror data across two sites, using two EMC storage systems. Now you use an automated tool like EMC Replication Manager or EMC NetWorker PowerSnap to configure and manage application (i.e. database) clones (for purposes of backup, testing, etc.). The replication tool queries the host for the volumes to copy and gets a list of volumes that physically reside on two separate storage systems (in ASM these would be two different failure groups). To make a consistent snap, you need a feature that allows snapshots across volumes on different storage boxes that logically look as if they were cloned at exactly the same time. EMC has features called “consistency groups” and “multi-session consistency” to allow this. But the management tools do not integrate very well with them (as multi-session consistency is not used by many customers).
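Why a cross-array snapshot needs special support can be shown with a small model. If the two arrays are snapped one after the other while writes continue, the combined image can contain a data change without the log record it depends on. A consistency-group style snapshot avoids this by holding new writes, snapping every member, then releasing the writes. The classes and functions below are hypothetical; this is not how EMC consistency groups or multi-session consistency are implemented, and none of these names are EMC APIs.

```python
# Toy model of why snapshots across two arrays need coordination.
# Arrays, workload and checks are hypothetical; this is not an EMC API.
from dataclasses import dataclass, field


@dataclass
class Array:
    name: str
    blocks: dict = field(default_factory=dict)


def transactions(redo, data, count):
    """Dependent writes: log each change on one array, then apply it on the other."""
    for txn in range(1, count + 1):
        redo.blocks[f"log{txn}"] = txn
        yield
        data.blocks["row"] = txn
        yield


def snapshot_one_by_one(arrays, workload, writes_between=2):
    """Snap each array in turn while the workload keeps writing in between."""
    images = {}
    for array in arrays:
        images[array.name] = dict(array.blocks)
        for _ in range(writes_between):
            next(workload, None)
    return images


def snapshot_consistency_group(arrays):
    """Hold writes (the workload is not advanced), snap every member, release."""
    return {array.name: dict(array.blocks) for array in arrays}


def is_consistent(images):
    """A data change is only valid if its log record is in the image too."""
    row = images["data"].get("row")
    return row is None or f"log{row}" in images["redo"]


redo, data = Array("redo"), Array("data")
work = transactions(redo, data, count=3)
print(is_consistent(snapshot_one_by_one([redo, data], work)))  # False
print(is_consistent(snapshot_consistency_group([redo, data])))  # True
```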

The alternative would be to (force-)mount a partial disk group (in ASM you would mount from a single failure group and ignore the missing disks). It can be made to work, but only with a lot of workarounds, hacks and custom scripts. You probably cannot use the application snapshot managers, which were invented to get rid of complex scripting in the first place. Manual scripting results in more risk, cost and complexity.

Block corruptions due to partial mirrored writes or updates to the offline disks

Care should be taken that, if one of the two storage systems becomes unavailable (for whatever reason), there are absolutely no changes to the data on that box. Updates to the disconnected system can happen due to, for example:

- Cache flushes from any component in the I/O path. Normally this should not happen, but if you have exotic components such as virtualization controllers that cache writes, you might be in trouble. Also, wrong filesystem mount options or software/firmware bugs could cause serious problems

- A server node that should be down but keeps running and sending I/O to the storage system, due to cluster software bugs, wrong voting disk configurations, etc.

- Rolling disasters that cause multiple failures in a row, at different timestamps

- Flaky I/O multipathing software (e.g. software that does not respond correctly to intermittent cable failures)

- Operator errors, e.g. trying to force-restart a disconnected node while the others are still running production

- Operator intervention (related to operator errors, but here the operator is actually trying to get things right). Assume site B goes down, then site A goes down as well. The operator tries to restart and chooses site B. If this succeeds, production is running against an older dataset than the state of site A (and you probably lose some business transactions, although technically this is not a problem)
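The last scenario boils down to knowing which copy is newest. One possible safeguard, assumed here rather than taken from any specific product, is to record a monotonically increasing write sequence number on each site’s storage and refuse to restart from a copy that is behind. A minimal sketch with hypothetical names:

```python
# Refuse to restart production from a site whose data is older than another's.
# Assumes each site's storage records the highest write sequence number it holds;
# names are hypothetical.
from dataclasses import dataclass


@dataclass
class SiteState:
    name: str
    last_write_seq: int


def choose_restart_site(sites, requested):
    newest = max(sites, key=lambda site: site.last_write_seq)
    chosen = next(site for site in sites if site.name == requested)
    if chosen.last_write_seq < newest.last_write_seq:
        raise ValueError(
            f"site {chosen.name} is behind site {newest.name} "
            f"({chosen.last_write_seq} < {newest.last_write_seq})"
        )
    return chosen


# Site B failed first (at write 1000); site A kept running until write 1250.
sites = [SiteState("A", 1250), SiteState("B", 1000)]
print(choose_restart_site(sites, "A").name)  # A
try:
    choose_restart_site(sites, "B")
except ValueError as err:
    print("refused:", err)
```

Such a check only helps if site A’s storage can still be read after the failure; it turns a silent choice into an explicit one, it does not recover the lost writes.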

Another potential problem concerns what happens to incomplete mirrored writes.

Assume database node A wants to write an update to a datafile, and the ASM mirror policy causes the server to write two identical updates, one to each storage box. The time it takes to complete an I/O is not fixed and depends on many factors such as I/O queueing, path distances and delays, storage processor load, state of the storage cache and much more. In some cases the local storage system will be the first to complete the I/O; in other cases the remote storage system will be faster. As an Oracle database is a complex system with a lot of parallel I/O going on, at any moment on a heavily loaded system there will be I/Os already completed locally but not yet remotely, and vice versa.

If, in that situation, connectivity to one of the storage systems is lost, the disconnected system enters a state where some more recent writes have completed while some older writes have not been written yet. Your first reaction would probably be that the Oracle redo logging mechanism takes care of such inconsistencies (the “ACID” principle). To date, I haven’t been able to get anyone, Oracle people or other experts, to explain this to me. One of the complicating factors is that the redo logs are mirrored as well (and therefore suffer from partial writes too).
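The state described here can be reproduced with a tiny timing model. Each mirrored write has a hypothetical issue time and remote completion latency; cutting the link at a given moment shows which writes made it to the remote copy. The model only shows that the remote copy need not contain a clean prefix of the issued writes; it says nothing about how the database’s recovery handles that state.

```python
# Which mirrored writes have reached the remote copy when the link is cut?
# Timings are hypothetical; each write is (write_id, issued_at_ms, remote_latency_ms).


def remote_image_at_cut(writes, cut_time_ms):
    """IDs of writes that completed on the remote copy before the link failed."""
    return sorted(
        write_id
        for write_id, issued_at, latency in writes
        if issued_at + latency <= cut_time_ms
    )


def is_clean_prefix(image):
    """True if the image holds writes 1..k with no holes (issue order preserved)."""
    return image == list(range(1, len(image) + 1))


writes = [
    (1, 0.0, 3.0),  # issued first, but queued behind other I/O on the remote side
    (2, 0.5, 1.0),
    (3, 1.0, 1.0),
]
image = remote_image_at_cut(writes, cut_time_ms=2.0)
print(image, is_clean_prefix(image))  # [2, 3] False
```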

During a specific customer discussion I heard that they had experienced these kinds of problems with Unix volume managers, but we could not find the root cause. I think it was due to the complexity of the stack (clustered volume manager with mirroring, cluster-aware filesystem, Oracle database), where each of these components has a journal (Oracle calls this the redo log), and my take is that one of these components implemented the I/O mirroring or the journalling incorrectly. They asked the OS vendor to explain in detail, but after a few deep dive questions from me and my peers at the customer, some questions remained unanswered. As a result, they dropped the implementation project and opted for a better solution.

That said, I truly believe that a correctly implemented Oracle RAC cluster, with only ASM as the volume manager, should not experience such subtle corruptions. This is due more to Oracle’s very robust consistency checking and redo logging, and has, in principle, nothing to do with host based mirroring as a replication tool, as that happens at least one level lower in the I/O stack. Still, I have some unanswered questions on this, and I would appreciate it if anybody can point me to the right resources.

All host based mirroring tools that I am aware of, whether based on the host OS or third party add-ons, were originally invented as software RAID implementations to compensate for disk failures (rotating rust with moving mechanical arms is notoriously unreliable in comparison with the other components in computing).

People have started to use these disk redundancy tools as disaster recovery solutions, and some vendors added extra features to support this. Fundamentally, I don’t believe in this approach, and I think you should separate disaster recovery replication from the host application stack, for the reasons mentioned.

My preferred method should be easy to guess (I work at EMC for a reason), but I will explain these solutions in future posts.
