In my previous posts I described how Oracle ASM can be used to build stretched clusters, and I pointed out some limitations of that scenario. I am far from the first to do so: some of EMC’s competitors have attempted to build products, features and solutions to overcome some of the limitations of host mirroring.

A while ago, some guys I met from an EMC partner asked me why EMC, the market leader in external storage and a premium Oracle technology partner, had not offered a solution for these limitations. They pointed to a number of products from competitors that (allegedly) solved the problem already, and to the architectural simplicity of these solutions.

At that time I had no good answer (which does not happen to me very often). I was not aware of how these products worked, so I asked some questions about that. In that period I was also confronted by our enterprise customers, who started demanding an EMC solution for stretched clustering, so I started digging. Could it be that EMC had been overtaken by some of these alien storage start-up companies in continuously available storage solutions? It seemed to be the case.

Until I dug a bit deeper and found that EMC had not fallen into the same trap as some other vendors had.

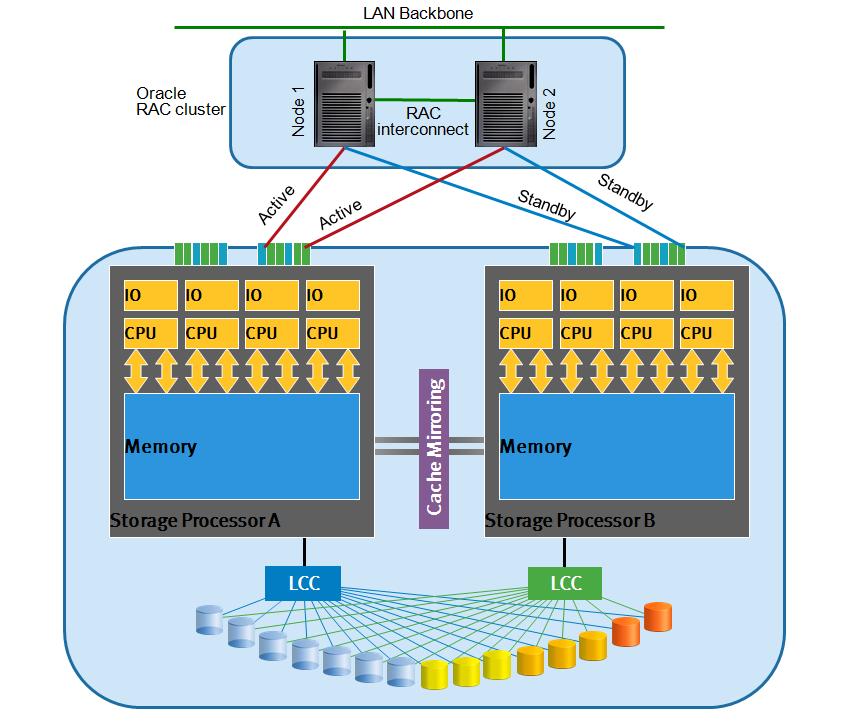

I came across a few different architectures to provide “stretched storage”. To explain, I need to show the architecture of a mid-range storage system first. Most modern architectures, including EMC CLARiiON / VNX, look like this.

These systems typically have dual storage processors, each with cache memory and each with connections to all disks (disks are dual-ported). If a storage processor goes down, it loses its cache contents, and this could result in serious data corruption. Therefore modern mid-range architectures mirror (at least) the write cache to the other storage processor. Now if a node goes down, the dirty cache slots are flushed out by the surviving node. Technically, from that moment on, the surviving processor may no longer keep written data in cache, because if it went down as well, you would still have data corruption. EMC goes a bit further by the way, by keeping the mirrored cache alive even if the owning storage processor has died. I believe some storage vendors, who prefer getting good performance benchmark results over keeping customers’ data consistent, allow write caching even if cache mirroring no longer works. This, in my opinion, is pretty dangerous, but probably nobody will notice because Murphy is asleep anyway and the chance of the second processor going down is astronomically low (yeah right).
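To make the caching rule concrete, here is a minimal sketch of the generic behaviour described above: writes are acknowledged from cache only while the peer can hold a mirror copy, and the survivor flushes everything and falls back to write-through once its peer is gone. All class and method names are hypothetical and purely illustrative. This is not how any particular array (EMC or otherwise) is implemented, and it deliberately does not model the extra step of keeping the mirrored cache alive.

```python
from dataclasses import dataclass, field


@dataclass
class StorageProcessor:
    name: str
    alive: bool = True
    dirty: dict = field(default_factory=dict)   # own writes not yet on disk
    mirror: dict = field(default_factory=dict)  # copy of the peer's dirty writes


class DualControllerArray:
    """Toy model of a dual-processor array (hypothetical, for illustration)."""

    def __init__(self):
        self.sp = {"A": StorageProcessor("A"), "B": StorageProcessor("B")}
        self.disk = {}

    def _peer(self, name):
        return self.sp["B" if name == "A" else "A"]

    def write(self, owner, block, data):
        sp, peer = self.sp[owner], self._peer(owner)
        if not sp.alive:
            raise RuntimeError(f"SP {owner} is down")
        if peer.alive:
            # Safe to acknowledge from cache: the peer holds a second copy.
            sp.dirty[block] = data
            peer.mirror[block] = data
            return "cached"
        # No mirror partner left: go straight to disk before acknowledging.
        self.disk[block] = data
        return "write-through"

    def fail(self, name):
        sp, peer = self.sp[name], self._peer(name)
        sp.alive = False
        sp.dirty.clear()    # the failed processor loses its cache contents
        sp.mirror.clear()
        # The survivor flushes the mirrored copy and its own dirty slots.
        self.disk.update(peer.mirror)
        self.disk.update(peer.dirty)
        peer.mirror.clear()
        peer.dirty.clear()


array = DualControllerArray()
print(array.write("A", 1, "x"))   # acknowledged from mirrored cache
array.fail("A")
print(array.write("B", 2, "y"))   # survivor no longer caches writes
```

The design choice that matters is the `if peer.alive` test in `write`: a system that skips it keeps its benchmark numbers after a failure, at the price of a single point of failure for unflushed data.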

In any case, each volume on the system is “owned” at any moment by one of the two storage processors. If the owning processor dies, the other one has to take over the access to its disks and provide the I/O access to the application host. The logical moving of a LUN from storage processor A to B is what EMC calls “trespassing”. Depending on the storage access protocol (iSCSI, Fibre Channel, NFS, etc.) you may or may not need to configure the host for this. If the front-end, for example, talks iSCSI, all the surviving processor has to do is to move the MAC address, IP address and the connection “state”. The IP routers will take care of the rest and the host will (hopefully) never notice (except, probably, for a slight delay in I/O). For Fibre Channel it’s a bit more complicated: you need host awareness, by using I/O multi-pathing software (such as EMC’s PowerPath), for trespassing to happen successfully.
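The ownership and trespass logic can be sketched in a few lines. This is a simplified illustration under my own assumptions, with hypothetical names throughout. It is not the behaviour of PowerPath or of any real multi-pathing driver, which have to deal with far more states than this.

```python
class Array:
    """Toy array where each LUN is owned by exactly one storage processor."""

    def __init__(self, ownership):
        self.owner = dict(ownership)          # LUN name -> "A" or "B"
        self.alive = {"A": True, "B": True}

    def fail_sp(self, sp):
        self.alive[sp] = False

    def trespass(self, lun, to_sp):
        if not self.alive[to_sp]:
            raise RuntimeError(f"cannot trespass to dead SP {to_sp}")
        self.owner[lun] = to_sp

    def io(self, lun, via_sp):
        if not self.alive[via_sp]:
            raise ConnectionError(f"path to SP {via_sp} is dead")
        if self.owner[lun] != via_sp:
            raise PermissionError(f"SP {via_sp} does not own {lun}")
        return f"ok via SP {via_sp}"


def multipath_io(array, lun, paths=("A", "B")):
    """Hypothetical host-side logic: try each path, trespass if none works."""
    for sp in paths:
        try:
            return array.io(lun, sp)
        except (ConnectionError, PermissionError):
            continue  # dead path or non-owning processor: try the next path
    # No path reached the owner, so move the LUN to a surviving processor.
    for sp in paths:
        if array.alive[sp]:
            array.trespass(lun, sp)
            return array.io(lun, sp)
    raise RuntimeError("all paths are dead")


array = Array({"lun0": "A"})
print(multipath_io(array, "lun0"))   # served by the owning processor
array.fail_sp("A")
print(multipath_io(array, "lun0"))   # LUN trespassed to the survivor
```

Note that at every moment the LUN is served by one processor only; the other path is just a standby route to the same data.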

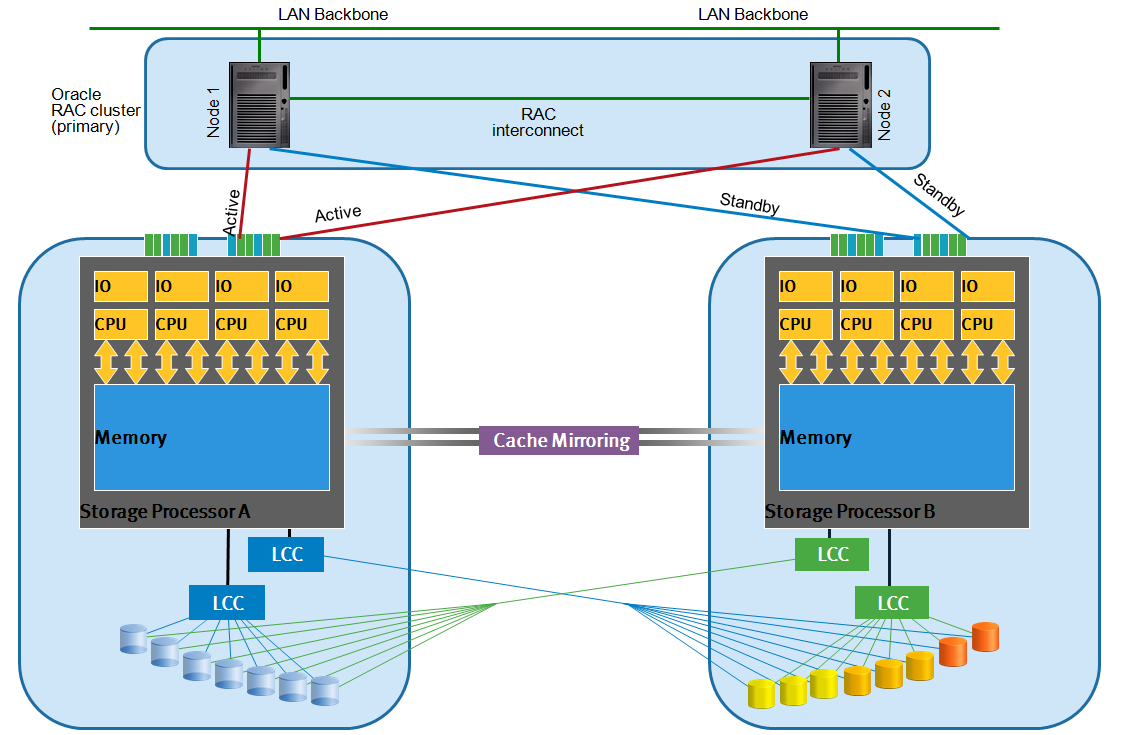

If you want to build a “stretched” storage cluster, the obvious way would be to just put the storage processors at a distance, in separate locations.

Typically the distance cannot be much further than a few hundred meters, but it’s a start. Now if one storage node fails in one data center, the surviving node takes over the access to the other’s disks and, as explained, the associated MAC address, iSCSI target ID, NFS export, LUN ID, etc. The trespassing (regardless of what storage protocol you use) would reroute storage I/O to the surviving node and you can continue processing. Problem solved.

Or…?

First of all, you would have to mirror all data because if the complete storage system fails (not just the processor) the data would be completely unavailable. Mirroring across distance can have serious performance impacts, but that’s unavoidable and you can solve it (theoretically) by adding resources (bandwidth, CPU, I/O).

Second, these types of clusters are really active/passive. Every LUN is owned by one storage processor, and is not available for read/write across two storage processors simultaneously. The confusion comes from marketing people who claim the systems are active/active because each storage node is processing I/O (just not against the same volumes). So a stretched Oracle RAC cluster would have one node performing I/O against the local node (fast) while the other one performs all its I/O against the remote node (slow). That’s not what I call true scale-out, and this solution is only marginally better in terms of availability than what EMC has done for over 15 years using storage mirroring with EMC SRDF, MirrorView and the like. The only perceived advantage is seamless fail-over, but this does not really exist either, as I will explain.

The really big question is: how does the surviving node know whether its partner has died, or is still active but has just lost communications? In other words, how do you resolve split brain issues and protect against data corruption?

The vendors’ documentation told me the answer: they don’t. They will either have a primary node that keeps running if the secondary fails (but not vice versa) or stop completely. Up till now, most vendors cannot offer continuous availability in all possible failure scenarios.

A few exceptions exist; some implementations use quorum disks within their storage cluster. Now if you would have (at minimum) three quorum disks across three storage nodes, each in a separate location, then it could work – at least from an automated fail-over perspective. Still it does not solve the problem with active read/write I/O against the same volume in all locations, and there are some other caveats.
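Why a third location matters can be shown with a simple majority rule: a group of voters that can still see each other may continue only if it holds a strict majority of all votes. The sketch below is a generic illustration with hypothetical site names. It is not the quorum algorithm of any specific product.

```python
# Three voters, each in its own location (hypothetical names).
VOTERS = frozenset({"site1", "site2", "witness"})


def has_quorum(visible_voters, all_voters=VOTERS):
    """True if the visible voters form a strict majority of all voters."""
    return len(set(visible_voters) & all_voters) * 2 > len(all_voters)


def surviving_groups(partitions, all_voters=VOTERS):
    """Given the groups left after a communication failure, return the
    groups that may keep processing I/O. All others must stop at once."""
    return [sorted(group) for group in partitions
            if has_quorum(group, all_voters)]


# Link between the two data centers is cut; site1 still sees the witness.
print(surviving_groups([{"site1", "witness"}, {"site2"}]))

# Every link is down: nobody has a majority, so everybody stops.
print(surviving_groups([{"site1"}, {"site2"}, {"witness"}]))

# With only two voters, a cut link leaves neither side with a majority.
two = frozenset({"site1", "site2"})
print(surviving_groups([{"site1"}, {"site2"}], two))
```

Because two disjoint groups can never both hold a strict majority, at most one side keeps running, which is exactly the protection against split brain. With only two voters the same rule stops both sides, which is why two-node designs end up with a fixed “primary wins” policy instead.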

So let’s try to make a list of requirements that a storage solution for active/active stretched clustering should meet.

Storage Requirements for a true stretched RAC cluster

Must have (to make it working and enterprise-grade, the bare necessities):

- As simple as possible – but not simpler

This disqualifies a lot of solutions because they are either way too complex (and therefore increase risk) or too simple (and therefore respond differently to failures than expected).

- Keeps running, without failover or recovery, in all possible failure scenarios (including rolling disasters)

This disqualifies all solutions that don’t have a tie-breaker, voting, or witness component in a 3rd location.

- Still provides redundancy on the surviving location after a site failure

This disqualifies all implementations that just pull storage servers apart. In case of a site failure followed by a component failure in the surviving processor (e.g. a CPU) you would still go down.

- Is protected against split brain issues

Disqualifies all solutions that don’t immediately abort processing on all but one node if all communications fail.

- Is protected against silent (or screaming) data corruptions

Disqualifies systems that play poker with your data, e.g. by not mirroring write cache in case of node failures, not protecting write cache, or by messing with the order of writes between local and remote storage.

- Keeps performance impact to a minimum

Disqualifies all implementations that require the host at the remote site to talk to the storage at the other site (because its own storage node is passive for that volume).

- Is capable of recovering to normal mode of operation without serious impact on service levels

Disqualifies all solutions that require full re-sync, require massive host I/O to resilver disks, or cannot prioritize primary host I/O over synchronisation I/O.

- Allows failure testing without downtime

Disqualifies all architectures that cannot “split” the two locations, keep the primary running production, let the secondary perform failover testing (destructively), and then re-sync the two sites without serious performance problems.

Additional (optional but still very important) requirements:

- Does not restrict existing functionality (such as snapshots, backups, etc)

This rules out any implementation that prevents storage snapshots or backup integration, or that requires complex multi-session consistency to make them work, especially host-based volume managers that create volume or disk groups out of both local and remote storage boxes.

- Allows failure testing without compromising continuous availability

Ideally failover testing should be done on a copy of production, so that storage mirroring/replication stays up and you can test failover without breaking the link. This allows failover testing during weekdays instead of carefully planned weekends (that will rarely happen).

- Is a generic solution for multiple operating systems, databases and/or applications

Should support not just Oracle but many other platforms, and should not be completely dependent on one operating system, database or other point solution.

- Allows for business application landscape consistency

As businesses move into Service Oriented Architectures and connect multiple systems together creating one business application landscape, it should be possible to fail-over multiple systems as a whole (being consistent on the transaction level).

If you are seriously considering an Oracle active/active solution, I recommend you go through these requirements carefully and ask your solution vendor whether they can meet them.

And don’t just believe them: ask for references and cross-check the information carefully. You’re going to spend lots of money (Dirty Cache) on a continuous availability solution, so you might as well be familiar with all the details and be sure it works when you need it most.
