Last year, EMC announced a new virtualization product called VPLEX. VPLEX allows logical storage volumes to be accessible from multiple locations. It boldly goes beyond existing storage virtualization solutions (including those from EMC) in that it is not just a storage virtualization cluster but a storage federation platform, allowing one virtualized storage volume, built from one or more physical storage volumes, to be dynamically accessible from multiple locations, as if they were connected through a wormhole.

Instead of using classic clustering and replication methods to replicate and provide access to data over distance and protect from component failures, it uses cache coherence between multiple nodes – where the nodes are more or less stateless and you can dynamically add or drop nodes, regardless of the location (currently only two-cluster configurations are supported). In a way, it is comparable to Oracle RAC where nodes are aware of each other’s cache and session information.

The main purpose of VPLEX is data mobility for virtual (cloud) environments. However, when VPLEX was still in an early stage of development (after EMC had acquired YottaYotta, which is the foundation for this technology), I thought that it could also be the answer to the classic storage mirroring limitations, in case customers wanted to build disaster-tolerant Oracle RAC (stretched) clusters. When asking around, I found that some of my colleagues who deal with Oracle solutions had also received requests from customers for supporting true stretched Oracle RAC clusters. So I started discussions with various EMC product engineers to add a special feature to make this truly work for Oracle. In the end, basically the only feature VPLEX was missing was the “VPLEX witness”, another word for tie-breaker or arbitrator in other cluster solutions (as discussed in my previous posts). The witness provides continuous availability in case one of the VPLEX clusters becomes unavailable for some reason (power failure, connectivity loss, datacenter problems and so on).

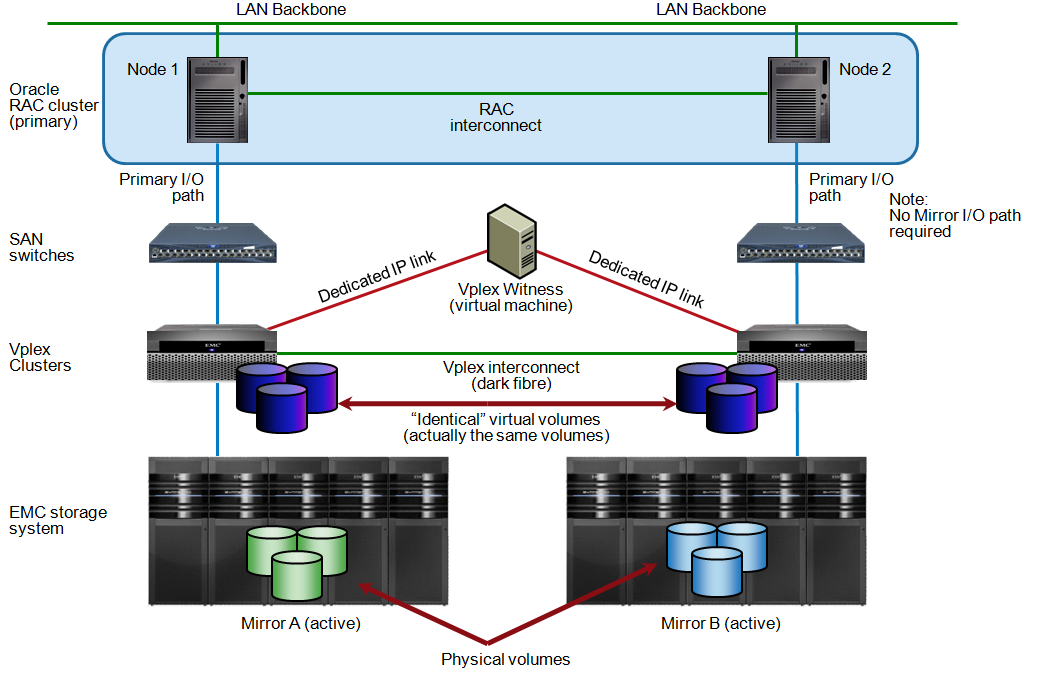

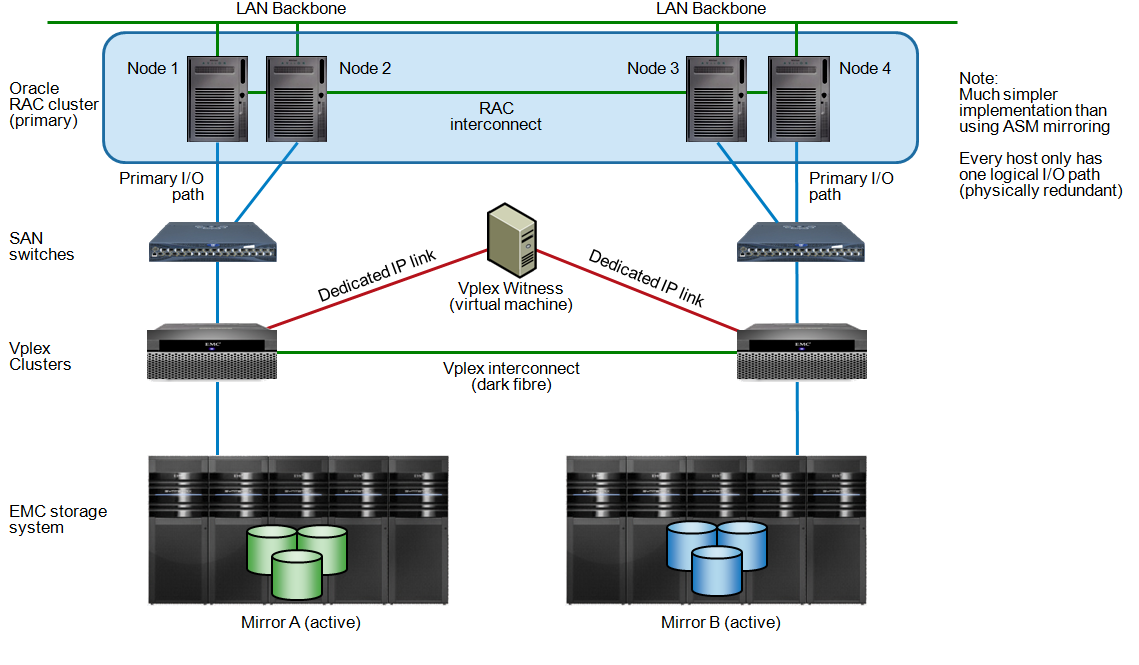

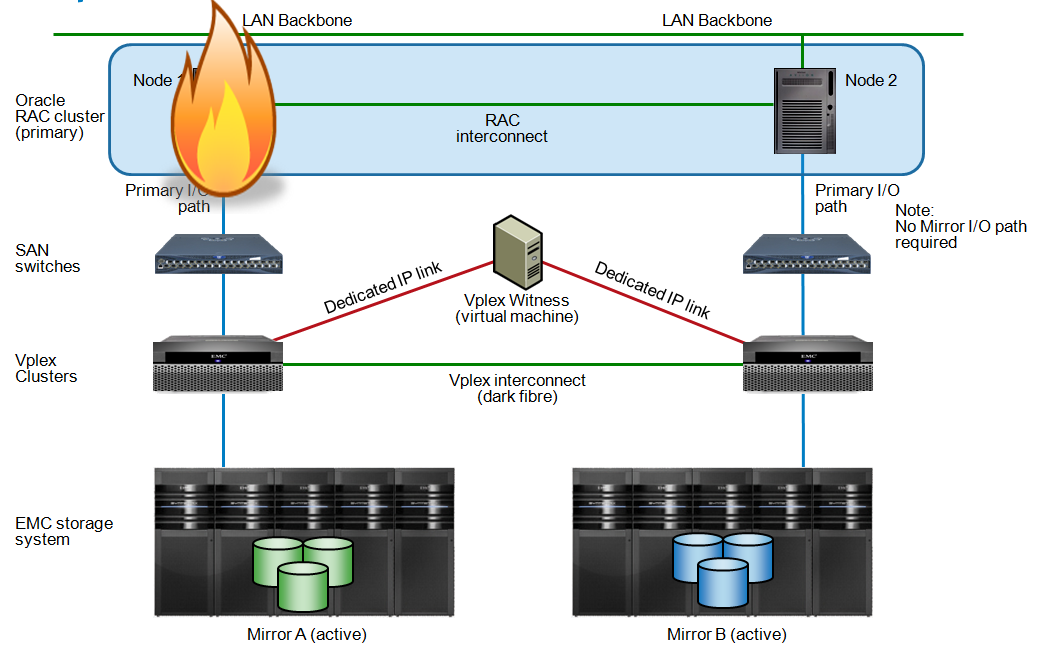

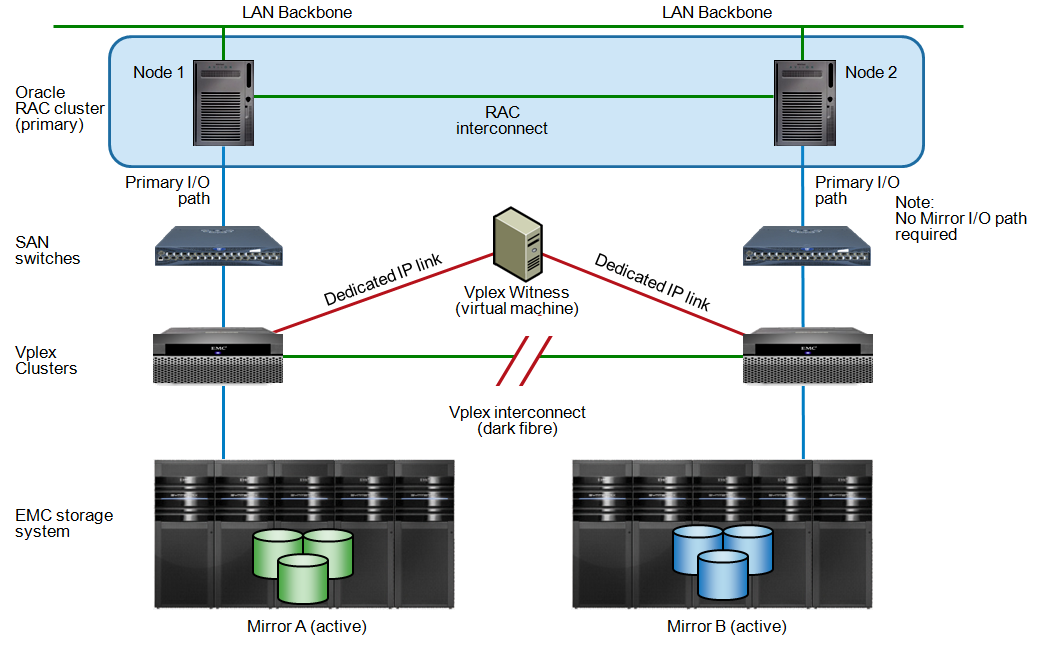

The end result is that we can now build an Oracle stretched RAC cluster (also known as Extended RAC, Geo-RAC etc), where no special mirroring setup is required with Oracle ASM or any other volume manager or host based replication technology. You can set up the geographically dispersed cluster much like you would configure any local cluster – it has no clue that the storage is actually federated over distance. For Oracle insiders: you would use the ASM “external redundancy” (no mirroring) policy to make it work.

So how does it work?

Describing how VPLEX works in detail is beyond the scope of this blog post. But here is a short intro – refer to EMC documentation for more details.

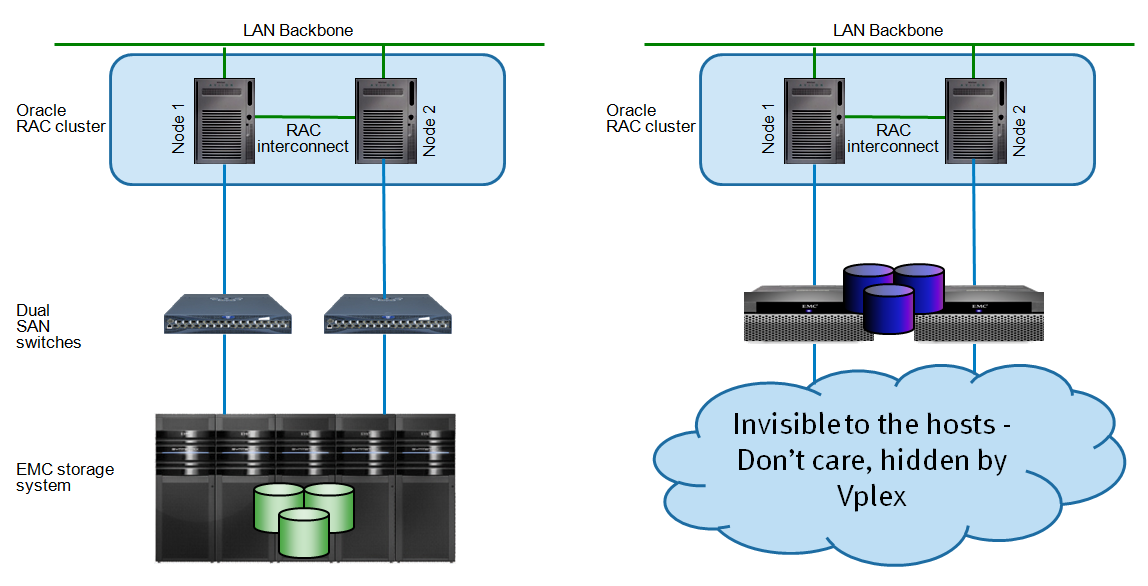

VPLEX is a storage virtualization layer in between actual storage and servers and acts as a storage federation platform. Applications don’t need to know anymore where storage physically is located, how it is configured or replicated, and it does not matter anymore from where (i.e. which host, location, adapter etc) the storage is accessed.

It is dynamic (you can add, change, move or remove things like storage boxes, VPLEX cluster nodes, host access etc. on the fly), virtual (no hardware dependencies), scalable and performant. VPLEX will provide the same identity for storage volumes (i.e. SCSI LUN IDs and so on) at both locations, so a volume presented at one location looks exactly like the same volume presented at the other location.

For clustered applications like Oracle RAC, it is important to understand that VPLEX offers distributed access to a storage volume (logical disk) as if it was only one – no matter through which connection (HBA, channel, cluster node, etc) you are accessing it. Actually, it is the same one, just represented by different cluster nodes, much like an Oracle RAC database is actually one database but represented on different database nodes. So in a twin datacenter setup, each with a VPLEX cluster, a storage system and an Oracle database cluster node, every Oracle node would see the virtual, stretched storage volume as if it was not even replicated. You would configure Oracle as if it was using just one storage system. No messing around with mirroring, setting read path priorities, failure groups and all that stuff – as far as the database is concerned, there is only one set of storage volumes (and in a way, logically speaking, this is true).

Because every database node is physically only connected to the local VPLEX cluster, it will always read from where the data is closest to the server. VPLEX will automatically read from the local storage where possible and even do caching (read-only) to boost performance a bit (although this is a side effect and not the primary reason for implementing VPLEX).

Adding nodes is relatively simple – almost like adding nodes to a local cluster with one storage box.

Failure scenarios

To understand failure scenarios with VPLEX, it is important to understand that you would configure an Oracle voting disk on VPLEX. You might argue that you’re now silently mirroring the voting disk, as I pointed out in “Stretched Clustering Basics”, which would result in problems with split brain issues. Normally this would be the case but here, VPLEX resolves the split brain before Oracle does.

Host failure

RAC node failures would be handled exactly like in a local cluster – the remaining node(s) will keep running and connections to the failed node will be re-initiated to the remaining node(s). The surviving node(s) will recover the failed node’s transactions, etc. The split brain situation is resolved by the voting disk.

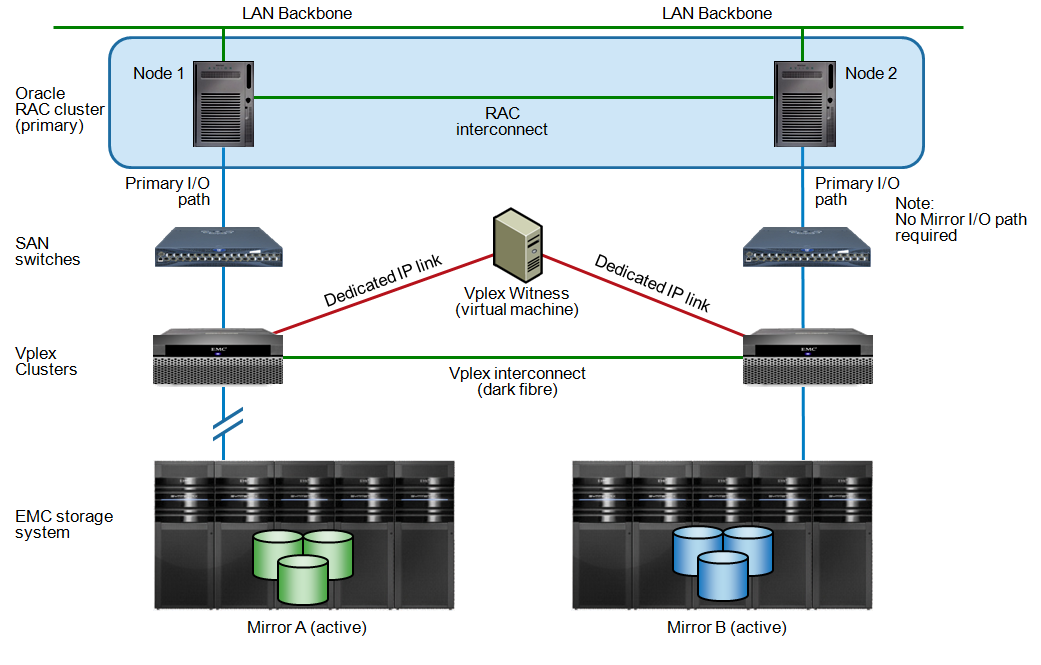

Storage failure

If one storage system would become unavailable, the database cluster would still read and write to the local VPLEX cluster and cache reads locally as much as possible – only serving read I/O from the remote storage system where needed. Writes will be forwarded to the remote VPLEX cluster (and written to disk directly) so data integrity is preserved in all cases. There would be a performance penalty, but probably much less severe than with a host based mirroring solution where all I/O’s would have to be served across remote links.
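To make the read and write paths described above a bit more tangible, here is a minimal Python sketch. It is a toy model under my own assumptions, not how VPLEX is implemented: the names `StorageLeg`, `VirtualVolume` and `remote_reads` are hypothetical, and a dictionary stands in for a disk. It only illustrates the idea that writes go to every available storage system before they are acknowledged, while reads are served from the local cache or local storage first and from the remote storage only where needed.

```python
from typing import Dict, Optional


class StorageLeg:
    """One physical storage system behind the virtual volume (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: Dict[int, bytes] = {}
        self.online = True


class VirtualVolume:
    """Toy view of a stretched volume as seen from one site."""

    def __init__(self, local: StorageLeg, remote: StorageLeg) -> None:
        self.local = local
        self.remote = remote
        self.read_cache: Dict[int, bytes] = {}
        self.remote_reads = 0  # counts reads that had to cross the link

    def write(self, block: int, data: bytes) -> None:
        legs = [leg for leg in (self.local, self.remote) if leg.online]
        if not legs:
            raise OSError("no storage leg available")
        # Write-through: every online leg is updated before we return,
        # so the cache never holds data that is not on disk somewhere.
        for leg in legs:
            leg.blocks[block] = data
        self.read_cache[block] = data

    def read(self, block: int) -> Optional[bytes]:
        # 1. Local read cache, 2. local storage, 3. remote storage.
        if block in self.read_cache:
            return self.read_cache[block]
        source = self.local if self.local.online else self.remote
        if not source.online:
            raise OSError("no storage leg available")
        if source is self.remote:
            self.remote_reads += 1
        data = source.blocks.get(block)
        if data is not None:
            self.read_cache[block] = data
        return data
```

In this sketch, marking the local leg as offline (`local.online = False`) leaves writes working against the remote leg only, and a repeated read of the same block crosses the link just once because the second read is a cache hit.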

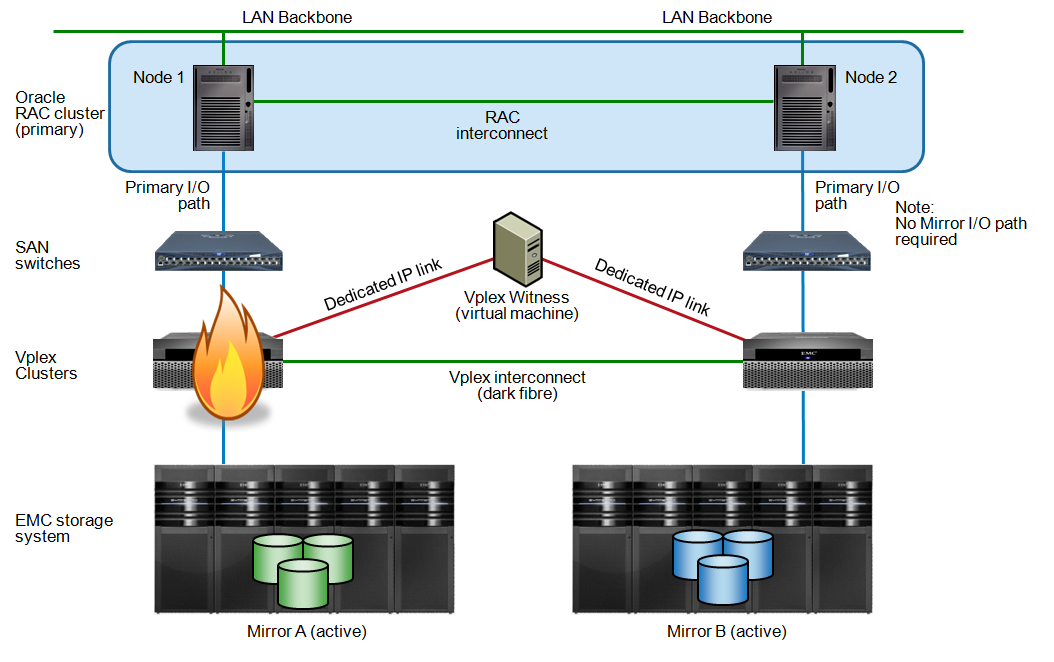

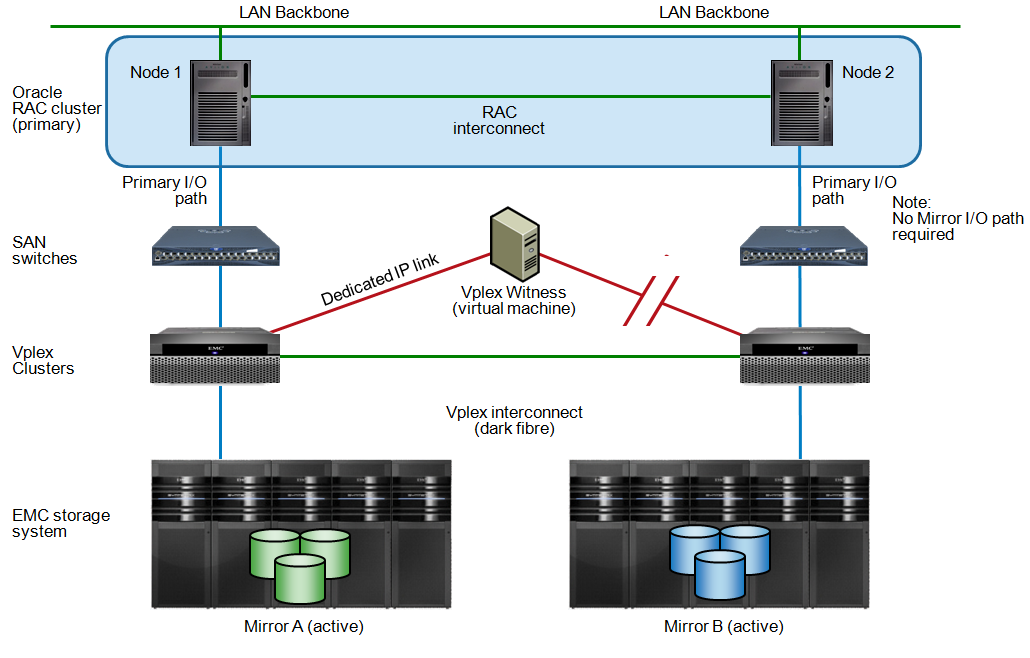

VPLEX cluster failure

If a VPLEX cluster at one of the sites becomes unavailable, the VPLEX witness will notice and let the remaining cluster stay active. A side effect is that the RAC node at the affected site would go down as well – as would be the case when suffering from site failures, storage network problems and so on.

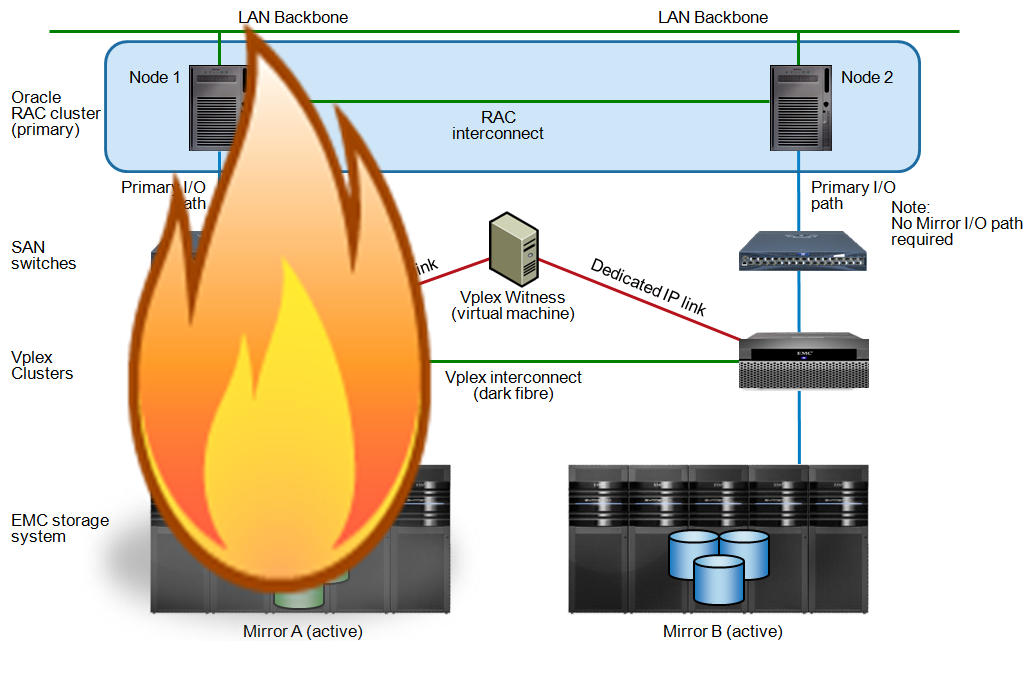

Site failure

Site failures are similar in detection (not in nature) to a cluster failure and the result will be the same – the surviving site will keep running.

Recovery from failures

When the storage system or VPLEX cluster at the failed site comes back online, VPLEX will start incrementally resilvering the storage system until both are in sync again. This assumes, of course, that the failed site still has good equipment that did not go up in flames and did not experience data corruption or loss (otherwise you would have to rebuild everything).

As this involves no host I/O (unlike with ASM redundancy) and resilvering will be a background task with lower priority, the host I/O impact during rebuild will be minimal. If the VPLEX witness crashes or becomes unreachable, the remaining VPLEX clusters can keep running as long as they can communicate.
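The idea behind incremental resilvering can be sketched in a few lines of Python. This is only an illustration under my own assumptions (the class `MirroredVolume` and its methods are hypothetical, and the real VPLEX mechanism is not described here): while one storage leg is offline, the volume remembers which blocks were written, and on recovery only those blocks are copied instead of the whole volume.

```python
from typing import Dict, Set


class MirroredVolume:
    """Toy two-leg mirror that tracks blocks missed by an offline leg."""

    def __init__(self) -> None:
        self.legs: Dict[str, Dict[int, bytes]] = {"A": {}, "B": {}}
        self.online: Dict[str, bool] = {"A": True, "B": True}
        self.dirty: Dict[str, Set[int]] = {"A": set(), "B": set()}

    def fail(self, name: str) -> None:
        """Mark one leg as unavailable."""
        self.online[name] = False

    def write(self, block: int, data: bytes) -> None:
        if not any(self.online.values()):
            raise OSError("no storage leg available")
        for name, blocks in self.legs.items():
            if self.online[name]:
                blocks[block] = data
            else:
                # Remember what the offline leg has missed.
                self.dirty[name].add(block)

    def resilver(self, name: str) -> int:
        """Bring a leg back by copying only the blocks it missed.

        Returns the number of blocks copied.
        """
        source = self.legs["B" if name == "A" else "A"]
        target = self.legs[name]
        stale = self.dirty[name]
        for block in stale:
            target[block] = source[block]
        copied = len(stale)
        stale.clear()
        self.online[name] = True
        return copied
```

If a volume holds 100 blocks and only two of them are written while leg B is down, `resilver("B")` in this sketch copies two blocks rather than 100, which is why the rebuild impact can stay small.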

Interconnect down

If the interconnect between the VPLEX nodes breaks, then either site could continue processing – but not both. The tie breaker must decide which site will be suspended.

Witness link down

If one of the dedicated links to the VPLEX witness would break, everything would continue processing as normal. A second failure however *could* cause the entire database to go down, depending on the situation.
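The failure scenarios above can be summarized in a small decision function. The Python below is a simplified toy model based only on the behaviour described in this post; the names `SiteState` and `clusters_serving_io` and the `preferred` parameter are my own assumptions, and the actual VPLEX rules are more detailed, so refer to the EMC documentation for the real behaviour.

```python
from dataclasses import dataclass
from typing import Set


@dataclass(frozen=True)
class SiteState:
    a_up: bool = True              # VPLEX cluster at site A is running
    b_up: bool = True              # VPLEX cluster at site B is running
    interconnect_up: bool = True   # link between the two clusters
    witness_sees_a: bool = True    # witness link to site A
    witness_sees_b: bool = True    # witness link to site B


def clusters_serving_io(s: SiteState, preferred: str = "A") -> Set[str]:
    """Return the set of clusters allowed to keep serving I/O."""
    up = {name for name, alive in (("A", s.a_up), ("B", s.b_up)) if alive}

    # Healthy pair: the clusters can talk to each other, so the
    # witness is not needed to decide anything.
    if up == {"A", "B"} and s.interconnect_up:
        return {"A", "B"}

    # Otherwise a cluster may only continue if the witness can
    # confirm it; at most one cluster is allowed to win.
    candidates = {
        name for name in up
        if (s.witness_sees_a if name == "A" else s.witness_sees_b)
    }
    if preferred in candidates:
        return {preferred}
    return candidates  # empty, or the single non-preferred cluster
```

In this model a broken interconnect leaves exactly one site running, a single broken witness link changes nothing, and a broken witness link to site A followed by a failure of site B leaves no cluster serving I/O, which is the "second failure" case mentioned above.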

In my previous post I pointed out a number of requirements for storage federation that would be the bare minimum for true stretched clusters. I will try to match these requirements with the VPLEX solution.

As simple as possible – but not simpler

Achieved by hiding storage complexity in VPLEX. The database nodes can connect to the storage as if it was a local shared SAN without replication.

Keeps running, without failover or recovery, in all possible failure scenarios (including rolling disasters)

Check – of course as long as the failures are limited to one location only. For regional disasters you need true Disaster Recovery (Stretched Clusters are a High Availability solution). Be aware that this requires the tie breaker (VPLEX witness) to be in a dedicated location and connected with dedicated network links. These links don’t have to be high-bandwidth, by the way, which keeps cost to a minimum (note I never will say “cheap”).

Still provides redundancy on the surviving location after a site failure

Done by the storage subsystem (preferably EMC) that still has RAID protection, multiple processors, etc. VPLEX itself is redundant because it is always deployed as a cluster in itself.

Is protected against split brain issues

In any case where the two VPLEX clusters cannot communicate, one of the two has to suspend I/O immediately and shut down if the issue is not resolved. This way you prevent split brain – and even better – you’re not depending on the database clusterware anymore!

Is protected against silent (or screaming) data corruptions

EMC storage goes beyond standard storage architectures in preventing data corruption, as I’ve discussed in earlier posts. VPLEX prevents data corruptions because it is based on the same architecture as EMC VMAX (the high end storage) and does not allow write caching (ever). All writes have to be acknowledged in all cases before continuing.

Keeps performance impact to a minimum

Yes, by offloading replication from the database server to the storage infrastructure. And by adding read cache, that keeps working even if the local storage is unavailable. And finally, by reducing performance impact when rebuilding data after a failure situation.

Is capable of recovering to normal mode of operation without serious impact on service levels

As in the previous statement. VPLEX can do differential re-sync of data (even if data was modified on both sites).

Allows failure testing without downtime

Can be done by disconnecting storage on one location and reconnecting it to a test host. Today this will probably not be so straightforward. It should be better in future versions.

Then the additional requirements:

Does not restrict existing functionality (such as snapshots, backups, etc)

This is not (yet) implemented by VPLEX (it probably will be in future versions). Currently you can use the storage replication features (EMC TimeFinder or RecoverPoint) to provide this. Caveat: to do that you need to configure VPLEX in such a way that it does not do any volume management (such as creating RAID, or concatenating volumes, etc). The storage volumes have to be provided in a 1:1 fashion from storage to the server. EMC Engineering do not tell me everything but I expect this restriction to be solved in the future (all disclaimers apply). If you need this, let us know and we will push EMC Engineering to move priorities.

Allows failure testing without compromising continuous availability

This also depends on snapshot capabilities. Again, if you need this (and I strongly recommend that you do) push us to prioritize this feature.

Is a generic solution for multiple operating systems, databases and/or applications

Check. It does not matter if you implement this with Oracle or something else. Even better, if you have multiple databases, they will all fail over (or keep running) at the same site (instead of each cluster deciding where it will survive and therefore compromising an app server at site A talking to a database server at site B). Also it does not completely depend on server clusterware (which is notoriously unstable, error-prone and tricky to set up).

Allows for business application landscape consistency

If a group of applications and databases share a consistency group, then the whole group fails over. This prevents business level “split brain”. By the way, this is a matter that is hard to understand. Let me know if you want to find out more about this.

So, now that we have a continuously available solution for the database, consider the middleware and application stack (what good is a database if there is no application that can use it?)

This concludes my series on Stretched Clustering with Oracle. If you find it interesting and you consider such a setup in your company, let me know and I will provide you with more information.
