One of my missions is to help customers save money (Dirty Cache Cash). So, looking at the average enterprise application environment, I frequently ask them where they spend most of their IT budget. Is it servers? Networks? Middleware? Applications?

Turns out that if you look at the operating cost of an Oracle database application, a very big portion of the TCO is in database licenses. Note that I focus on Oracle (that’s my job) but for other databases the cost ratio might be similar. Or not. But it makes sense to look at Oracle as that is the most common platform for mission-critical applications. So let’s look at a database environment and forget about the application for now.

Let’s say that 50% of the operating cost of a database server is spent on Oracle licensing and maintenance (and I guess that’s not that far off). Now if we can help save 10% on licensing (for example, by providing fast and efficient infrastructure), would that justify more expensive, but faster and more efficient infrastructure? I guess so.

So let’s focus on the database license cost. I won’t go into too much detail, but many Oracle customers license their database by CPU using Oracle Enterprise Edition. The more CPUs (actually, CPU cores), the more licenses you need. Database options add to that cost (if you have a bunch of options, then the options might be more expensive than the base Enterprise Edition license). And don’t forget the yearly support and maintenance that adds to this – in 3 to 5 years your maintenance might have cost you as much as the initial purchase.

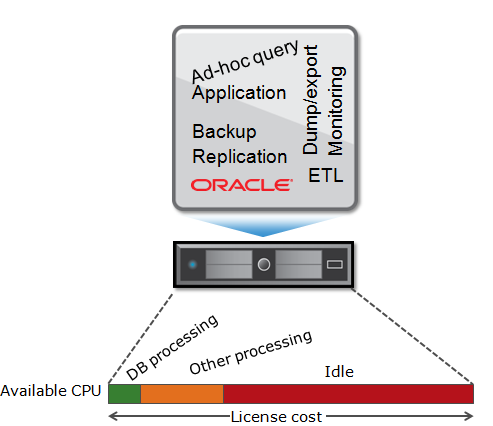

Now databases are used for sophisticated processing of business transactions – so if the investment in the software is put to use and not wasted, you won’t hear me complain. But the reality is very different.

My customers’ database servers tend to average between 10% and 20% CPU utilization. Yes – sometimes there are peaks and sometimes the peak utilization is 100%. But you pay for licenses all the time, whatever the utilization of the server might be.

So instead of looking at the total cost you also could calculate the cost per (business) database transaction. The lower the cost per transaction the better of course. Now on a licensed database server that does no business transactions at all, the cost per transaction is… infinite!

The lowest cost per transaction for that server is when the server is working hard, 24 hours per day, 365 days per year at 100% and spends all the CPU cycles on database transaction processing.
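As a rough illustration of the cost-per-transaction idea, here is a minimal Python sketch. The annual cost and peak throughput figures are made-up placeholders (not real Oracle prices), and the model assumes throughput scales linearly with the CPU time spent on business transactions.

```python
HOURS_PER_YEAR = 24 * 365


def cost_per_transaction(annual_cost, peak_tx_per_hour, utilization):
    """Annual server cost divided by the transactions processed in a year.

    utilization is the fraction (0.0 to 1.0) of CPU time spent on business
    transactions; throughput is assumed to scale linearly with it.
    """
    transactions = peak_tx_per_hour * HOURS_PER_YEAR * utilization
    if transactions == 0:
        return float("inf")  # a licensed server that does no work at all
    return annual_cost / transactions


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    for utilization in (0.0, 0.1, 0.2, 1.0):
        cost = cost_per_transaction(500_000, 100_000, utilization)
        print(f"{utilization:>4.0%} busy: {cost:.6f} per transaction")
```

Under these assumptions, a server that is busy 20% of the time pays five times as much per transaction as one that is busy all the time.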

Say the average CPU utilization of a specific database server is 20%. Does that mean your server is processing business transactions in the database 20% of the time? Often not. Consider a server running some kind of operating system, with database software installed and running. What else consumes CPU cycles on the server? To name a few:

- Host based replication (for example, Oracle Data Guard, ASM mirroring, LVM redundancy, and the like). The overhead is not huge but given the high cost of licenses even 2% overhead is too large to ignore

- Backup. A full backup needs a significant portion of CPU cycles (and I/O on network and storage, for that matter). It does not add anything in terms of business value (unless you need the backup when data is lost, of course)

- Ad hoc queries. You might consider these “business value” transactions, but the reality is that those queries are often neither tuned nor sophisticated, so their inefficiency causes CPU spikes and possibly things like full table scans (memory and I/O consumption that causes other processes to slow down, etc.)

- Some people run the application processes on the database server. There might be good reasons to do so; for example the IPC (inter-process communication) does not need networks but can be done in-memory which speeds up the app. But the app server now consumes expensive CPU cycles reducing available resources for the database

- Database Dumps/Exports (to fill test databases, for example). No value-add for the business (at least not direct)

- ETL (Extract, Transform, Load) for loading the Data Warehouse. This can add significant CPU load that again does not contribute directly to business processing

- Monitoring agents (such as Tivoli, Unicenter, BMC and the like). Maybe just a few percent, but remember even one percent is expensive

- Etc….

So a server indicating 20% utilization might only spend 10% or even less on useful database processing; the rest is wasted idling or spent on non-database processing tasks.
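A small sketch of that subtraction, in Python. The per-item overhead numbers are invented for illustration (the text above only says that each can be a few percent); only the 20% measured utilization comes from the example.

```python
def useful_utilization(measured_pct, overhead_pct):
    """Subtract non-database CPU consumers from the measured utilization."""
    return measured_pct - sum(overhead_pct.values())


# Hypothetical breakdown, in percentage points of total CPU capacity.
overhead = {
    "host-based replication": 2,
    "backup": 3,
    "ad hoc queries": 2,
    "exports and ETL": 2,
    "monitoring agents": 1,
}

useful = useful_utilization(20, overhead)
print(f"useful work: {useful}% of licensed CPU capacity")
print(f"license cost per useful CPU cycle: {100 / useful:.0f}x the ideal")
```

With these assumed numbers, half of the measured 20% is overhead, so every useful CPU cycle carries ten times the license cost it would on a fully used server.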

Now say you have a database server expensively licensed for 100% of the CPUs and wasting 90% on idling and other stuff. How did we get here?

Well, for starters, sizing a new server for production workloads is extremely hard, if not impossible. So either people don’t do sizings at all but use gut feelings, or they try sizing but the accuracy is so lousy that whatever CPU requirement comes out of the sizing is doubled “just in case”.

And the sizing must be done based on peak loads, not averages. If the peak load is year-end processing that takes 3 days per year and the rest of the year the system requires much less horsepower, then so be it.

But that’s old school. Today, with ever-increasing demand for more and bigger databases, with database workload that grows faster than Moore’s law can make up for, and with the drive for more efficiency in today’s economic climate, something has to be done.

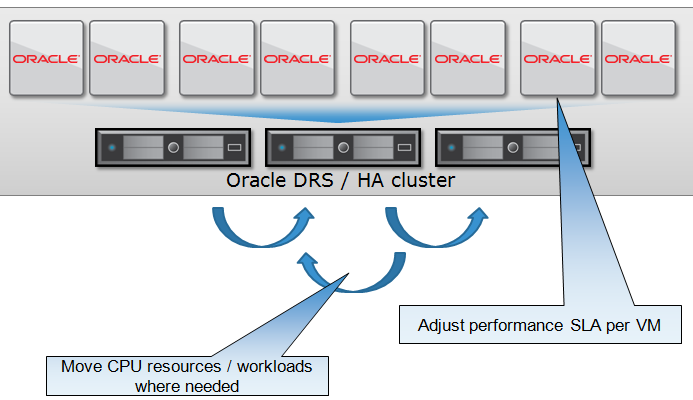

Now the average data center does not have just one database server. Even the smaller companies run a bunch of them. Even a single enterprise application on a single database in production is often supported by testing, development, acceptance, training, staging, reporting or firefighting environments, to name a few. And you typically do not have just one database application. So as an example, let’s look at a small IT environment with 8 database servers, and let’s assume these servers all have equal hardware specs (so they have the same memory, number of cores, OS, CPU speeds etc). Each server runs a single database.

Given that we sized for peak loads, do you think the servers all peak at the same time? I don’t think so. A development system peaks a few times during working days. Assuming no overtime of the developers, that’s 5 x 8 hours = 40 hours (a week has 168), so even if the server ran at 100% during working hours (which is very unlikely), the average utilization is still less than 25%. And then you have holiday periods and other off-days.
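The arithmetic for the development server, as a tiny Python sketch. It assumes the server is completely idle outside working hours; the function name and the `load_while_busy` parameter are my own, for illustration.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def weekly_average_utilization(busy_hours, load_while_busy=1.0):
    """Average utilization over a week if the server idles outside busy hours."""
    return busy_hours * load_while_busy / HOURS_PER_WEEK


# 5 working days of 8 hours at full load
print(f"{weekly_average_utilization(5 * 8):.1%}")  # 23.8%
```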

The reporting server, would that one run at peak all the time? Guess not. Probably a few times a week – maybe from 12am to 5am for running the daily reports. The training server? Needs no explanation. Acceptance? Only active just before going live with new application versions or modules (a few weeks a year?). And on and on. So servers don’t all peak at the same time, and some servers have much lower utilization than the heavy production machine. So if you add up the current load across the landscape at every point in time, take the highest of those totals, and divide by the number of servers, what is the overall utilization of the landscape? Probably still less than 30%. What I mean to say is that one third of the total CPU power is enough for handling the peak load of the whole landscape at any given time. The problem is that you cannot remove two thirds of the cores on all servers, because there is no way to use the CPU on server 8 for production workload on server 2 when you need it.
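Here is a minimal Python sketch of that landscape calculation: add up the load of all servers per hour, take the highest total, and divide by the number of servers. The hourly load profiles are entirely invented for illustration; in practice you would feed in measured utilization data.

```python
def daily_profile(busy_start, busy_end, busy_load, idle_load):
    """24 hourly CPU loads (percent of one server) for a simple two-level day."""
    return [busy_load if busy_start <= hour < busy_end else idle_load
            for hour in range(24)]


# Hypothetical landscape of 8 identical servers; all figures are invented.
profiles = {
    "production":   daily_profile(9, 17, 70, 20),
    "reporting":    daily_profile(0, 5, 90, 5),   # nightly reports
    "development":  daily_profile(9, 17, 40, 2),
    "test":         daily_profile(9, 17, 30, 2),
    "acceptance":   daily_profile(9, 17, 10, 2),
    "training":     daily_profile(9, 17, 20, 1),
    "staging":      daily_profile(0, 24, 5, 5),
    "firefighting": daily_profile(0, 24, 5, 5),
}


def landscape_peak_pct(profiles):
    """Highest combined load in any hour, as a percentage of total capacity."""
    combined = [sum(hourly) for hourly in zip(*profiles.values())]
    return max(combined) / len(profiles)


print(f"landscape peak: {landscape_peak_pct(profiles):.1f}% of total CPU")
```

With these made-up profiles the busiest hour uses about 23% of the combined CPU capacity, even though the reporting server alone hits 90% at night.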

Unless you virtualize the environment. You put every database on a separate virtual machine and share workloads on the virtual farm. So let’s say that databases 1, 2 and 3 share server 1, databases 4, 5 and 6 share server 2, and databases 7 and 8 run on server 3. Now database 2 (our production VM) suddenly needs more horsepower. What methods do you have to make that happen? You can:

- Migrate database 1 and 3 (online) to another physical machine using live migration, so that database 2 has the machine all to itself

- Manage SLAs using CPU shares so that database 2 gets more CPU slices than the others on the same machine

- Add a recent server with faster CPUs (there’s Moore’s Law again) to the farm, migrate the VM to the new machine and enjoy the performance increase!
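To put a number on the consolidation example, a short Python sketch. The core count per server is a hypothetical value (the text only says all servers are identical), and it assumes licenses are counted per physical core on the hosts that run databases; actual licensing terms for virtualized environments vary.

```python
CORES_PER_SERVER = 16  # hypothetical; the text only says all servers are equal

# Placement from the example above: databases 1-3, 4-6 and 7-8 share a host.
placement = {
    "server 1": [1, 2, 3],
    "server 2": [4, 5, 6],
    "server 3": [7, 8],
}

databases = sum(len(dbs) for dbs in placement.values())
cores_before = databases * CORES_PER_SERVER       # one physical server per database
cores_after = len(placement) * CORES_PER_SERVER   # only the virtualization hosts
reduction = 1 - cores_after / cores_before

print(f"licensed cores: {cores_before} -> {cores_after} ({reduction:.1%} fewer)")
```

Whatever the core count per server, going from 8 physical servers to 3 hosts cuts the number of cores to license by 62.5% under this assumption.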

Frankly, I don’t mind which virtualization strategy you plan to use to achieve this, as long as you get the maximum utilization on the CPUs for real database processing. In the real world there are many subtle differences between virtualization products, and discussing them is beyond the scope of this blog post. But free virtualization products might cost you more in the end than the leading product in the market today, just because the minor savings in virtualization licenses end up in limited consolidation ratios of your databases – requiring more servers with larger numbers of cores.

Do the math 😉
