In previous posts I have focused on the technical side of running business applications (except my last post about the Joint Escalation Center). So let’s teleport to another level and have a look at business drivers.

What happens if you are an IT architect for an organization, and you ask your business people (your internal customers) how much data loss they can tolerate in case of a disaster? I bet the answer is always the same:

“zero!”

This relates to what is known in the industry as Recovery Point Objective (RPO).

Ask them how much downtime they can tolerate in case something bad happens. Again, the consistent answer:

“none!”

This corresponds to the Recovery Time Objective (RTO).

Now if you are in “Jukebox mode” (business asks, you provide, no questions asked), you try to give them what they ask for (RPO = zero, RTO = zero). This makes many IT vendors and communication service providers happy, because it means you have to run expensive clustering software and synchronous data mirroring to a D/R site over pricey data connections.

If you are in “Consultative” mode, you try to figure out what the business really wants, not just what they ask for. You also ask yourself whether their request is feasible at all and, if so, what it costs to achieve these service levels.

Physical vs Logical disasters

First of all, we need to distinguish RPO/RTO for physical versus logical disasters, and data unavailability versus data loss. A few examples:

- A data center power failure leads to data unavailability. The data is still there but you cannot get access to it. This is a physical disaster leading to unavailability.

- A fire in the data center burns the systems. The data is damaged and, whatever you do, it will not become available again. You have to rely on some sort of copy of that data stored elsewhere to recover. This is a physical disaster leading to data loss.

- A user causing accidental deletion of transactions or files. This is a logical disaster leading to data loss.

- A software bug in clustering software causes a complete cluster to go down. This is a logical disaster leading to data unavailability.

Logical disasters don’t require additional data centers with additional hardware to recover the data. If you make frequent copies of the data (commonly known as a “backup” strategy, although more innovative and elegant methods are becoming available), you can recover locally.

Physical disasters do require a second (standby) data center with the appropriate hardware to recover, at least if you cannot afford to wait many hours, or even days, to get back online. The standby data center needs local access to a valid, recent copy of the data, because the hardware alone is not sufficient to recover.

Let’s focus on physical disasters for a moment.

Zero data loss, zero downtime

Regarding RTO, some recent innovations (true active/active stretched clusters) make it possible to survive physical disasters without having to restart or recover databases. But this requires expensive clustering software, some additional hardware and low-latency connections. Today it also does not always cover the application servers, so there might still be some business impact. If the business can live with a few minutes of downtime, then active/passive clustering might be much more cost-effective (less “dirty cash” spent). However, that requires a consultative discussion with the application owners to figure out the business impact of a few minutes of downtime.

Regarding RPO, the consensus has always been that synchronous data mirroring (or replication) of some sort covers all your needs. In fact, EMC was the very first to offer synchronous storage mirroring, with EMC SRDF, around 1995. At the storage level, this guarantees “zero data loss” because every write I/O to the primary (source) storage system is only acknowledged once the data has been delivered to, and confirmed by, the standby (target) system. As long as the data link is functional, the source and target systems are always 100% in sync. Any transaction written by the primary application is therefore guaranteed to be written to the target (D/R) site as well. If a (physical) disaster strikes, the database will abort (crash) and has to be restarted at the standby site using the mirrored dataset. For non-database data, the file systems (holding flat files) will also have to be mounted at the standby site. All data is presented exactly as it was just before the disaster struck.
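To make the acknowledgment rule concrete, here is a toy model of synchronous mirroring in Python. The class names (`StorageArray`, `SyncMirror`) are hypothetical, and this is not how SRDF is implemented. It only shows the ordering described above: the host receives its acknowledgment only after the target has stored the write.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class StorageArray:
    """Toy storage array (hypothetical): block address -> data."""
    name: str
    blocks: Dict[int, bytes] = field(default_factory=dict)

    def store(self, addr: int, data: bytes) -> None:
        self.blocks[addr] = data


class SyncMirror:
    """Hypothetical synchronous mirror pair, for illustration only."""

    def __init__(self) -> None:
        self.source = StorageArray("source")
        self.target = StorageArray("target")

    def write(self, addr: int, data: bytes) -> bool:
        self.source.store(addr, data)
        # The remote copy must be confirmed before the host is acknowledged.
        self.target.store(addr, data)
        return True  # acknowledgment returned to the host


mirror = SyncMirror()
acknowledged = []
for addr in range(5):
    if mirror.write(addr, f"txn-{addr}".encode()):
        acknowledged.append(addr)

# Disaster: the source array is lost. Every acknowledged write is on the target.
assert all(addr in mirror.target.blocks for addr in acknowledged)
```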

So much for the theory. Let’s see how reality catches up and how Murphy’s law messes up our ideal world.

Consistency, Commits & Rollbacks

First of all, relational databases (based on ACID principles) use a concept of apply and commit (and sometimes rollback) for transactions. An application may create or update a transaction record but not commit the transaction for a while. If the database is aborted (normally or abnormally) before the application commits the transaction, the database has to “roll back” this transaction to its old state to maintain consistency. This means that at the database level, zero data loss only applies to committed transactions. Any uncommitted transaction will be lost (due to rollback). This concept has serious implications for applications. If you want real end-to-end zero data loss for your database application, the application code has to be written so that every transaction that must not get lost is committed immediately.
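A minimal sketch using Python's built-in `sqlite3` module shows the effect: a committed row survives, while an uncommitted one is rolled back. Here, closing the connection without calling `commit()` stands in for the database abort. The table name and values are made up for illustration.

```python
import os
import sqlite3
import tempfile


def rows_after_abort(db_path: str) -> int:
    conn = sqlite3.connect(db_path)
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.commit()

    # Transaction 1: committed immediately, so it survives the abort.
    conn.execute("INSERT INTO orders (amount) VALUES (100.0)")
    conn.commit()

    # Transaction 2: written but never committed.
    conn.execute("INSERT INTO orders (amount) VALUES (250.0)")
    # Simulated abort: closing without commit() rolls the open transaction back.
    conn.close()

    # "Restart" the database, as the standby site would after a failover.
    conn = sqlite3.connect(db_path)
    (count,) = conn.execute("SELECT COUNT(*) FROM orders").fetchone()
    conn.close()
    return count


with tempfile.TemporaryDirectory() as tmp:
    print(rows_after_abort(os.path.join(tmp, "orders.db")))  # 1
```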

If an application violates this law (due to performance optimizations, or maybe developer laziness/unawareness) then zero data loss is not 100% guaranteed.

So in theory, with perfectly written application code, this should not be an issue. Unfortunately, I haven’t spotted such an ideal application in the wild during my entire IT career.

Some database applications keep transactions they consider committed in application server memory. Some keep transactions open (uncommitted) for long periods of time. Some applications keep transactional data outside the database, in flat binary files on some file system. That’s just something we have to live with. Oh, by the way, buying your business applications from well-known application vendors instead of developing your own does not guarantee anything in this context…

So be prepared: even with zero data loss (synchronous) mirroring, you might lose some data after a disaster. That said, most enterprise RDBMSs make sure that the data is consistent after the failover.

Zero data loss on file systems

Now let’s talk about an even bigger myth: file systems. In the old days, file systems needed scanning for consistency problems after a crash (remember Windows CHKDSK, CHKNTFS, UNIX fsck and “lost+found”, and those checks running for hours and hours after a crash?). To overcome this, many modern file systems have journaling capabilities that behave very much like database logging. Whenever the file system metadata changes, the change is written to the file system journal. If the file system has to be recovered after a crash, it therefore does not have to scan the whole data set. Instead, it quickly re-applies the changes from the journal, and within a few seconds you’re back in business. So it may seem as if journaled file systems prevent data loss. The reality is much different…

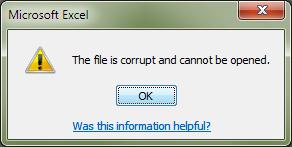

Consider a file server used for saving large Office documents. As an end user, you open a large (e.g. 10 MB) PowerPoint document and start editing. Once done, you hit “save” to commit your changes to the file server and the underlying file system. PowerPoint now starts to overwrite the complete document, but halfway through, at 5 MB, disaster strikes and a fail-over is triggered. The standby server mounts the mirror copy of the file system and finds that it was not cleanly unmounted. It then replays the journal to make the file system consistent again. It finds the new PowerPoint file size, modification time and other metadata, and re-applies those to the recovered file system. But the 10 MB file now holds 5 MB of the half-saved new presentation, followed by 5 MB of the old file that was being overwritten.

What do you think will happen if you try to open that PowerPoint presentation again?
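The half-saved file is easy to reproduce on a small scale. This Python sketch overwrites a file in place and simulates the crash by simply stopping halfway. Ten bytes stand in for the 10 MB presentation, and the file name is hypothetical. Afterwards, the file size is still 10 bytes, so nothing in the metadata hints at the damage.

```python
import os
import tempfile

OLD = b"A" * 10  # previously saved version (stands in for 10 MB)
NEW = b"B" * 10  # edited version the user is saving


def interrupted_overwrite(path: str, new: bytes, crash_after: int) -> None:
    """Overwrite a file in place, but stop after crash_after bytes."""
    with open(path, "r+b") as f:
        f.write(new[:crash_after])
        f.flush()
        os.fsync(f.fileno())  # the first half really reached the disk
        # Simulated disaster: the remaining bytes are never written.


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "presentation.pptx")  # hypothetical file name
    with open(path, "wb") as f:
        f.write(OLD)
    interrupted_overwrite(path, NEW, crash_after=len(NEW) // 2)
    size = os.path.getsize(path)
    with open(path, "rb") as f:
        recovered = f.read()

print(size, recovered)  # 10 b'BBBBBAAAAA'
```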

In an attempt to work around this problem, some file systems offer “data in log” mount options. With these options, not just the metadata (access/modification time, file size, owner/group, etc.) but also the data itself is written to the journal before it is written to the real file. But this does not prevent files from being half-written (unless the journal is only flushed to the real file after the application closes the file handle). The performance overhead of this mount option is huge, and hardly anyone uses it. To add to the confusion, some really modern file systems seem to overcome this problem because they never overwrite data in place (like Sun ZFS, which uses copy-on-write). You still risk partially written files, though. Don’t fall into this (marketing) trap.
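A toy model shows why data journaling alone does not help. The helper names are hypothetical, and this is not any real file system's journal format. If each application `write()` becomes its own journal transaction, replaying the journal after a crash faithfully restores a half-saved file. Only when the whole save is committed as one unit, for example when the file handle is closed, does replay yield either the complete old version or the complete new version.

```python
from typing import List, Tuple

Write = Tuple[int, bytes]   # (offset, data) of one application write()
Transaction = List[Write]   # writes committed to the journal together


def replay(old: bytes, journal: List[Transaction]) -> bytes:
    """Apply every committed journal transaction to the old file image."""
    buf = bytearray(old)
    for txn in journal:
        for offset, data in txn:
            buf[offset:offset + len(data)] = data
    return bytes(buf)


OLD, NEW = b"A" * 10, b"B" * 10
# The application saves the document with five 2-byte write() calls.
writes = [(i, NEW[i:i + 2]) for i in range(0, len(NEW), 2)]

# One journal transaction per write(); the crash hits after three of them.
per_write = [[w] for w in writes][:3]
print(replay(OLD, per_write))  # b'BBBBBBAAAA': half new, half old

# One transaction for the whole save, committed only on close():
print(replay(OLD, []))         # crash before close: b'AAAAAAAAAA' (old version)
print(replay(OLD, [writes]))   # crash after close:  b'BBBBBBBBBB' (new version)
```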

Local vs Remote recovery

Now to clear up another myth: these data corruption and transaction loss issues are caused by the application and system design. If the system is designed end-to-end to survive power failures without data loss, then EMC replication tools (SRDF, MirrorView, RecoverPoint and the like) can achieve zero data loss as well, but now over distance. Sometimes I hear the claim that even with EMC mirroring you might not get consistency after a fail-over. But if that’s the case, then the same is true for server crashes and power failures. The root cause of the problem is then in the application stack, not in the replication method. If your application can survive a server crash without data corruption, it does not matter whether you recover locally or at the D/R site (provided that you use consistency technology for replication).

So we have come to the conclusion that real zero data loss is (mostly) a myth, unless your application stack is “zero data loss compliant” (I don’t have a better term for this) end-to-end, which is rarely the case.

Disaster in theory versus reality

If you read white papers or marketing materials on D/R products, you will often see the benefits of such technology explained by assuming one huge disaster. The classic jumbo jet crashing into your data center. The gas explosion. The massive power outage. In all of these cases, the assumption is often that you go from a fully functional data center to one completely in ashes within one microsecond. The reality is much different. At EMC we call these real disasters “Rolling Disasters”: a problem starts small and becomes more and more serious over a period of time (minutes to hours). To make my point, consider a fire that starts small in a corner of the data center. The fire grows and burns some of the WAN links used for D/R mirroring to the other data center. Replication is suspended, but typically the policy allows production to continue processing. Five minutes later the fire reaches the servers and the application goes down. (I won’t go as far as pointing out that a server running hot might corrupt data before finally giving up, due to overheated CPUs, RAM, motherboards and I/O adapters, and melting cables.) So in spite of your expensive zero data loss mirroring, you now have a primary data center with more recent data than the (consistent) copy at your D/R site. You now have to fail over and accept a few minutes (or more) of transaction loss… (Note that at EMC we have some innovative solutions to work around this problem, but that’s beyond the scope of this post.)
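The size of the gap in a rolling disaster is simple arithmetic. Everything written between the replication link failing and the primary going down exists only at the primary site. The sketch below assumes, as in the example, that production continues unreplicated once the link is down. The transaction rate in the usage line is an invented figure.

```python
def rolling_disaster_loss(link_down_at_min: float, primary_down_at_min: float,
                          txn_per_min: float) -> float:
    """Transactions missing at the D/R site after a rolling disaster.

    Assumes synchronous mirroring until the link fails, after which the
    policy lets production continue without replication.
    """
    if primary_down_at_min <= link_down_at_min:
        return 0.0  # primary failed first: the mirror was still in sync
    return float((primary_down_at_min - link_down_at_min) * txn_per_min)


# Fire cuts the WAN links at minute 10 and reaches the servers at minute 15,
# with a hypothetical load of 1,000 transactions per minute.
print(rolling_disaster_loss(10, 15, 1000))  # 5000.0
```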

By the way, if you are able to extinguish the fire and repair the servers (assuming the storage is still there), how are you going to fail back? Data has now been updated independently at both locations. Many replication tools will therefore fail to perform an incremental re-sync or, worse, will cause silent data corruption when failing back…
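A hypothetical block-level model shows why the re-sync is tricky. Assume each site tracks which blocks it wrote since the sites diverged. These change sets are illustrative, not any specific product's change tracking. Failing back from the D/R copy must overwrite the union of both change sets. A tool that only copies the blocks changed at the D/R site leaves the primary's unreplicated writes in place and silently mixes two timelines.

```python
from typing import Dict, Set

Blocks = Dict[int, str]  # hypothetical block number -> contents


def fail_back(primary: Blocks, dr: Blocks, changed_on_primary: Set[int],
              changed_on_dr: Set[int], track_both_sides: bool) -> Blocks:
    """Incremental re-sync from the D/R copy back to the primary (toy model)."""
    to_copy = set(changed_on_dr)
    if track_both_sides:
        to_copy |= changed_on_primary  # also undo the primary's orphaned writes
    resynced = dict(primary)
    for block in to_copy:
        resynced[block] = dr[block]
    return resynced


# State when the link failed: both sites identical.
primary = {0: "a0", 1: "b0", 2: "c0"}
dr = dict(primary)
primary[1] = "b1"  # written at the primary after the link went down
dr[2] = "c2"       # written at the D/R site after the failover

naive = fail_back(primary, dr, {1}, {2}, track_both_sides=False)
safe = fail_back(primary, dr, {1}, {2}, track_both_sides=True)
print(naive == dr, safe == dr)  # False True
```

Even the safe variant discards the primary's write to block 1 (`"b1"`). Recovering those transactions would need reconciliation at the application level.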

Synchronous or Asynchronous

So why do we still pursue synchronous mirroring for applications, especially when synchronous writes cause a performance penalty (i.e. the write has to be sent to the remote D/R system, and the acknowledgment has to come back before we can proceed with the next transaction)?
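A back-of-the-envelope calculation shows why the penalty grows with distance. Light in optical fiber travels at roughly 200,000 km/s, or about 200 km per millisecond, and a synchronous write needs at least one round trip. The sketch ignores switch, protocol and array overhead, all of which only add to the total. The 0.5 ms local write time in the loop is an assumed figure.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def sync_penalty_ms(distance_km: float) -> float:
    """Minimum added latency per synchronous write: one round trip."""
    return 2 * distance_km / FIBER_KM_PER_MS


def max_dependent_writes_per_s(local_write_ms: float, distance_km: float) -> float:
    """Ceiling for a single stream of writes that each wait for the previous
    acknowledgment, such as a database log writer."""
    return 1000.0 / (local_write_ms + sync_penalty_ms(distance_km))


for km in (10, 100, 500):
    print(f"{km:>4} km: +{sync_penalty_ms(km):.2f} ms per write, "
          f"at most {max_dependent_writes_per_s(0.5, km):,.0f} dependent writes/s")
```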

My take is that this is mostly a legacy issue. EMC was the first with synchronous mirroring, so it became the de facto standard for many organizations. Other vendors caught up with EMC and partly overtook it by offering asynchronous replication. With async replication, you don’t wait for the acknowledgment and just proceed with the next transaction, so in theory there is no longer any performance impact. The problem was that the order in which write I/Os are processed is critical for data consistency. In short: if you change (randomize) the order of writes, the data at the D/R site is completely worthless and not valid for business recovery. To maintain transactional consistency, competing vendors had to choose between two approaches. One was strict ordering of write I/Os, which made the replication process single-threaded and caused huge bottlenecks. The other was labeling every single I/O with a timestamp or sequence ID, which caused massive overhead on the replication links. Both methods turned out to be too impractical for high-performance environments.
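The following toy model illustrates why ordering matters. The names are hypothetical, and this is not a real replication protocol. The primary issues three dependent writes: a data block, the inode that points to it, and a directory entry that names the inode. Suppose write 3 overtakes write 2 on the link and the link then fails. Applying whatever arrived produces a directory entry that points at a stale inode. Applying only the gap-free prefix of sequence IDs leaves an older but consistent state.

```python
from typing import Dict, List, Tuple

Write = Tuple[int, str, str]  # (sequence id, block name, data); names are hypothetical


def apply_as_arrived(arrived: List[Write]) -> Dict[str, str]:
    """No ordering guarantee: apply every write that made it across."""
    return {block: data for _, block, data in arrived}


def apply_in_sequence(arrived: List[Write]) -> Dict[str, str]:
    """Apply only the gap-free prefix of sequence ids; hold back the rest."""
    pending = {seq: (block, data) for seq, block, data in arrived}
    state: Dict[str, str] = {}
    next_seq = 1
    while next_seq in pending:
        block, data = pending[next_seq]
        state[block] = data
        next_seq += 1
    return state


issued = [
    (1, "block-42", "report v2 contents"),
    (2, "inode-7", "data at block-42"),
    (3, "dir/report", "inode-7"),
]
arrived = [issued[0], issued[2]]  # write 2 was still in flight when the link failed

print(apply_as_arrived(arrived))   # directory entry exists, inode-7 still old: corrupt
print(apply_in_sequence(arrived))  # only the unreferenced data block: consistent
```

The second function corresponds to the sequence ID approach described above. Its cost is that every write carries an extra label, and a single gap stalls everything queued behind it.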

Some asynchronous replication implementations did not guarantee I/O ordering, and these were basically useless for a valid D/R strategy. The existence of such methods probably caused the myth that asynchronous replication is no good at all.

All this is why EMC waited a long time before offering an asynchronous mirroring option (SRDF/A). We finally made it feasible to achieve consistent asynchronous mirroring without performance impact, basically by using memory-based apply/commit buffers and some sort of checkpointing (I’ll skip the details, but just assume it works as designed).

The worst-case RPO that we can achieve with asynchronous replication is twice the cycle time of the checkpoints. So if you set the cycle time to 1 minute, your RPO will be 2 minutes (worst case). This means that after a fail-over you lose up to 2 minutes’ worth of transactions. The data is still 100% valid and consistent. The RTO (fail-over/recovery time) does not change much. So asynchronous mirroring is not bad for many applications. Only a few years back, we relied on Sneakernet and tape restores, which typically drove the RPO up to 24 hours or more, and that was only if the restore succeeded, which wasn’t always the case.
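The factor of two can be explained with a simplified cycle model. This model loosely follows the checkpoint description above and does not reflect SRDF/A internals. Writes captured during cycle k are shipped during cycle k + 1 and applied on the target when that cycle ends. Assuming transmission always fits within one cycle, the target lags between one and two cycles behind.

```python
import math


def recovery_point(t: float, cycle: float) -> float:
    """Latest write time guaranteed to be applied on the target at time t.

    Toy model: cycle k spans [k * cycle, (k + 1) * cycle) and its writes are
    applied at (k + 2) * cycle, so the last applied cycle is the one before
    the previous one.
    """
    current = math.floor(t / cycle)          # index of the cycle in progress
    return max(0.0, (current - 1) * cycle)   # end of the last applied cycle


def data_loss_window(t: float, cycle: float) -> float:
    """Seconds of writes lost if the primary fails at time t."""
    return t - recovery_point(t, cycle)


CYCLE_S = 60.0
worst = max(data_loss_window(t / 10, CYCLE_S) for t in range(1, 6000))
print(f"worst case over 10 minutes: {worst:.1f} s (2 x cycle = {2 * CYCLE_S:.0f} s)")
```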

Business requirements

So if (EMC) Asynchronous replication guarantees transactional consistency, and only causes RPO to go from zero (theoretically) to a few minutes, and it reduces application performance overhead to zero, then why are most organizations still pursuing 100% synchronous replication?

IMHO, for over 95% of all business applications, asynchronous mirroring is more than sufficient. The exceptions are environments where a second’s worth of transactions represents a large business value. This is mostly the case for financial processing, where a single transaction can represent millions of dollars (or any other currency). However, I can think of a few non-financial applications where the value of transactions is very high as well.

Back to the business people asking for the myth of zero data loss. Ask them what the business impact is of losing the last 5 minutes of data. Can they express that as a monetary value? Although this is hard, you can at least attempt an approximation based on some assumptions.

Now, if the cost of synchronous replication (the performance impact, the more expensive replication links, the extra application/system tuning required, etc.) is higher than the value of those few minutes’ worth of transactions, my recommendation is to go with asynchronous replication. As I said, that will be the outcome in over 95% of all cases.
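This comparison can be turned into a rough calculator. Converting a per-disaster loss into an annual figure requires an estimate of how often a site failover actually happens. That estimate is an assumption added here, not something prescribed above. Every input is a number you would have to agree on with the business, and the values in the example are invented.

```python
from dataclasses import dataclass


@dataclass
class ReplicationCase:
    # All fields are estimates to agree on with the business (hypothetical model).
    failovers_per_year: float        # expected frequency of a site disaster
    txn_per_minute: float
    value_per_txn: float             # business value of one lost transaction
    async_rpo_minutes: float         # worst case, e.g. twice the cycle time
    sync_extra_cost_per_year: float  # links, performance impact, tuning

    def expected_async_loss_per_year(self) -> float:
        return (self.failovers_per_year * self.async_rpo_minutes
                * self.txn_per_minute * self.value_per_txn)

    def recommendation(self) -> str:
        # Pick async when sync costs more than the transactions it would save.
        if self.sync_extra_cost_per_year > self.expected_async_loss_per_year():
            return "asynchronous"
        return "synchronous"


# Invented example: one site disaster every 20 years, 200 transactions per
# minute worth 50 each, a 2-minute async RPO, 150,000 per year extra for sync.
case = ReplicationCase(0.05, 200, 50, 2, 150_000)
print(case.expected_async_loss_per_year(), case.recommendation())
```

Using the worst-case RPO keeps the estimate conservative. The rolling disaster scenario is also a reminder that the synchronous alternative does not guarantee zero loss either.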

This is not the easy way, because it requires you to engage in consultative discussions with the business people in your organization. But the reward is there: cost savings and performance improvements (hey, that’s why I started this blog).

The alternative is giving the business what they ask for and paying the price. You then watch business people move to cheap cloud service providers (who might not offer zero data loss and high availability at all) while complaining that their IT department is expensive and not flexible.

Final myth: Disasters are very unlikely

By the way, the picture on top of this post is from the Costa Concordia. My wife and I took a cruise on that ship 6 months before the disaster. I still remember one evening, walking slowly along the side deck in the middle of the Mediterranean Sea and looking at the lifeboats over our heads. I told her that they would probably never be used, because modern cruise ships are very safe…

Update: One of my customer contacts (thanks Simon!) pointed out that I made it appear as if SRDF/Asynchronous was a new EMC feature. To avoid confusion: the asynchronous mode (with consistent delta sets) was introduced around 2001/2002 (check here), so it has been around for a while.
