Although EMC and Oracle have been long-time partners, the Exadata Database Machine is the exception to the rule and competes with EMC products directly. So I find myself more and more in situations where EMC offerings are compared directly with Exadata features and functions. Note that Oracle offers more competing products, including some storage offerings such as the ZFS storage appliance and the Axiom storage systems, but so far I haven’t seen a lot of pressure from those (except when these are bundled with Exadata).

Recently I have visited customers who asked me questions on how EMC technology for databases compares with, in particular, Oracle’s Hybrid Columnar Compression (HCC) on Exadata. And some of my colleagues, being storage aliens and typically not database experts, have been asking me what this Hybrid Compression thing is in the first place.

Now there are plenty of sources on the internet explaining some basics but I tried to simplify it down so that even storage savvy people can understand. And I attempted to compare it with EMC offerings. I wrote an internal EMC post for my peers on how they can handle customer questions on HCC. Many of my colleagues suggested I should post it on my public blog as well, so here it is – a bit modified of course to make it suitable for public access.

Note that I don’t have an Exadata in my basement to play around with so everything I know is from blogs, Oracle documentation, internal EMC conversations, Wikipedia, etc. I deliberately simplified things down but still I might make (unintended) incorrect statements – if so, feel free to let me know and I will update my post accordingly.

For storage aliens like us on planet database, to understand HCC, we have to get a grip on what a database table looks like in the first place and how it is stored on disk.

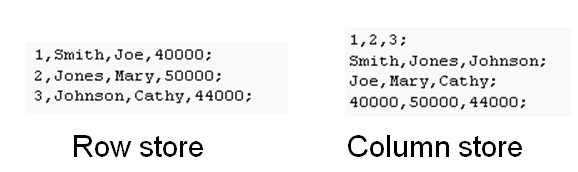

Let’s start with the classic RDBMS (that’s geek language for “database”) style of storing tables in data files and eventually on disk (ignore indexes for a moment). Let’s say we have an employee table that holds Employee ID, Last name, First name and Salary (4 columns of which 1 is of type Integer, 2 are of type text string, and 1 is floating point). Here the example (from http://en.wikipedia.org/wiki/Column-oriented_DBMS – which is an excellent article on columnar store databases if you want to learn more):

| EmpId | Lastname | Firstname | Salary |

|---|---|---|---|

| 1 | Smith | Joe | 40000 |

| 2 | Jones | Mary | 50000 |

| 3 | Johnson | Cathy | 44000 |

An OLTP style application that processes one (entire) record at a time works best by having all data for one record (a table “row”) together in a database block. Note that Oracle’s current default block size is 8K so in that block you can hold a bunch of employee records (next to some metadata overhead). So in order to minimize disk I/O for OLTP you would store “1, Smith, Joe, 40000” as close together as possible so that 1 disk I/O of 8K can (at least) read all data for that employee.

This is only a few bytes so you would probably store a few hundred of such employee records in an 8K block until it is full, then start filling the next one, etc. (note that storage management in Oracle is very complex and my way of describing it is extremely oversimplified). This method of storing data is called row-oriented database or row projection. Most traditional databases use this (i.e. Oracle, DB2, MS-SQL, MySQL, etc etc)

Now you want to save space and want to compress data. So you start compressing the data using, for example, Zlib or run-length encoding. You compress “1, Smith, Joe, 40000” then “2, Jones, …” etc. You might get 1:3 compression that way and store 3 times as much data in the same 8K Oracle block. Not bad!

That is what Oracle Advanced Compression (note: that is not the same as Exadata HCC!) does and is available for every platform as of version 11g (at additional license cost BTW).

Now say you have billions of employees (OK, agreed, this is very unlikely even for the biggest companies, so let’s say these are really transaction records but that does not matter for the example). And you have a data warehouse (versus an OLTP application) that runs a query to find out how many employees having a last name starting with ‘J’ have salaries greater than 43000. In that case, you need to scan much more data than a few blocks. Ignoring indexing and other optimizations for now, you would need to read every last name and salary record of the whole table (a full table scan). Billions of rows, therefore millions of DB blocks. But every time you read a block, you also transfer the employee IDs and First names in those blocks from disk to server memory (you can transfer only full 8K blocks, not parts thereof), although you are not interested in those because of the query, so you’re reading much more disk data than you would actually need.

Can we do this smarter? Yes (but there are drawbacks as we will see). Let’s store all values of the same type together. So we store only employee ids in DB blocks until we have stored all of them, then all last-names, then all first-names, then all salary fields. Now if we want to read all last-names, we read DB blocks with only last-names (no needless transfer of first-names, emp ids and salaries). As the blocks are (if we design it right) sequentially stored on rotating disk, we actually generate full sequential disk reads if we read all last-names (think about that for a moment because it is crucial for understanding data warehouse I/O behavior). Now you understand why data warehouses are bandwidth restricted, and IOPS don’t really matter (ever tried to figure out how much sequential IOPS a silly, slow SATA disk can do? Probably much, much more than you would guess at first ;-)

This is called columnar (oriented) storage.

Furthermore, by having this way of storing table data and given a query like we mentioned before (“how many employees having a last name starting with ‘J’ have salaries over 43000”) will not even touch the disk areas where employee IDs or first names are stored. So by using this storage method you will do less disk I/O and do them faster. Only reading the columns you need and ignoring the rest is referred to as “columnar projection”.

But the drawback is that this does not work well for (OLTP) applications that manipulate single records. Again notice the difference between database systems optimized for huge queries, versus databases optimized for many small requests and updates (anyone care for an Utopian database machine that is supposed to the best in both worlds? 😉

Back to compression again. Let’s assume you compress the data in the columnar store. You apply compression against “1,2,3” (short integers) then “Smith, Jones, Johnson” (text strings), then “Joe, Mary, Cathy” (more text strings) then 40000,50000,44000 (say these are all floating point values). Do you think this compresses better than “1, Smith, Joe, 40000” and so on (mix of data types)? You bet it does. Typically with columnar store you can expect compression ratios of 1:10 (conservative) or even higher than 1:20 (Oracle claims 1:22.6 for HCC).

Drawbacks? Yup. Say you compressed “Smith, Jones, Johnson” and now you want to change “Jones” into “Verylonglastname”. Now you cannot store the new last name because it is compressed without space for larger byte sequences, and changing it would require you to decompress the entire set of last names, change ‘Jones’ into ‘Verylonglastname’ and compress everything back. So columnar compression will not work well (actually, not at all) for data that is modified all the time. Only for store-once, read-only data. (Ever tried to do random I/O on a file stored in a WinZIP archive? If so, let me know if it worked 😉

In an attempt to get the best of both worlds, Oracle created a hybrid between columnar and row projection. In one DB block, they will compress a bunch of values from type A, then a bunch of type B, then of type C, and put some reserve space in the DB blocks to allow for (limited) inline modification of data without having to decompress/re-compress the entire column. They called it Hybrid Columnar Compression (HCC) and is only available for Oracle Exadata. (Again, I heavily over-simplified as the real HCC implementation is much more complex so please don’t flood me with comments on this).

So now the trick question: Why can’t we use this for an Oracle database connected to EMC storage? Why is it only available for Exadata?

Answer: Oracle decided to artificially restrict HCC to Exadata so that data warehouse customers running Oracle would be forced to buy Exadata if they wanted to use this feature. HCC was originally planned as standard Oracle 11g release 2 feature for many platforms but then decided to limit it to Exadata only.

Oracle might claim that the Exadata architecture is required for it to work (accompanied by lots of marketing messaging on their storage cell software etc) and some of those claims are half-true (i.e. HCC can be optimized by the Exacell software and in some cases, offload the compression, but it would technically run fine with EMC or any other storage).

Actually, since a while, Oracle started to allow Exadata HCC compressed data on their own storage offerings (the SUN ZFS appliance and the Pillar Axiom SAN boxes if you connect them to Exadata) which proves that HCC can technically work with any storage, not just Oracle’s. Neither the ZFS appliance nor the Pillar box have the special database offloading functions that the Exadata storage cells have.

So how do we at EMC match up with HCC? We can offer two distinct areas were we think we have an excellent alternative.

If our customer is open to consider alternatives to Oracle database, we offer Greenplum. Greenplum has a feature called “polymorphic storage” (agreed, marketing might have come up with a better name) which, in my strong opinion, is much better than HCC (for reasons that I cannot explain without writing another few pages of technical boring stuff, plus, the EMC/Greenplum guys can explain it much better than I can, so ask them!).

But in short: Greenplum’s implementation of columnar store is pure (no hybrid) which has the advantage of being more efficient (less excess I/O, depending on your query) but at the disadvantage of not being able to easily update a single row in a columnar table. This is where the “Polymorphic” part comes in. In Greenplum, for example, you can have a single table that has:

– row storage, no compression (fast for OLTP-like updates), say, for the most recent 3 months of data

– row storage, moderate compression (less fast but a bit more space efficient) for, say, anything between 3 months and a year old

– column storage, light compression for any data 1-3 years old

– column storage, heavy compression for any data over 3 years old

And if you find that, for example, you have many updates on data 3-6 months old and this is slow due to the compression policy, you just change the policy so that the non-compressed tier applies to 0-6 months old data, re-distribute the data (one command) and you’re done. This is real ILM (tiering) in databases and Oracle has no such thing (except by attempting to implement it yourself the hard way using partitioning etc).

If a customer can or will not consider Greenplum, and has to stick with Oracle for whatever reason, then either he will have to buy Exadata, or cannot use HCC. It’s that simple (for now, until an influential customer forces Oracle to go open with HCC allowing 3rd party vendor integration, much like they did with Oracle on VMware support recently). So let’s see what HCC really brings us in comparison with EMC solutions.

Let’s assume for a moment that Oracle’s claim of, say, 1:20+ compression is true (which in reality is not, unless the customer, by magical coincidence, has highly compressible data, which tells us something nasty about the DWH implementation itself IMO).

An Exadata customer has choices to make:

- SATA 2TB disks or SAS 600GB disks (no mix & match, one size fits all)

- 2-way mirroring (compromising both data availability and data integrity) or 3-way mirroring (significantly driving up the already very high cost)

In my experience, many customers will choose SAS disks for performance and if availability is of any concern for the business (OLTP consolidation?) they will have to go with 3-way mirroring.

Assume the database has 30 TB of (uncompressed) data of which 25 TB can be stored using HCC (the other 5 can not).

After HCC compression the 25 TB will be reduced to 1.25 TB but due to 3-way mirroring be expanded again to ~ 4 TB (raw disk). The data not on HCC (5 TB) will expand to 15 TB (3-way mirroring, remember?) Totalling 19 TB (say 20TB because of some metadata overhead etc). So the actual DB to raw disk ratio is 25:20 is 20% data reduction.

Now EMC. We don’t have HCC but we could use Oracle Advanced Compression, only giving us 1:3 compression so the 30TB will become 10 TB for all data.

If we can use our ILM and storage tiering strategies, then some of that data may live on SATA disk (much more cost effective than Oracle’s SAS – oh and BTW – remember that IOPS don’t matter in data warehouses? Bandwidth counts! So SATA disk might do the job just fine even for huge queries). Let’s say we implement our de facto strategy of 5% EFD (flash drives), and say 30% high-iops SAS/FC and 65% SATA. And we use a mix of RAID-5 (FC) and RAID-6 (SATA) for protection (not 3-way mirroring) so our 10 TB usable will expand to maybe 15 TB raw (hey, that’s less than Oracle’s 20 TB!?!? 😉

Now, let’s say your customer wants to create a daily copy for reporting, application testing, or whatever. How about space efficient clones? Storage snaps anyone?

What’s Oracle’s answer for that? Oracle DBClone or RMAN to always create a 100% copy (not space efficient) on the same (very expensive) Exadata? Copy (slooooooowwww) over the LAN to a non-Exadata system to reduce cost? Then what to do with HCC compressed blocks that have to be converted back as the external system cannot run HCC?? Will not work…

(note that the lack of data movement options outside of Exadata might be the reason that Oracle now allows customers to opt for Axioms or ZFS appliances as a kinda-sortof-bitlike-maybe-hopefully-notreally second storage tier. I might post something in the future on why this is a bad idea, but in short: databases distributed on multiple storage subsystems are vulnerable to consistency problems, and ZFS might not be the right option for database files due to extreme fragmentation).

So in our example:

DB size: 30TB uncompressed, requirement for (let’s give Oracle a break) only one database copy.

Oracle Exadata: Prod 20TB + test copy 20 TB = 40 TB (does not even fit in a full frame with SAS disk)

EMC infrastructure: Prod 15TB + test snap 3 TB (20% is more than enough) = 18 TB (plus the added value of data integrity protection, fast clones, expansion of only one storage type such as SATA disk, connectivity for non-Oracle apps or multiple Oracle database versions, server/database virtualization, etc etc etc)

Does our offering work in all occasions? Well, I know customers who depend so heavily on HCC for lots of their data that when I tried to configure an EMC infrastructure as alternative, I needed at least 3 times more (raw) disk space. So YMMV. Still, the excess of disk space might still have a lower TCO in comparison with Exadata, but again, that depends on the case.

The best solution would be if Oracle would start honouring their “Open Architecture” marketing and go really open – allowing 3rd party vendors to connect to Exadata and allow HCC compression (as they do with their own Axioms and ZFS appliances), but for that, I ask you, mr. customer, to put some pressure on your Oracle account reps to make this possible in the future.

For now, I think we can go without HCC in most cases.

Look mum, no Hands HCC !

Update: Some references.

http://dbmsmusings.blogspot.com/2010/01/exadatas-columnar-compression.html

http://www.oracle.com/technetwork/middleware/bi-foundation/ehcc-twp-131254.pdf

https://blogs.oracle.com/datawarehousing/entry/new_whitepaper_on_exadata_hybr

http://www.dbms2.com/2009/09/03/oracle-11g-exadata-hybrid-columnar-compression/

http://www.dbms2.com/2011/02/06/columnar-compression-database-storage/

Update 2:

My colleague Ofir Manor has done some investigation around real world savings with HCC. I found the results quite shocking. You can find his blogpost here: http://ofirm.wordpress.com/2013/01/31/exadata-hcc-real-world-storage-savings/ (recommended read!)

![]()

Have you guys tried a real life comparison of space savings with HCC on Oracle storage Vs advanced compression on your storage ?

No direct comparisons as far as I am aware. But we have many customers running Oracle on EMC as well as on Exadata so we have some insights 🙂

Take a look at this if you’re interested in real-world compression ratios with HCC:

http://ofirm.wordpress.com/2013/01/31/exadata-hcc-real-world-storage-savings/

Great article – agree with mostly everything. Just to be fair – I can witness in certain cases HCC does provide 1:20 compression ratio. I personally compressed a table (FOR QUERY HIGH) that had uncompressed size of 50TB+ to 2.5T and query performance on that table did not degrade at all.

Hi Boris,

Interesting! I would like to know what that means for the entire database – I can imagine if that table is 90% of the entire DB and you can compress it that much, it makes a real difference. If you have another 50TB or so being indexes and other, less compressible tables, percentage wise it’s a bit less. Can you share more details? Appreciated!

Hi Bart,

Would you be kind enough to help me with SQL command to estimate the space required to restore data from Exdata where HCC is enabled to non Exdata environment. I have tried various queries but the results are not conclusive

regards

Moncy

Hi Moncy,

I see this question (and similar variations) more and more often but I will reply in a separate email.

Regards

Bart